JST ACCEL

EMBODIED MEDIA PROJECT

FACTBOOK 2014-2019

[ Cover | For humans to live like human beings | Envisioning a new industry based on embodied media | The essence of reality brought to life with the power of the virtual | Investigating Haptic Primary Colors / Developing an Integrated Haptic Transmission Module | Wearable Haptic Interface/Embodied Content Platform Creation | Forming a co-creation community | Creating the Embodied Telexistence Platform | Turning ideas into reality | Research Organization | Research Period | Paper / Patent | Events | Researchers ]

The JST Accel Embodied Media Project has been researching haptics and telexistence technology to empower humans to live like human beings.

The cover illustration portrays the sending and receiving of people’s corporeal information across a network in a virtual space, within the confines of a physical earth.

The story unfolding in the illustration represents a future envisioned by the Embodied Media Project.

The alternative for hazardous work

By making use of telexistence robots in hazardous work environments, such as disaster areas and outer space, humans can do work without being on location, thus reducing accident risk and development costs. (①, ⑤, ⑨, ⑪)

Using people’s abilities beyond the limits of time and space

By applying telexistence, people can not only enjoy leisure activities like traveling the world, shopping or playing sports in an instant, but also deliver their creative talents and specialized abilities in fields such as medicine to places around the world. In addition, by making use of regional time-zone differences, people with particular lifestyle circumstances, such as raising children, can find work that fits their own schedule. (②, ④, ⑤, ⑥, ⑦, ⑩, ⑬, ⑭)

Expanding, supplementing functions of the body

By using a telexistence robot, people with disabilities or elderly people with physical ailments can compensate for their physical limitations and work or enjoy the outdoors. (⑤, ⑨, ⑫)

A connection that transcends distance

With sports or music concerts, going in person can provide a sense of immediacy, but with haptics people can feel a oneness with the experience that transcends distance, enabling them to experience the excitement of the stadium with all their senses. (③, ⑧)

Expanding the experience with the virtual space

By creating bodily sensations in a virtual space, people can feel like they’ve transformed into a protagonist in a game or a famous person in history. In addition to entertainment, this enables applications in education as well. (⑫)

For humans to live like human beings

Susumu Tachi

(Professor Emeritus, The University of Tokyo)

When it comes to embodied media, virtual reality and telexistence are the best-known examples. With the former, you take on a virtual avatar and enter into the virtual world created by a computer; with the latter there’s a physical avatar that you interact with remotely from a different physical location. Both enable you to exist in a world outside your own.

As the internet has advanced, information such as words, voice audio, images and video has become ubiquitous as media. The recent Internet of Things (IoT) has turned things into information, and by connecting to the web, those things are becoming ubiquitous. The next step beyond that is embodied media. As technology advances and the human body becomes information on the net, humans themselves become ubiquitous.

What would that technology look like in the real world? It could be having avatars placed in locations throughout the world, with users being able to access them by connecting online. They could log off anytime, just as with computer-generated virtual worlds. From the user experience perspective, it makes no difference whether the avatar’s world is real or virtual. You still exist in both as an avatar to gather information, have new experiences, enjoy yourself, or even work.

If we have a telexistence society where human functions can be teleported in an instant, it would fundamentally change how industry and people work. It resolves issues of poor working conditions, and revolutionizes how and where industries can be set up. When it’s possible to work at a factory from any location in the world, labor doesn’t need to be centralized in major cities, which averts the population decline of rural areas. And by drawing on labor from different time zones around the world, there would be no need for late-night shifts. It also enables people to be a part of the workforce even while raising kids or being a caregiver, making society more conducive to quality family life.

Global businesses would also benefit from lower business travel costs. Commuting becomes unnecessary, which helps reduce traffic congestion. Without the need to live close to work, labor doesn’t need to be centralized in major cities, which again revitalizes rural areas. Work-life balance is improved when people live where they want, have more free time, and live life the way they see fit.

Also, with prosthetic robotic bodies, body functionality can be restored or expanded upon. The elderly and people with disabilities can then contribute as vigorously as younger people while leveraging their experience, which substantially improves the quality of the labor pool.

Recruiting top-tier talent from around the world in specialized fields like technology and medicine becomes easier, enabling the placement of the right talent. That also means emergency assistance can be provided in an instant, from anywhere around the world. It goes without saying that the technology can be used for tourism, travel, shopping, leisure and education as well.

Creating a “virtual human teleportation industry” brings about a telexistence society that’s greener and lower in energy consumption, which would dramatically improve people’s quality of life, comfort, health and convenience.

The most important thing is for humans to live like human beings. I think that’s the whole reason for telexistence and virtual reality--for everyone to be who they are to the fullest. Not just us, but everyone around the world. From there we can expand and supplement human ability. Even if a person’s body is impaired, by using a telexistence body as their own, they can become the ultimate cyborg. By interfacing with a new body, a person can work with their hands that were once lost, for example. With restoring, strengthening or expanding human functionality, people are then free to pursue what they want to do most. What that looks like depends on the individual, but I think science and technology are supposed to be used to realize the dreams of every person.

Many people in the world unavoidably lead difficult lives due to poverty or conflict. To understand that reality, there’s a world of difference between simply watching news about refugee camps on TV and using telexistence to visit a camp to see it and ask about it “in person.” Plus, you could use telexistence for volunteering: providing counseling, care or medical treatment if you’re a specialist in your field. In a telexistence society, people around the world can use their added free time to help those in need anywhere, without being limited by travel.

With telexistence giving people new augmented bodies, and instantaneously transporting them virtually, it will empower humans to live more like human beings, greatly contributing to humankind in the years to come.

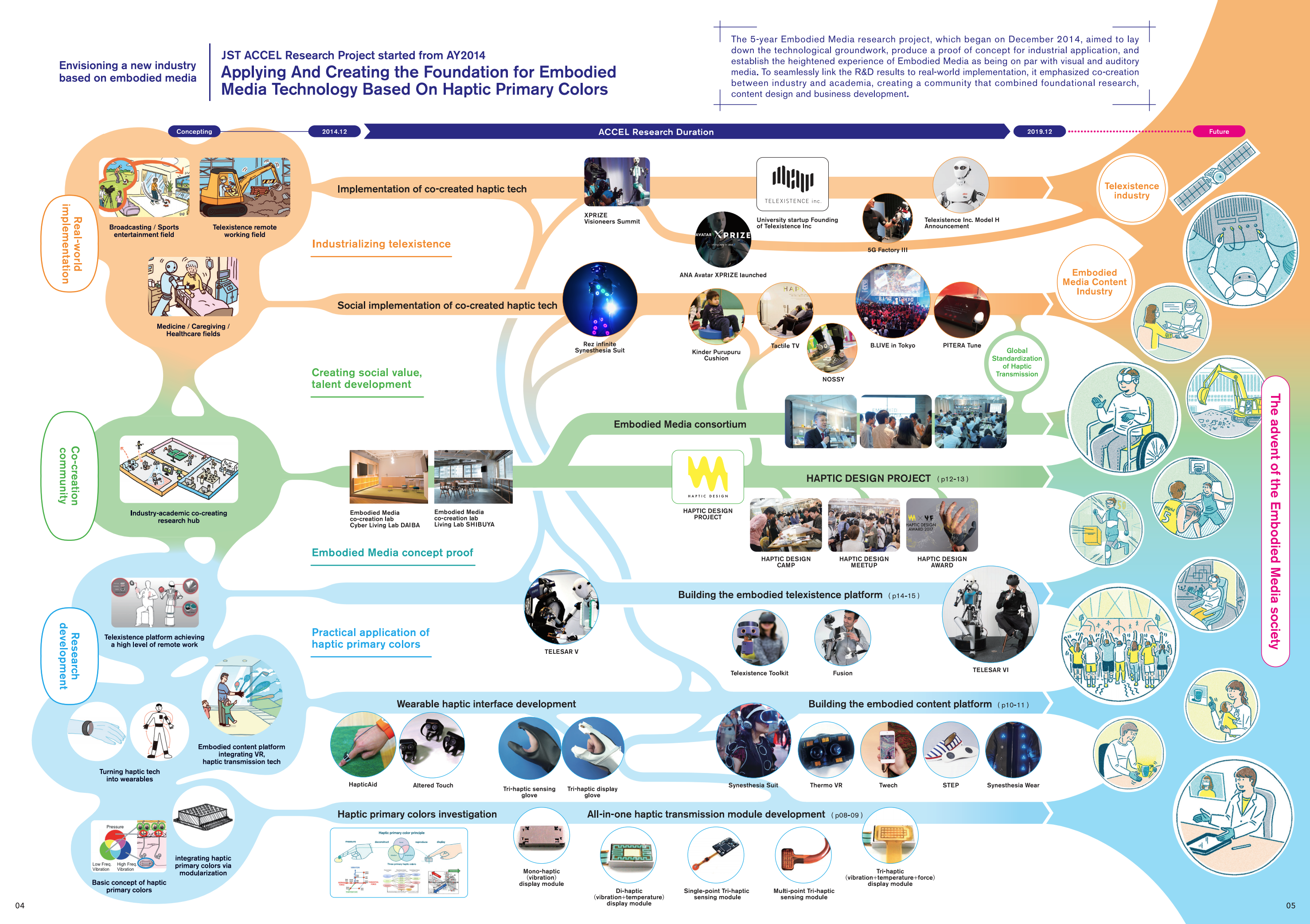

Envisioning a new industry based on embodied media

JST ACCEL Research Project started from AY2014

Applying And Creating the Foundation for Embodied Media Technology Based On Haptic Primary Colors

The 5-year Embodied Media research project, which began in December 2014, aimed to lay down the technological groundwork, produce a proof of concept for industrial application, and establish the heightened experience of Embodied Media as being on par with visual and auditory media. To seamlessly link the R&D results to real-world implementation, it emphasized co-creation between industry and academia, creating a community that combined foundational research, content design and business development.

The essence of reality brought to life with the power of the virtual

Research Director

ACCEL Embodied Media Project

Susumu Tachi (Professor Emeritus, The University of Tokyo)

What was the envisioned plan for ACCEL?

ACCEL is a research and development program from JST (Japan Science and Technology Agency). JST selected projects for ACCEL from promising cutting-edge research that came out of the Strategic Basic Research Programs (CREST, PRESTO, ERATO, etc.), which had great potential but couldn’t be pursued by private companies and other organizations due to perceived risks. In December 2014, JST selected the Embodied Media Project for ACCEL, led by Prof. Susumu Tachi as research director. With Dr. Junji Nomura assigned as the program manager (PM) for innovation-oriented R&D management, we sought to create viable technology that can be a link to further development by businesses, venture firms and other enterprises.

In line with ACCEL’s purpose of “being the link to the real-world implementation of R&D technology,” we had two big accomplishments. First, by creating a consortium, we were not only able to share the research concepts and technology so that many could understand them, but also created an environment where they can be implemented in society via collaborative research. Second, we were able to establish the startup Telexistence Inc., which is centered around the developed technology. Looking ahead, we’ll need to figure out how to shape our society using telexistence.

Having created the seed for a world where telexistence use is widespread, we feel the five years of ACCEL have been very worthwhile. With this initiative as the starting point, we’re striving to create societal change on a global scale.

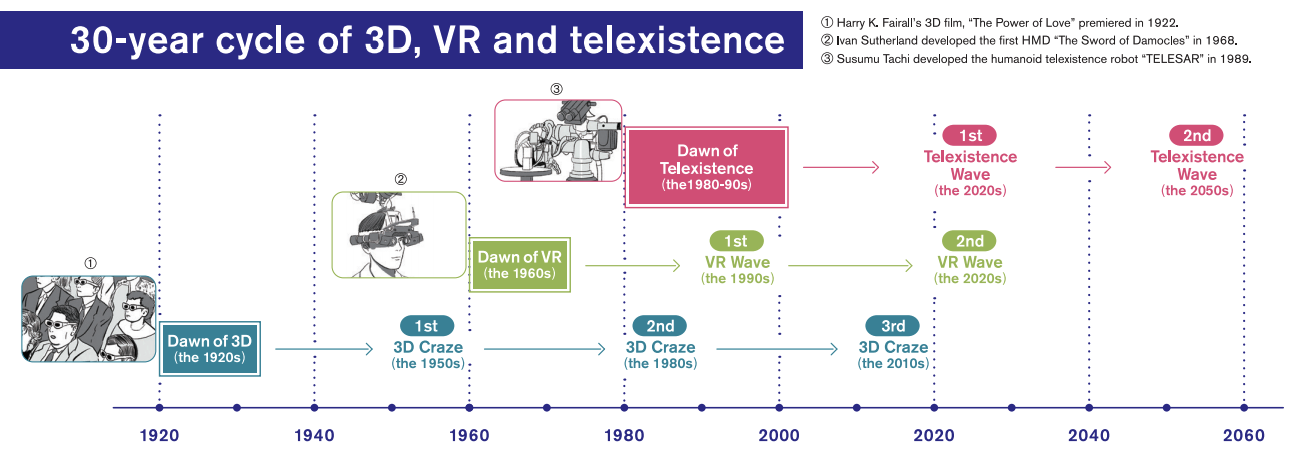

What kind of developments have there been with VR and telexistence prior to this project?

Turning data into 3D or VR has been looked into in the past for creating media with embodied elements, but that innovation has happened in alternating 30-year spans. Only recently has there been a second wave of innovation in VR technology; before that it was largely forgotten. Now, device prices have fallen a hundredfold, while performance has risen 100x or even 100,000x. The advancements in computing inevitably gave rise to high-performance VR devices like Oculus Rift, and as we approach 2020, VR has become thoroughly mainstream.

In conjunction with the development of 5G technology, people are evaluating how to turn haptics into communication data. In a 5G environment, lag is reduced to 1 ms (4G lag is tens of ms). Once such speeds are reliably established, personal haptic data transmission becomes possible, enabling people to share tactile sensations without lag.

Typically, humans perceive something refreshed at around 50 Hz as continuous. With visual communication at 50 Hz, about 20 ms is picked up as a single frame, so slight latency causes little trouble. But with touch, even a small delay means the sensation isn’t communicated. That’s why a 5G environment would open a lot of doors, and it’s why telecommunication companies are vigorously seeking telexistence technology that takes advantage of 5G.
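The timing figures quoted above can be put side by side with a little arithmetic. The following is only an illustrative sketch: the 50 Hz rate and the 1 ms 5G figure come from the interview, the 30 ms value is an assumed stand-in for "tens of ms," and the function names are hypothetical.

```python
def frame_period_ms(rate_hz: float) -> float:
    """Length of one frame in milliseconds at the given update rate."""
    return 1000.0 / rate_hz


def latency_in_frames(latency_ms: float, rate_hz: float) -> float:
    """How many frame periods a given network delay spans."""
    return latency_ms / frame_period_ms(rate_hz)


VISUAL_RATE_HZ = 50.0   # rate quoted in the interview
LATENCY_5G_MS = 1.0     # figure quoted in the interview
LATENCY_4G_MS = 30.0    # assumption: one possible value for "tens of ms"

if __name__ == "__main__":
    print(f"frame period at 50 Hz: {frame_period_ms(VISUAL_RATE_HZ)} ms")
    for name, latency in (("5G", LATENCY_5G_MS), ("4G", LATENCY_4G_MS)):
        frames = latency_in_frames(latency, VISUAL_RATE_HZ)
        print(f"{name}: {latency} ms spans {frames:.2f} frame periods")
```

Under these assumed numbers, a 5G delay is a small fraction of one 20 ms frame, while the assumed 4G delay is longer than a full frame.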

I’ve reiterated this from time to time, but what’s important now is to move away from the notion of “virtual=imaginary.” Actually, it’s “virtual=real.” The externals may not look real, but the essence is. In other words, virtual means existing in essence or effect though not in actual fact or form. That I think truly defines “VR.”

When virtual information can be reproduced without lag compared to real sensations, then that’s already real. That doesn’t mean it’s reproduced in the same way as real life. Substantially representing the world virtually means grasping the world at its essence. For example, using VR or telexistence to simulate an evacuation during a disaster translates into concrete action during a real evacuation. The key question then is, “what essential elements need to be in VR to produce the desired action?”

Can you explain the strengths of telexistence?

The advantage of telexistence is that it allows you to take the helm of autonomous, intelligent robots. In other words, through supervisory control of intelligent robots, letting them do what they can and having the person do what only a person can do, a single person can use multiple robots. In addition, when using telexistence with a robot you can log off anytime, and the robot then returns to its original place. On top of that, when a person does remote work via telexistence, that output is stored as data, and as multiple people around the world use telexistence to work, the aggregate data can be used to automate the work with a robot. So telexistence has an important role when it comes to the machine learning of tasks.
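The division of labor described above can be sketched in a few lines. This is a hypothetical illustration, not the project's actual control software: the `Task` type, the self-assessed `robot_confidence` score and the 0.8 threshold are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Task:
    robot_id: str
    description: str
    robot_confidence: float  # hypothetical self-assessed score in [0, 1]


def supervise(tasks: List[Task], threshold: float = 0.8) -> Tuple[List[Task], List[Task]]:
    """Split tasks between robot autonomy and the human operator.

    Tasks a robot is confident about run autonomously. The rest are
    handed to the operator, and those sessions are kept as
    demonstrations that could later be used for training.
    """
    autonomous: List[Task] = []
    demonstrations: List[Task] = []
    for task in tasks:
        if task.robot_confidence >= threshold:
            autonomous.append(task)
        else:
            demonstrations.append(task)
    return autonomous, demonstrations
```

With three robots reporting confidences of 0.95, 0.4 and 0.8, only the second task would reach the operator, so one person can oversee all three robots.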

What type of society will be realized in the future with telexistence?

Telexistence can be thought of as the ultimate cyborg. Without modifying your own body, it can restore body functions, or strengthen them. Telexistence can give birth to a “virtual human teleportation industry” that enables people to move in an instant to a different location, without being bound by distance, age, environment or physical ability. A society with telexistence can not only spur leisure activities or humane economic development through remote labor, but also tackle societal issues the world is facing. For example, by making use of avatars, doctors, educators and specialists can be brought into disaster areas or areas that are severely lacking in expert resources.

Susumu Tachi, Ph.D.

Professor Emeritus of The University of Tokyo, Ph.D. in Mathematical Engineering and Information Physics from The University of Tokyo, and founding president of the Virtual Reality Society of Japan (VRSJ). In 1980, Dr. Tachi was the first to propose the concept of telexistence, and has conducted research for its realization ever since. Other internationally recognized works include the world’s first guide dog robot for the blind (MELDOG), haptic primary colors, autostereoscopic VR, and Retro-reflective Projection Technology. Recipient of the IEEE Virtual Reality Career Award, Japan Minister of Trade and Industry Prize, and Japan Minister of Education, Culture, Sports, Science and Technology Prize. Initiated the IVRC (International-collegiate Virtual Reality Contest) in 1993, and published numerous books including “Introduction to Virtual Reality” and “Telexistence.”

Investigating Haptic Primary Colors / Developing an Integrated Haptic Transmission Module

To realize a future where haptics can be readily recorded and reproduced.

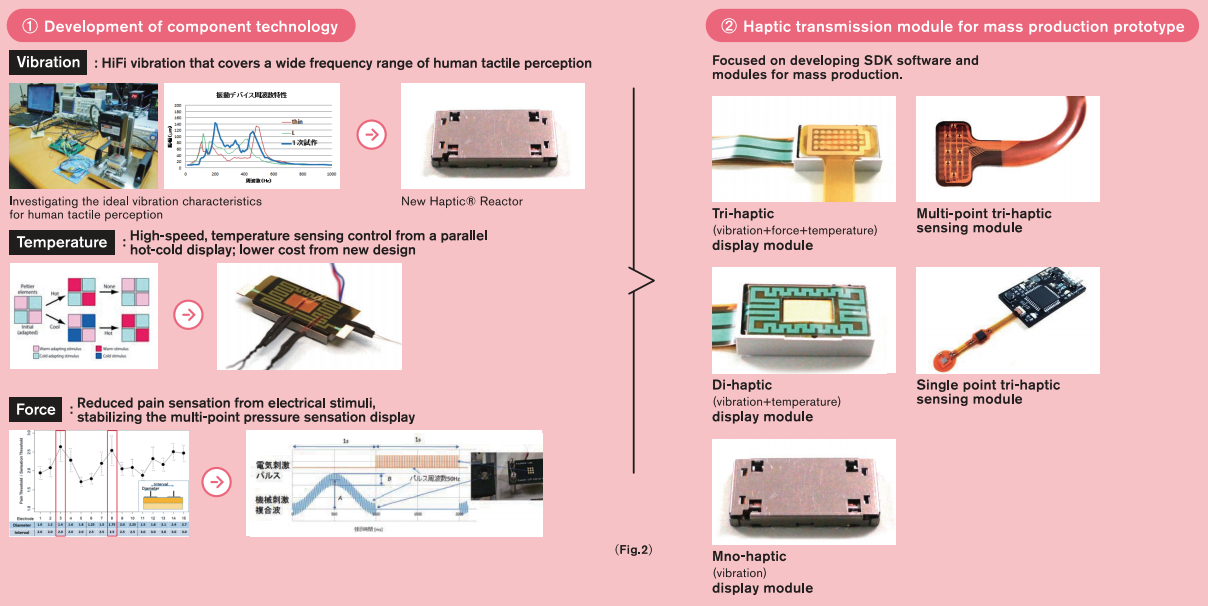

What were the roles for the team developing ACCEL’s integrated haptic transmission module?

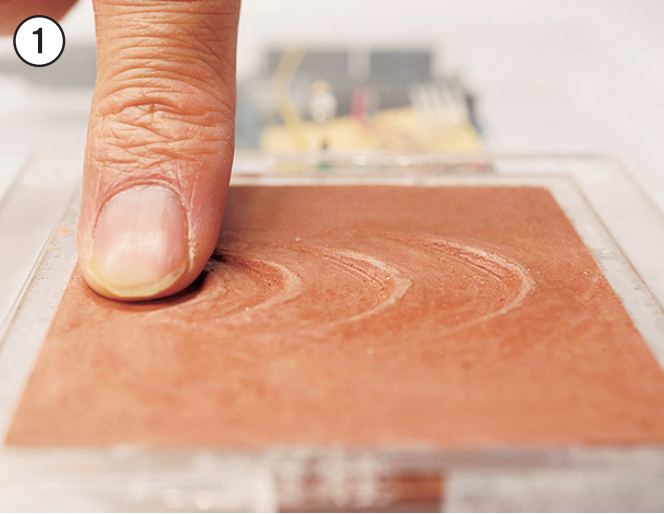

Hiroyuki Kajimoto: Based on the principle of haptic primary colors, we developed software and a haptic module to record and reproduce haptics. Up until that point I was researching electrical haptics, and Prof. Katsunari Sato was doing the world’s first research on temperature display and minimizing heat generation. Mektron did impactful work in the past by putting numerous haptic sensors on a robot’s hand. Alps Alpine was creating high-quality and compact vibration feedback devices, and had integrated quality vibrational displays into its products for many years. This project brought all of those elements together.

Hidekazu Yoshihara: Since we needed flexible printed circuits (FPC) to mount the module, we used our expertise to design a wiring structure that fit curved surfaces and wouldn’t easily break.

Yasuji Hagiwara: Leveraging Alps Alpine’s expertise in miniaturization technology, we not only made the di-haptic module, which combines vibration and temperature display, more compact, but also contributed by making it radiate heat effectively.

Kajimoto: For the module we developed HiFi vibration, which covered a wide frequency band of human tactile perception. For force, we reduced the sensation of pain in electric stimulation, and achieved stability in displaying multi-point pressure sensations. From the outset, our development kept mass production in mind, and we gained a lot of insight for real-world implementation.

Sato: Since my focus was temperature, I configured the temperature display component in parallel, implemented high-speed temperature sensation controls, and looked into its real-world feasibility as well as cost reduction.

What was the biggest challenge with the project?

Kajimoto: The biggest challenge was putting the electric stimulation display module on top of the vibration and temperature di-haptic display module. When putting the electric stimulation film on top of the temperature display, the heat would no longer transfer, making the temperature display non-functional. So, we kept changing the design requirements to try and resolve this. Also, we did countless tests to ensure stability when numerous people use the device multiple times.

Haptic rendering application

Then there was the software development. The software integrated into the hardware is only a couple hundred lines of code; the challenge lies in the rendering algorithm. Producing a tactile sensation with software could take as little as a single line of code, but it requires extensive experimentation to verify. And we haven’t figured out all the answers yet.

Sato: For vibration, if we transmit the vibration waveform as-is, then the output correspondingly reflects that. Adding force and temperature stimuli enables richer tactile expression. But how to combine the three stimuli, and what tactile expression results, is still unclear. It’s something we still need to work on.

Hagiwara: Devices that display temperature stimuli haven’t really been around until now, and with news of this project spreading, it’s not only become a topic of discussion, but has seen many issues raised—for example, how different people feel temperature, or potential safety issues. So, we need to build up the expertise in those areas moving forward.

How has industry-academia co-creation been valuable for this project?

Sato: Being able to debate which ideas to implement was significant. What I valued was finding a compromise between physical performance and the subjective sense of feel. It’s similar to VR, in that realism is defined by a person being able to feel the experience. If we can reproduce an environment that feels real, then it’s not a big issue even when the physical performance isn’t perfect, which freed us to experiment and combine a variety of technologies.

Kajimoto: We believed and still believe that if we’re able to achieve the technology at the fingertips, then anywhere else is possible, and that creating tri-haptic sensing/display gloves would be tackling the most difficult challenge.

Tri-haptic sensing gloves, Tri-haptic display gloves

At the time there weren’t any devices that could be worn at the fingertips to record and reproduce all the haptics. There were some that recorded/reproduced only vibrations, or only force. What we were making was one of a kind.

Yoshihara: Five years ago, our starting point was little more than a pair of plastic gloves. We started with creating haptics for the index finger and thumb, then made the rest of the fingers in a continuous trial-and-error process.

Kajimoto: When we started development, we realized nobody really knew how to create realistic tactile sensations. Because of that, we had to revisit the basics of haptics, in some ways becoming fundamentalists. If we set a goal of producing realistic tactile sensations, then at the very least the haptic researchers in this team had to become more deeply invested. Being involved in this project really showed us that.

Yoshihara: Including the phrase “haptic primary colors” itself, this R&D still doesn’t have much recognition. The technology to open up new worlds with VR goggles and haptic devices is already before us, and I’d really like word to spread of the haptic primary color principles that undergird it.

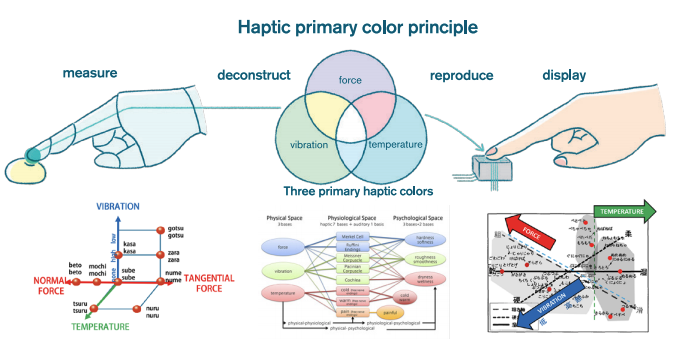

About the principle of haptic primary colors

The haptic primary color principle is based on physiological knowledge, and is a technological concept that endeavors to deconstruct, transmit and display haptic information. Just as the three primary colors for sight (red, green, blue) are based on the wavelength characteristics of cone cells in the eye, the haptic primary colors are defined as the three stimuli of force, vibration and temperature.

As research and development of the technology advances, haptic information can be comprehensively measured, recorded, transmitted, reproduced and displayed. In conjunction, fabricating haptic sensing modules and display modules that rival imaging modules (such as CCD) and display modules (such as LCD) becomes a possibility, opening the door for industry applications. The haptic primary color principle is a technological concept that aims for a future where haptic media can rival visual or auditory media.
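By analogy with an RGB pixel, a single haptic reading under this principle could be represented as three channels. The sketch below is a minimal illustration under stated assumptions: the `HapticSample` type, its units and the JSON encoding are hypothetical and do not reflect any format defined by the project.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class HapticSample:
    """One reading split into the three haptic primary colors."""
    force: float        # assumed unit: newtons
    vibration: float    # assumed: normalized amplitude in [-1.0, 1.0]
    temperature: float  # assumed unit: degrees Celsius


def encode(sample: HapticSample) -> str:
    """Serialize a sample for transmission (JSON chosen for illustration)."""
    return json.dumps(asdict(sample))


def decode(payload: str) -> HapticSample:
    """Rebuild a sample on the receiving side for display."""
    return HapticSample(**json.loads(payload))
```

A sample recorded on the sensing side, such as `HapticSample(force=0.5, vibration=0.1, temperature=31.0)`, survives an `encode`/`decode` round trip unchanged, which is the record-transmit-reproduce path the principle describes.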

(Figure 1)

URL: https://tachilab.org/jp/about/hpc.html

Integrated haptic transmission module design, mass-production prototype

Following the vibration/temperature/force display module, development of the mass-production prototype module began.

(Figure 2)

Wearable Haptic Interface/Embodied Content Platform Creation

Record, create, transmit people’s embodied experiences

Creating an embodied content platform

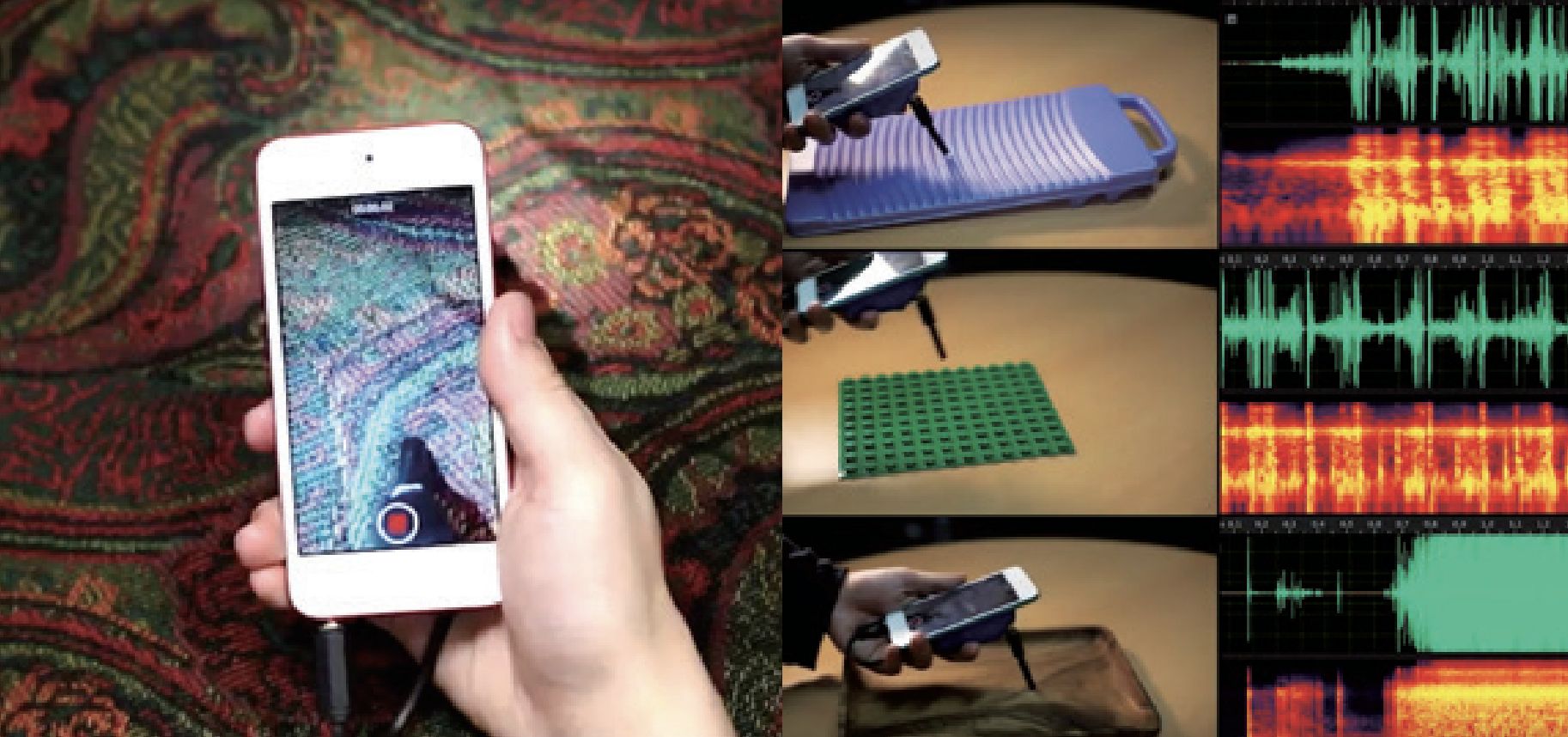

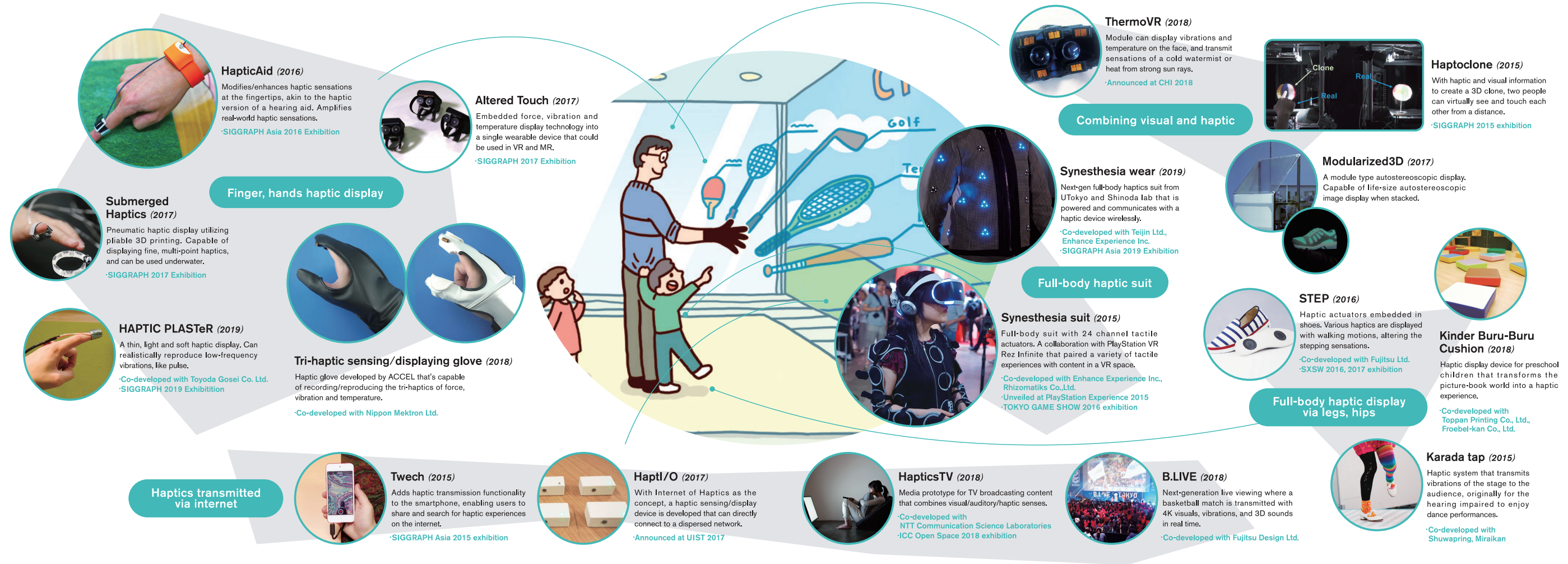

In a near future where the technology to record and reproduce people’s haptic sensations is realized, and embodied experiences via the internet become the norm, what new haptic technology experiences can we expect? ACCEL’s embodied content platform aims to bring haptics to the fields of broadcasting and entertainment, and has researched and developed experiences combining visual/auditory senses with full-body haptics.

Combining component technologies to create rich experiences

Minamizawa: As we gain insight on principles of human haptics and somatic sensation, how will the sensing/display component technology be applied, and what experiences will be created? ACCEL’s embodied content platform strives to tangibly show such experiences. What’s possible when haptics are added to existing media such as TV and smartphones? What kind of services come about when wearable haptic interfaces enable people to record their experiences and share them online? How will future entertainment enhanced by haptic media technology delight people? When somatic senses are enhanced/expanded upon, how will people’s abilities and actions be altered? To answer these questions, our group not only researches each component technology, but also designs and develops wearable as well as mobile interface prototypes that can really be used. Also, by developing content design methods that integrate haptics into embodied experiences, we are proposing experiences in a variety of fields, including sports and entertainment. Partnering with companies that have concrete business needs, we create experiences open to the public that widely showcase the content possibilities with embodied media. We aspire to work with people of all disciplines and co-create experiences of the future, which are born out of embodied media.

The current foundational technology that supports content experiences

Shinoda: I was in charge of creating and implementing the foundational technology for haptic experiences. Human haptics can be divided into deep sensation and skin sensation. Deep sensation is mainly the force perceived in the muscles. To fully reproduce this and display even powerful forces, you need a sturdy mechanism. On the other hand, skin sensation can be adequately displayed with wearable devices. This doesn’t necessarily limit haptic experiences. We’ve recently understood that if we can firmly apply skin sensation stimuli, then numerous haptic experiences can be reproduced. We aimed for wearables that can easily be put on and can reproduce rich haptic experiences.

What becomes important is not just the technology, but adapting it to the user. Haptics reproduced by mobile, wearable devices often have some kind of shortfall. If users are aware of it and adapt to that, then it’s more useful. A concrete example would be driving a car, which has been standardized. And I think it’s important for there to be standardization with the application of haptics and embodied media.

“Internet of Haptics”

Looking towards a future linking haptics with the internet

To realize a future where embodied experiences can be shared on the TV or internet, it’s important to establish not only the content or UI, but also transmission standards. In anticipation of next-generation 5G, ACCEL, in association with various private companies and universities, is working with the IEC (International Electrotechnical Commission) to establish a global standard for transmitting haptic information, alongside visual/auditory transmission standards.

Twech, a platform to record/share/search haptic sensations, represented as video

http://embodiedmedia.org/project/twech/

Forming a co-creation community

Making a career out of Haptic Design

HAPTIC DESIGN PROJECT

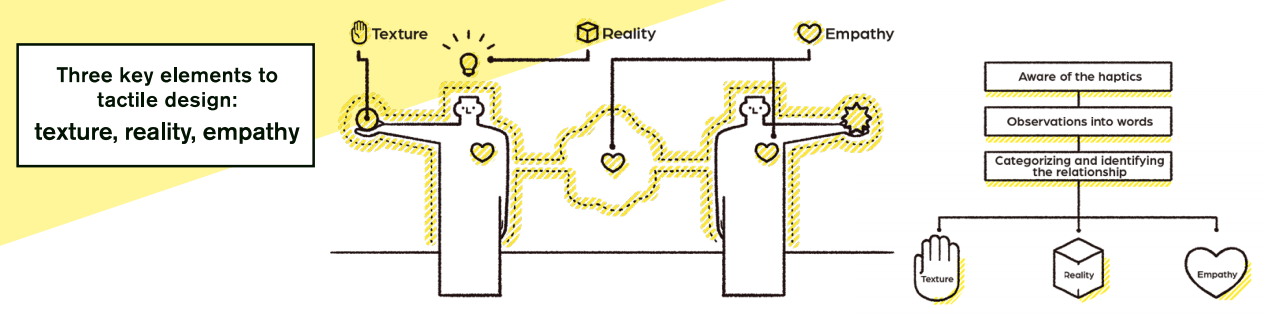

We connect with various people and objects in our daily life, establishing relationships with them through our sense of touch. Like the visual and sound designs we encounter every day, which engage our sense of sight and hearing, haptic design is all around us. We can not only design the tactile sensations of materials or the transmission of information, but also the very relationships between the objects and persons using them. We call this Haptic Design, and pursue the discipline from a variety of perspectives.

http://hapticdesign.org

From a hobby to work. From work to a career.

With community as the starting point, we drew interest to haptic technology to spur a business-building movement. We held meet-ups to create conversations around the topic, as well as hackathons and workshops, which used sensor devices that generate haptic feedback to create haptic experiences. These activities aimed to instill new value systems in individuals, which can help bring haptic technology into the workplace. Participants organically networked at the events, encouraging and facilitating the co-creation of new products, services and businesses.

Haptic Designer as a career

As the opportunities to apply haptic technology with products, services and businesses increase, the presence of people who approach haptics as a career becomes vital. Like visual designers who handle the sense of sight, or sound designers who handle the sense of hearing, we’ve identified specialists who handle haptics as haptic designers. Haptic designers not only design the feel of materials, or how haptic information is conveyed, but also the relationships that exist between people and objects.

Haptic Design starts with becoming aware of the haptics we experience in our daily life. First, we observe haptics through the elements that can create an emotional connection: texture, reality and empathy. Then, we put those observations into words, categorizing and identifying the relationships they have in order to create a foundational basis for haptic design. By organizing that information, we can then design the objects and concept experiences from a haptic perspective.

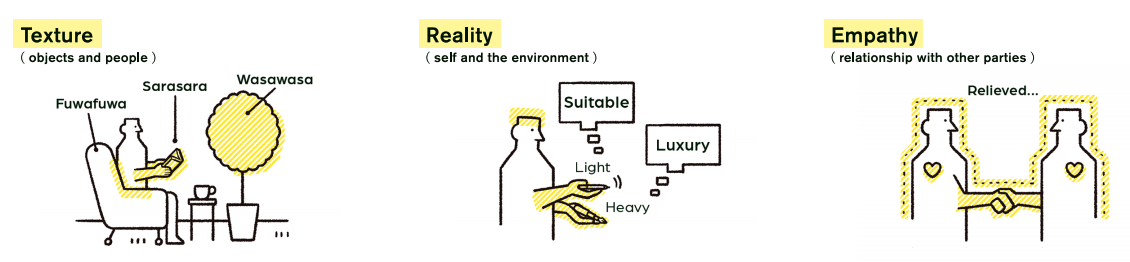

Texture (objects and people)

Texture is how objects or materials feel to the touch, and the sense of substance people feel from them. It’s constantly experienced in the products people interact with daily. We break down and categorize the textures into onomatopoeias like “fluffy” or “gooey,” and create a diagram similar to a color grid, treating them like color tones in design.

Reality (self and the environment)

When catching a basketball pass, your body instinctively moves before you think. The idea of the body remembering and recording the sensation as “reality” is easy to understand in sports like tennis, basketball and golf. In products and services that demand experiential design, the concept of reality as sensory information is becoming increasingly important.

Empathy (relationship with other parties)

Embracing someone you like brings peace of mind; giving a handshake instills trust. Beyond words, bodily contact and the sharing of tactile experiences are important for establishing relationships with others. The exhilaration of seeing entertainment live, like a concert or ballgame, is also due to empathy. While the field is still in its infancy, there are many possibilities for creating unique communication.

■Creating user communities

“Shockathon,” the largest haptics hackathon

A collaborative project from the embodied media consortium, held every summer over a 5-year period from 2014-2018. With many haptic researchers participating, about 60 engineers and creators gathered at every session to create works utilizing haptic technology. Started in Tokyo, now held all over the world.

HAPTIC DESIGN AWARD

A global award focusing on embodied/sensory/haptic design, the next step beyond visual/auditory media. Over 200 works have been submitted from over 20 countries, ranging from video, VR and products to workshops.

HAPTIC DESIGN AWARD WORKS

① 2016 GRAND PRIZE “Ridge User Interface” (Shigeya Yasui)

② 2016 FIRST PRIZE “Stacked Paper” (Minami Kawasaki)

③ 2017 GRAND PRIZE “The Third Thumb” (Dani Clode)

④ 2017 JUDGE’S SELECT “Voice of the Stone” (MATHRAX)

HAPTIC DESIGN Meetup

The HAPTIC DESIGN Meetup was held to create a community that nurtures Haptic Designer talent. The events discussed the potential of HAPTIC DESIGN, a topic that haptic research had not explored in the past.

Between November 2016 and March 2019 a total of 11 meetups were held.

With the theme of HAPTIC + ( ) Design, every session explored a collaboration with a different field.

1. Sound / 2. Body / 3. Entertainment / 4. Emotion / 5. Social / 6. Costume / 7. Kids / 8. Material / 9. Skill

Creating the Embodied Telexistence Platform

Realizing the expansion of human existence: The current state of Telexistence

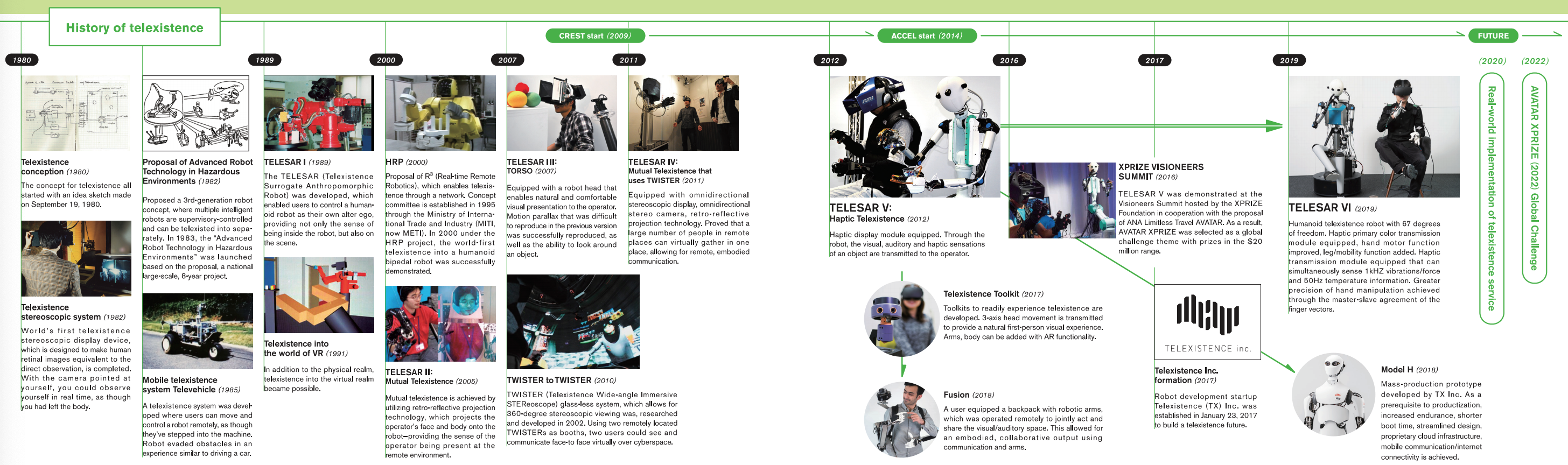

Telexistence is a comprehensive technology that expands human existence by using avatars around the world as alter egos, integrating technologies including virtual reality, haptics, robotics, AI, and networking. In January 2017, ACCEL’s R&D efforts led to the industrialization of the technology and the formation of the startup Telexistence (TX) Inc. The company aims to dramatically increase the productivity and accessibility of individuals, private companies and organizations, in order to continually benefit society. Here we look at telexistence’s 40-year history and project its next technological revolution.

Telexistence AVATAR selected as the next challenge at XPRIZE Visioneers Summit

The XPRIZE Foundation, founded by renowned entrepreneur and futurist Peter Diamandis, held the Visioneers Summit competition in October 2016. Answering the XPRIZE Avatar team’s request to “show the world’s most cutting-edge Avatar technology,” Susumu Tachi and the members of Tachi Laboratory demonstrated the TELESAR V. The next XPRIZE theme was to be selected from multiple candidates in this competition, and telexistence was chosen from among strong contenders. Telexistence research and development has taken a great leap forward with the start of the competition, which leads up to the final review of the AVATAR XPRIZE in 2022, where participants from all over the world will gather.

Founding of Telexistence (TX) Inc.

ACCEL’s other major accomplishment is the formation of Telexistence (TX) Inc., a robotics venture creating the future for telexistence. With Susumu Tachi as Chairman, Jin Tomioka as CEO, Charith Fernando as CTO, and Kouta Minamizawa as technical advisor, TX Inc. is moving forward with the design, manufacture and operation of avatar robots that support the implementation of telexistence. “From the very beginning, our strong desire was not just to do R&D, but to bring the robots into the world,” said Charith. “As menial tasks are increasingly taken on by robots, we hope it’ll create an environment for humans to focus on creativity.”

Creating an embodied telexistence platform that can mutually transmit people’s actions and perceptions

Nearly 40 years of telexistence R&D can be seen in the evolution of the TELESAR series, the embodied telexistence platform (chart below). Under ACCEL in particular, TELESAR VI was developed from the TELESAR V system, which was born out of CREST’s research. Compared with its predecessor, TELESAR VI realized full embodiment with 67 degrees of freedom by adding legs and improving hand motor functions, and expanded haptic information transmission by integrating haptic primary color modules. We also developed telexistence tool kits that make it easier to demonstrate and experiment with telexistence, further expanding its applications.

Virtual human teleportation industry’s rise to be on par with the current automotive industry

With the 5 years of ACCEL research, the AVATAR XPRIZE selection, and major corporations as well as startups entering the space, a “telexistence society” is not a distant reality.

In a telexistence society, avatars (surrogate bodies) are placed around the world for users to freely use from their homes, which frees them from the constraints of space and time. In addition, by freeing people’s mobility, it potentially contributes to more efficient energy consumption. A wide variety of day-to-day applications can be anticipated, including sanitation labor, construction, agriculture, inspection/repairs in hazardous factory environments, law enforcement, test piloting/driving, exploration, and leisure. With its potential use in search, rescue and recovery, telexistence serves a dual purpose, covering both everyday and emergency tasks. It’s a reality that’s right before us.

What is telexistence

“Telexistence” is defined as the expansion of human existence (virtually existing and freely acting in a space that’s different from the person’s current location) and the technological systems that make this possible. Through this technology, a person can exist as an alter-ego robot in a remote location, as a computer-generated avatar in a virtual space, or physically exist in a physical space through a virtual space. It’s a concept proposed in 1980 by ACCEL’s Research Director, Susumu Tachi, who has been a worldwide leader in this field for the past 40 years.

The five years of ACCEL have made it possible to upgrade telexistence robots as a culmination of past research, and have brought the technology’s implementation into the international spotlight in two ways: the AVATAR XPRIZE and the formation of Telexistence Inc. Currently, startups and major industries such as the automotive, airline, and telecommunication industries are participating, which contributes to the formation of a new industrial sector.

tele- or tel- = distance, distant

existence = The fact or state of existing; being

telexistence = tel- + existence; the (virtual) existence from a distance

Turning ideas into reality

Program Manager

JUNJI NOMURA

Associate Program Manager

KOUTA MINAMIZAWA

What was your initial aim with the ACCEL program?

Kouta Minamizawa: CREST’s (Core Research for Evolutional Science and Technology) Haptics Media was purely a university project, and had progressed to the point where practical application was in sight. But since its research was on the level of “something interesting at an academic conference,” ACCEL was created to be a 5-year sprint to turn that into reality, bridging the ideas into something industries can create. From the initial stages we had Mr. Nomura, who had industry VR experience, and Professor Susumu Tachi, one of the pioneers of VR who brought the concept to reality, join and work together, which was a giant boost to the ACCEL team.

Junji Nomura: Technology for us is having the academic achievements be the basis of industrial application; simply commercializing the technology wouldn’t get us there. “How should the technology be” is the fundamental driving principle for the university.

In the industrial world, taking 10 years from researching the technology to commercializing it is standard. At Panasonic, the business division turns around product development in 2–3 years, but the research division typically takes 5, even 10 years for basic research. The company researchers independently propose the fundamental research for technology looking 10 years ahead, which doesn’t always factor in logistics like staffing cost.

So in the industrial world research can progress independently, but we think there’s value in working with academic institutions to lay the foundation for the concepts, in order to bring the technology to life in products. And we think this approach can only be done in the framework of something like ACCEL.

Minamizawa: With universities continually shifting their approach, how do we closely align with businesses to bring something new to life? To that end Mr. Nomura’s contribution was significant, with his knowledge and know-how from the industry side. While personal drive can advance research on the academic side, there are limitations in grasping the practical needs to implement the technology in society at large. The people on the industry side who know the needs, in turn, need to know what the technology will look like in 5–10 years, otherwise the discussion ends with the currently viable technology. So we propose what that technology would be in 5–10 years, then use that as a basis for discussion. That molds the technological direction so that both sides can be aligned as one. For ACCEL’s project management, we put a lot of effort into creating that kind of environment.

Looking back, how did the collaboration actually turn out?

Minamizawa: Recently there’s a lot of talk about open innovation or co-creation, and with research divisions in the industrial sector there are likely many successful instances of involving universities. But looking at it from the university side, in most cases it’s the businesses that take the initiative to say “let’s work together.” One of our big missions this time was for the academic side to be proactive, to consider how we’ve previously worked together, and to create the framework to work with the industrial sector.

Nomura: With ACCEL’s framework, people on the industry and academic sides can continually be in discussion so that something crystallizes on the other end. That’s key. If the industrial sector is entrenched, then it can’t see beyond itself, and the planning becomes rigid. Starting with a clean slate and debating with the academic side gives a fresh perspective, which brings about new ideas. Ultimately I think it’s meaningful for the country as a whole.

Minamizawa: I definitely agree. With universities involved, valuable conversations can happen, where businesses can take a step back and look at the long-term perspective, relative to where they started. With ACCEL, that could be the ACCEL consortium, or the HAPTIC DESIGN PROJECT. When people from various genres come with different inspirations, a new field can be born. With researchers like us being there to look at the seeds of innovation that come out of it, we can then advance the science, technology, technological development and fundamental research. Taking people’s visions of the future and putting feasibility and evidence behind them allows industries and universities to move forward together.

Nomura: I think it’s the industrial sector’s role, productization included, to produce results that meet a standard. Since academic research is generalized and not something businesses monopolize, the question then is how do we meaningfully raise the bar? To be sure, there are positives and negatives in trying to set a standard by asking “how will this be useful in the world,” but the development has to be applicable in some way.

Minamizawa: In that sense, the driving force to say “let’s go in this direction” came from university researchers. The researchers each have a direction they want to go in, and the business people who are there consider the viable possibilities and how they’re applicable. When the directions of the business and research sides mesh well, they can move forward together. Fortunately I think that’s happening now.

The times I think, “this is going well,” are usually when the business side gives us the freedom to put forth the projects or businesses we have been envisioning. When the researchers simply cast the vision and the business side executes, it inevitably doesn’t work out. But with ACCEL, each person on the business side has within them the notion that “we’re creating this future together,” and our proposals are embodied in that sentiment.

What was a single person’s sentiment can turn into the sentiment of our society. We think it’s ACCEL’s purpose to be that bridge, and we want to nurture the seeds that have been planted to create a future together, with people across different disciplines and backgrounds.

Junji Nomura

Japan Science and Technology Agency (JST) ACCEL program manager. Graduated from Kyoto University Graduate School of Engineering in 1971. Started work at Matsushita Electric Industrial Co., Ltd. in 1971, obtaining a Doctorate of Engineering in 1988. Supervised the development of the System Kitchen VR System (VIVA), which enabled users to design their own system kitchen; installed in Matsushita Electric’s Shinjuku showroom. Served as senior managing director and corporate advisor to Panasonic Corp., as well as president of the IEC.

Kouta Minamizawa

Associate professor at Keio University Graduate School of Media Design (KMD), Japan Science and Technology Agency’s (JST) ACCEL assistant program manager. Completed his doctorate at The University of Tokyo’s Graduate School of Information Science and Technology.

Conducts R&D on, and implements, embodied media that use haptic technology to transmit, expand and create embodied experiences. Brought haptic design into the mainstream through the Haptic Design Project, driving the co-creation of new sports experiences.

ACCEL Program setting the trajectory for industry-academia co-creation

| Date | Milestone |
|---|---|
| 2015.4 | Opened Miraikan’s Cyber Living Lab DAIBA in Odaiba. |
| 2015-2018 | Launched “Shockathon,” the largest hackathon for haptics. |
| 2016.4 | Established the Embodied Media Consortium (50 companies participated as of 2019.9) |
| 2016.10 | Started HAPTIC DESIGN PROJECT to nurture haptic design talent |
| 2017.4 | Opened Living Lab SHIBUYA at Shibuya FabCafe MTRL |
| 2018-2019 | Drove IEC (International Electrotechnical Commission) standardization of haptic transmission protocols to implement haptic transmissions in the 5G era, centered around Japan’s industry-academic community. |

Examples of social implementation through industry-university co-creation

Miraikan Cyber Living Lab DAIBA

Living Lab SHIBUYA

Research Organization

| Organization | URL |
|---|---|
| Tachi Laboratory, Institute of Gerontology, The University of Tokyo | https://tachilab.org |
| Embodied Media Project, Keio University Graduate School of Media Design | http://embodiedmedia.org |
| Shinoda & Makino Lab, Graduate School of Frontier Sciences, The University of Tokyo | https://hapislab.org |
| Kajimoto Laboratory, The University of Electro-Communications | http://kaji-lab.jp |
| Sato Laboratory, Nara Women's University | http://katsunari.jp/satolab |
| ALPS ALPINE CO., LTD. | https://www.alpsalpine.com |
| NIPPON MEKTRON, LTD. | https://www.mektron.co.jp |

Research Period

2014.12.15〜2019.11.30 (5 years)

The ACCEL project started as a continuation of JST CREST’s research project on “Construction and Utilization of Human-harmonized Tangible Information Environment” (2009.10〜2015.3).

Paper / Patent

Haptic Primary Colors/Integrated Haptic Transmission Module

- Vibol Yem, Hiroyuki Kajimoto: “Comparative Evaluation of Tactile Sensation by Electrical and Mechanical Stimulation”, IEEE Transactions on Haptics, Vol. 10, Issue 1, pp.130-134, IEEE, 2017

- Vibol Yem, Hiroyuki Kajimoto: "Masking of Electrical Vibration Sensation Using Mechanical Vibration for Presentation of Pressure Sensation", IEEE World Haptics Conference 2017, IEEE, 2017

- Yuki Tajima, Yasuyuki Inoue, Fumihiro Kato, Susumu Tachi: "Tactile Sensation Reproducing Method of a Haptic Display that Presents Force, Vibration, and Temperature as Haptic Primary Colors", Transactions of the Virtual Reality Society of Japan, Vol.24, No.1, pp.125-135, 2019

- Patent application 2018-510679, WO2017/175867, TACTILE INFORMATION CONVERSION DEVICE, TACTILE INFORMATION CONVERSION METHOD, AND TACTILE INFORMATION CONVERSION PROGRAM, Susumu Tachi, Masashi Nakatani, Katsunari Sato, Kouta Minamizawa, Hiroyuki Kajimoto

- Patent application 2018-510680, WO2017/175868, TACTILE INFORMATION CONVERSION DEVICE, TACTILE INFORMATION CONVERSION METHOD, TACTILE INFORMATION CONVERSION PROGRAM, AND ELEMENT ARRANGEMENT STRUCTURE, Susumu Tachi, Masashi Nakatani, Katsunari Sato, Kouta Minamizawa, Hiroyuki Kajimoto

Wearable Haptic Interface/ Embodied Contents Platform

- Yukari Konishi, Nobuhisa Hanamitsu, Benjamin Outram, Youichi Kamiyama, Kouta Minamizawa, Ayahiko Sato, Tetsuya Mizuguchi: “Synesthesia Suit”, In Haptic Interaction. AsiaHaptics 2016. Lecture Notes in Electrical Engineering, Vol. 432, pp. 499-503, Springer, Singapore, 2016

- Akihito Noda and Hiroyuki Shinoda: “Frequency-Division-Multiplexed Signal and Power Transfer for Wearable Devices Networked via Conductive Embroideries on a Cloth”, In Proceedings of the 2017 IEEE MTT-S International Microwave Symposium, pp. 537-540, ACM, 2017

- Tomosuke Maeda, Keitaro Tsuchiya, Roshan Peiris, Yoshihiro Tanaka, Kouta Minamizawa: “HapticAid: Haptic Experiences System Using Mobile Platform”, In Proceedings of the 10th International Conference on Tangible, Embedded, and Embodied Interaction, pp. 397-402, ACM, 2017

- Roshan Lalintha Peiris, Wei Peng, Zikun Chen, Liwei Chan, and Kouta Minamizawa: “ThermoVR: Exploring Integrated Thermal Haptic Feedback with Head Mounted Displays”, In Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems, ACM, 2017

- Junichi Kanebako, Kazuya Yanagihara, Kazuya Ohara, Kouta Minamizawa: “Designing Tactile Sense - Haptic Design Project’s Approach”, Journal of the Japan Society for Simulation Technology, Vol.37, No.1, 2018

- Patent application 2017-555171, WO2017/099241, TACTILE PRESENTATION SYSTEM, TACTILE PRESENTATION METHOD, AND TACTILE PRESENTATION PROGRAM, Kouta Minamizawa, Yoshihiro Tanaka, Tomosuke Maeda, Masashi Nakatani, Roshan Peiris

Embodied Telexistence Platform

- Charith Lasantha Fernando, Mhd Yamen Saraiji, Kouta Minamizawa, Susumu Tachi: “Effectiveness of Spatial Coherent Remote Drive Experience with a Telexistence Backhoe for Construction Sites”, In Proceedings of the 25th International Conference on Artificial Reality and Telexistence (ICAT’15), Kyoto, Japan, 2015

- Susumu Tachi: “Telexistence: Enabling Humans to be Virtually Ubiquitous”, Computer Graphics and Applications, Vol. 36, No.1, pp.8-14, 2016

- Susumu Tachi: “Telexistence 20 Years After”, Journal of the Virtual Reality Society of Japan, Vol.21, No.3, pp.34-37, 2016

- Kyosuke Yamazaki, Yasuyuki Inoue, MHD Yamen Saraiji, Fumihiro Kato, Susumu Tachi: “The Influence of Coherent Visuo-Tactile Feedback on Self-location in Telexistence”, Transactions of the Virtual Reality Society of Japan, Vol.23, No.3, pp.119-127, 2018

- Susumu Tachi: “Recent Development of Telexistence”, Journal of the Robotics Society of Japan, Vol.36, No.10, pp.2-6, 2018

- Yasuyuki Inoue, MHD Yamen Saraiji, Fumihiro Kato, Susumu Tachi: “Expansion of Bodily Expression Capability in Telexistence Robot using VR”, Transactions of the Virtual Reality Society of Japan, Vol.24, No.1, pp.137-140, 2019

- Susumu Tachi: “Forty Years of Telexistence—From Concept to TELESAR VI,” In Proceedings of the International Conference on Artificial Reality and Telexistence and Eurographics Symposium on Virtual Environments (ICAT-EGVE 2019), pp 1-8, Tokyo, 2019

- Patent application 2018-172026, INFORMATION PROCESSING APPARATUS, ROBOT HAND CONTROL SYSTEM, AND ROBOT HAND CONTROL PROGRAM, Susumu Tachi, Yasuyuki Inoue, Fumihiro Kato

Events

| Date | Event |
|---|---|
| 2015.10.23 | 1st Symposium in DCEXPO 2015 "Future perspective on Embodied Media content" Susumu Tachi × Junji Nomura × Kouta Minamizawa |
| 2016.10.29 | 2nd Symposium in DCEXPO 2016 "Technology and the Human Body" Susumu Tachi × Toshiyuki Inoko × Kouta Minamizawa |
| 2016.11.19 | Haptic Design CAMP #1 "HAPTIC DESIGN and Communication/Education/Toy" Shinji Kawamura × Yukio Oya × Shinpei Takahashi × Junji Watanabe × Kouta Minamizawa |
| 2016.11.19 | Haptic Design CAMP #2 "HAPTIC DESIGN and Fashion/Space/Interior" Eiichi Izumi × Shun Horiki × Junji Watanabe × Kouta Minamizawa |
| 2017.6.21 | Haptic Design Meetup vol.1 Haptic × (Sound) Design Tatsuya Honda × Junichi Kanebako × Kouta Minamizawa |
| 2017.7.19 | Haptic Design Meetup vol.2 Haptic × (Body) Design Yoshihiro Tanaka × Natsuko Kurasawa × Minatsu Takekoshi × Kouta Minamizawa |
| 2017.9.4 | Haptic Design Meetup vol.3 Haptic × (Entertainment) Design Hiroyuki Kajimoto × Takashi Kawaguchi × Ryo Yokoyama × Kouta Minamizawa |
| 2017.10.4 | Haptic Design Meetup vol.4 Haptic × (Emotion) Design Masashi Nakatani × TELYUKA (Teruyuki Ishikawa & Yuka Ishikawa) × Kouta Minamizawa |
| 2017.10.29 | 3rd Symposium in DCEXPO 2017 "XPRIZE Challenges: Making the Impossible Possible" Susumu Tachi × Takeshi Hakamada × Kouta Minamizawa |
| 2017.11.4 | Haptic Design Meetup vol.5 Haptic × (Social) Design Junji Watanabe × Eisuke Tachikawa × Kouta Minamizawa |
| 2017.12.1 | Haptic Design Meetup vol.6 Haptic × (Costume) Design Ichiro Amimori × Tamae Hirokawa × Kazuya Ohara × Junichi Kanebako |
| 2018.2.21 | HAPTIC DESIGN AWARD 2017 Award Ceremony, HAPTIC DESIGN MEETUP SPECIAL |
| 2018.2.27〜2018.3.31 | Research Complex NTT R&D @ICC × HAPTIC DESIGN PROJECT Towards Design of Touch: Starting from Zero Distance |
| 2018.9.7 | Haptic Design Meetup vol.7 Haptic × (Kids) Design Akihiro Tanaka × Takashi Usui × Kazuya Ohara × Kouta Minamizawa |
| 2018.11.6 | Haptic Design Meetup vol.8 Haptic × (Material) Design Masaharu Ono × Masashi Kamijo × Kazuya Ohara × Kouta Minamizawa |
| 2018.11.15 | 4th Open Symposium in DCEXPO 2018 "Telexistence Now Space - Challenging time instantaneous movement industry and Telexistence Society-" Susumu Tachi × Telexistence Inc. Model H |
| 2018.12.21 | Haptic Design Meetup vol.9 Haptic × (Skill) Design Kohei Matsui × Kazuto Yoshida × Kazuya Ohara × Kouta Minamizawa |
| 2019.11.26 | JST ACCEL Embodied Media Project SYMPOSIUM & EXHIBITION |

Researchers

| Affiliation | Researchers |
|---|---|
| The University of Tokyo | Susumu Tachi, Hiroyuki Shinoda, Yasuyuki Inoue, Fumihiro Kato, Yasutoshi Makino, Keisuke Hasegawa, Akihito Noda, Masashi Nakatani, Yuki Tajima, Kyosuke Yamazaki, Naka Ikumi, Fu Junkai, Rumiko Aoyama, Naoko Osada, Haruna Kozakai, Minori Oi |
| Keio University | Kouta Minamizawa, Charith Fernando, Roshan Peiris, Liwei Chan, MHD Yamen Saraiji, Youichi Kamiyama, Junichi Kanebako, Emily Kojima, Kazuya Ohara, Kazuya Yanagihara, Tadatoshi Kurogi, Mina Shibasaki, Nobuhisa Hanamitsu, Mizushina Yusuke, Hirohiko Hayakawa, Marie-Stephanie Soutre, Hirokazu Tanaka, Tomosuke Maeda, Yukari Konishi, Satoshi Matsuzono, Reiko Shimizu, Aria Shinbo, Chen Zikun, Feng Yuan Ling, Amica Hirayama, Tanner Person, Haruki Nakamura, Kaito Hatakeyama, Takaki Murakami, Keitaro Tsuchiya, Taichi Furukawa, Junichi Nabeshima |
| The University of Electro-Communications | Hiroyuki Kajimoto, Yem Vibol, Takuto Nakamura, Ryuta Okazaki, Takahiro Shitara, Kenta Tanabe |
| Nara Women's University | Katsunari Sato, Mai Shibahara, Moeko Kita, Ai Hattori |
| ALPS ELECTRIC CO., LTD. | Daisuke Takai, Yasuji Hagiwara, Kazuya Inagaki, Ikuo Sato, Jo Kawana, Futo Heishiro |
| NIPPON MEKTRON, LTD. | Keizo Toyama, Hidekazu Yoshihara, Kiyoshi Igarashi, Taisuke Kimura, Akio Yoshida |

Embodied Media Consortium Participating Companies

Lenovo Japan Corporation / Tec Gihan Co.,Ltd. / Technology Joint Corporation / JGC Corporation / Recruit Career Co., Ltd. / Tianma Japan, Ltd. / MASAMI DESIGN co., ltd / NVIDIA Corporation / Mercedes-Benz Japan Co., Ltd. / Shiseido Company, Limited / ASK Corporation / Solidray Co.,Ltd. / Ducklings inc. / Sony Corporation / 1-10,Inc. / ismap LLC / Dentsu ScienceJam Inc. / NISSHO CORPORATION / General Incorporated Association T.M.C.N / kuraray trading Co.,Ltd. Clarino Division / kyokko electric co. ltd / NIPPON MEKTRON, LTD. / spicebox, inc. / FIELDS CORPORATION / ALPS ELECTRIC CO., LTD. / NTT Communications Corporation / Fuji Xerox Co., Ltd. Key Technology Laboratory / Fujitsu Limited / Foster Electric Company, Limited / Toppan Printing Co., Ltd / P.I.C.S. Co., Ltd. / CRI Middleware Co., Ltd. / NHK ENTERPRISES, INC. / DENTSU TEC INC. / FUJIFILM Holdings Corporation / Miletos inc. / AOI Pro. Inc. / OKAMURA CORPORATION / Dverse Inc. / Teijin Limited / TELEXISTENCE Inc. / Ricoh Company, Ltd. / Yamaha Corporation / Toyoda Gosei Co., Ltd. / Nintendo Co., Ltd. / SMK Corporation Touch Panel Division / Mizuno Corporation Global footwear product division / Taica Corporation / EXRinc. (In order of membership, as of 2019.11)

FACTBOOK Editorial Team

Kazuya Ohara (Loftwork Inc., Researcher at Keio University Graduate School of Media Design)

Kazuya Yanagihara (Loftwork Inc., Researcher at Keio University Graduate School of Media Design)

Mami Jinno (Director)

Chika Goto

Aki Sugawara (Translator)

Design Atsushi Honda (sekilala)

Cover Mutsumi Kawazoe (StudioSnug illustration)

Publisher Japan Science and Technology Agency FY2014 Selected Research Project JST ACCEL Embodied Media Technology based on Haptic Primary Colors

Date of issue 2019.11.26