The mobile mutual telexistence system, TELESAR IV, which is equipped with master-slave manipulation capability and an immersive omnidirectional autostereoscopic 3D display with a 360° field of view known as TWISTER, was developed in 2010. It projects the remote participant’s image onto the robot using RPT. Face-to-face communication was also confirmed, as local participants at the event were able to see the remote participant’s face and expressions in real time. It was further confirmed that the system allowed the remote participant not only to move freely about the venue by means of the surrogate robot but also to perform some manipulation tasks such as a handshake and several gestures.

Details:

Mobile Mutual Telexistence Communication System: TELESAR IV

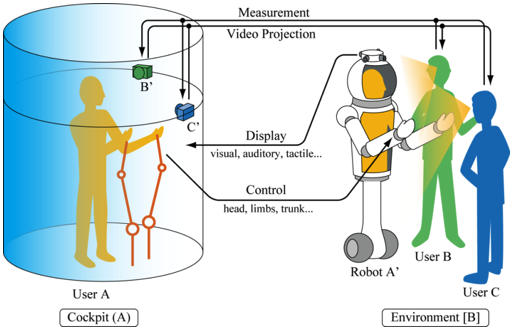

Figure 1 shows a conceptual sketch of mobile mutual telexistence using TWISTER and a surrogate robot. User A can observe remote environment [B] using an omnistereo camera mounted on surrogate robot A'. This provides user A with a panoramic stereo view of the remote environment displayed inside the TWISTER booth. User A controls robot A' by using the telexistence master-slave control method. Cameras B' and C' mounted on the booth are controlled by the position and orientation of users B and C relative to robot A', respectively. Users B and C can observe different images of user A projected on robot A' by wearing their own Head Mounted Projector (HMP) to provide the correct perspective. Since robot A' is covered with retroreflective material, it is possible to project images from both cameras B' and C' onto the same robot while having both images viewed separately by users B and C.

Fig. 1. Conceptual sketch of mobile mutual telexistence system using TWISTER as a cockpit.

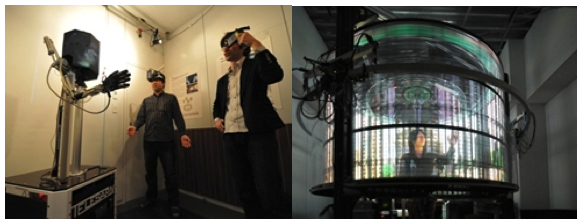

The mobile mutual telexistence system, TELESAR IV, which is equipped with master-slave manipulation capability and an immersive omnidirectional autostereoscopic three-dimensional display with a 360° field of view known as TWISTER, was developed in 2010. It projects the remote participant’s image onto the robot using RPT. Face-to-face communication was also confirmed, as local participants at the event were able to see the remote participant’s face and expressions in real time. It was further confirmed that the system allowed the remote participant to not only move freely about the venue by means of the surrogate robot but also perform some manipulation tasks such as a handshake and other gestures. Figure 2 shows a general view of the system.

Fig. 2. General view of TELESAR IV system.

The TELESAR IV system consists of a telexistence remote cockpit system, telexistence surrogate robot system, and RPT viewer system. The telexistence remote cockpit system consists of the immersive 360° full-color autostereoscopic display known as TWISTER, a rail camera system installed outside TWISTER, an omnidirectional speaker system, an OptiTrack motion capture system, a 5DT Data Glove 5 Ultra, a joystick, and a microphone.

The telexistence surrogate robot system consists of an omnidirectionally mobile system, the 360° stereo camera system known as VORTEX, a retroreflective screen, an omnidirectional microphone system, a robot arm with a hand, and a speaker. The RPT viewer system consists of a handheld RPT projector, head-mounted RPT projector, and OptiTrack position and posture measurement system.

Stereo images captured by the 360° stereo camera system VORTEX are fed to a computer (PC1) mounted on the mobile robot. The images are compensated and combined in real time to transform them into 360° images for TWISTER. The processed images are sent to TWISTER, and unprocessed sound from the omnidirectional microphone system is sent directly to the corresponding speaker system in TWISTER.

Cameras are controlled to move along the circular rail and to imitate the relative positions of the surrogate robot and the local participants at the venue. The images of the remote participant taken by these cameras outside TWISTER are fed to a computer (PC3) and sent to another computer (PC2) mounted on the mobile surrogate robot. These images are sent to the handheld and head-mounted projectors once they are adjusted using the posture information acquired by the position and posture measurement system (OptiTrack), which consists of seven infrared cameras installed on the ceiling of the venue. These processed images are in turn projected onto the retroreflective screen atop the mobile robot. The voice of the remote participant is also sent directly to the speaker of the mobile surrogate robot.
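The rail-camera geometry described above can be illustrated with a minimal sketch. It assumes planar poses from the venue tracking system, a robot heading measured counterclockwise from the world x-axis, and a rail angle of zero meaning directly in front of the remote participant. The function names, frame conventions, and rail radius are hypothetical and are not taken from the TELESAR IV implementation.

```python
import math


def relative_bearing(robot_xy, robot_heading, viewer_xy):
    """Bearing of a local participant as seen from the robot, in radians.

    0 means the viewer stands directly in front of the robot; positive
    values are to the robot's left, negative values to its right.
    (Assumed convention, for illustration only.)
    """
    dx = viewer_xy[0] - robot_xy[0]
    dy = viewer_xy[1] - robot_xy[1]
    world_bearing = math.atan2(dy, dx)
    relative = world_bearing - robot_heading
    # Wrap the difference into the range [-pi, pi].
    return math.atan2(math.sin(relative), math.cos(relative))


def rail_camera_position(angle, rail_radius):
    """Point on a circular rail centred on the remote participant.

    Placing the camera at the same bearing as the viewer means the camera
    sees the participant from the direction the viewer sees the robot.
    """
    return (rail_radius * math.cos(angle), rail_radius * math.sin(angle))


# A viewer standing to the right of a robot that faces along +x.
angle = relative_bearing((0.0, 0.0), 0.0, (0.0, -1.0))
camera_xy = rail_camera_position(angle, rail_radius=1.0)
```

Under these assumptions, a viewer on the robot's right yields a camera on the participant's right, which corresponds to the right-profile view described for the demonstration.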

The joystick is located inside TWISTER and is controlled by the left hand of the remote participant. The data of the joystick are fed to PC3 and sent to PC1 via the network. Based on the information received, PC1 generates and executes a motion instruction to have the mobile surrogate robot move in any direction or turn freely on the spot; i.e., omnidirectional locomotion is possible. Using the motion capture system (OptiTrack) installed on the ceiling of TWISTER, the position and posture of the right arm of the remote participant are obtained. The position and posture data are also fed to PC3 and sent to PC2 via the network. Based on the received data, PC2 controls the robot arm to imitate the arm motion of the remote participant. In addition, hand motion data acquired by the 5DT Data Glove 5 Ultra are sent to the hand of the surrogate robot to imitate the hand motion of the remote participant.
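As a rough illustration of the joystick path, the sketch below maps normalized joystick axes to a body-frame velocity command of the kind PC1 might turn into a motion instruction. The three-axis joystick layout, the deadzone, the speed limits, and all names are assumptions made for this example; the source does not specify them.

```python
from dataclasses import dataclass


@dataclass
class BodyVelocity:
    forward: float  # m/s along the robot's forward axis
    lateral: float  # m/s along the robot's sideways axis
    turn: float     # rad/s about the vertical axis


def apply_deadzone(value, deadzone):
    """Clamp to [-1, 1], zero out small inputs, rescale the rest to [-1, 1]."""
    value = max(-1.0, min(1.0, value))
    if abs(value) < deadzone:
        return 0.0
    sign = 1.0 if value > 0 else -1.0
    return sign * (abs(value) - deadzone) / (1.0 - deadzone)


def joystick_to_velocity(x, y, twist,
                         max_linear=0.5, max_angular=1.0, deadzone=0.1):
    """Convert joystick axes in [-1, 1] to an omnidirectional command.

    Translation and rotation are commanded independently, so the robot
    can move in any direction or turn on the spot.
    """
    return BodyVelocity(
        forward=max_linear * apply_deadzone(y, deadzone),
        lateral=max_linear * apply_deadzone(x, deadzone),
        turn=max_angular * apply_deadzone(twist, deadzone),
    )


# Full forward deflection with no sideways motion or rotation.
command = joystick_to_velocity(0.0, 1.0, 0.0)
```

Keeping the command in the robot's body frame leaves the conversion to individual wheel speeds to the mobile base, whose drive mechanism is not described here.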

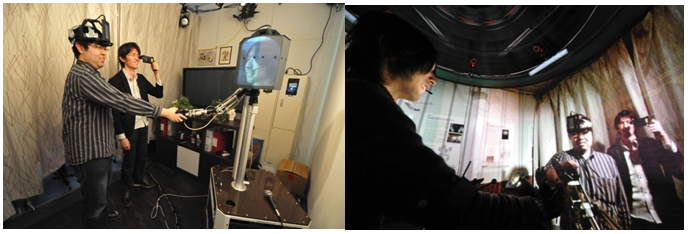

A remote participant inside TWISTER joined a gathering by means of his surrogate robot and moved freely in a room with two local participants, who were able not only to see his face in real time through handheld and head-mounted RPT projectors but also to communicate with him naturally. The local participant in front of the robot saw the full face of the remote participant, while the local participant on the right side of the robot saw his right profile at the same time. The remote participant was able not only to see and hear the environment as though he were there but also to communicate face-to-face with each local participant. Moreover, he was able to shake hands and express his feelings with gestures, as shown in Fig. 3. The total latency of the visual-auditory system was less than 100 ms, including data acquisition, transmission, and rendering. The total control cycle time of the robot was around 60 ms, including measurement of the human operator, transmission, and control of the robot’s arm and hand.

Fig. 3. Demonstration of TELESAR IV system at work.

Susumu Tachi, Kouichi Watanabe, Keisuke Takeshita, Kouta Minamizawa, Takumi Yoshida and Katsunari Sato: Mutual Telexistence Surrogate System: TELESAR4 - telexistence in real environments using autostereoscopic immersive display -, Proceedings of IEEE/RSJ International Conference on Intelligent Robots and Systems, pp. 157-162, San Francisco, USA (2011.9)