Since 2016, often called the first year of the VR revival, there have been signs of a second VR boom. Goldman Sachs and others have predicted that VR will rival television as an industry in the 2020s, and we are now in the midst of that trend.

In 2012, four years before 2016, Susumu Tachi, Professor Emeritus of the University of Tokyo, gave a historical review of 3D and VR in his keynote speech at the Virtual Reality Society of Japan and identified a 30-year cycle in 3D and VR technologies. On that basis, he predicted that VR would rise again in the 2020s.

Susumu Tachi: Returning to the Origin - Looking to the Future of Virtual Reality and Telexistence, Journal of the Virtual Reality Society of Japan, Vol. 17, No. 4, pp.6-17 (2012.12) [in Japanese] [PDF]

If you go back and look at the history of 3D, you will see that there has been a 3D boom every 30 years, and that each 3D boom has been followed by a VR boom. 3D first appeared in the 19th century, but it took until the 1920s for 3D to reach its dawn. The first 3D boom came in the 1950s, when the name "3D" became established. Then came the second 3D boom in the 1980s and the third in the 2010s. The dawn of VR was in the 1960s, 10 years after the first 3D boom, and the first VR boom was in the 1990s, 10 years after the second 3D boom, indicating that a VR boom arrives almost 10 years after a 3D boom. From this, we can expect that the next VR boom will be in the 2020s (Tachi Conjecture). Translated from Japanese.

He made the same conjecture in English in his ICAT keynote speech below.

Susumu Tachi: From 3D to VR and further to Telexistence, Proceedings of the 23rd International Conference on Artificial Reality and Telexistence (ICAT), Tokyo, Japan, pp.1-10 (2013.12) [PDF]

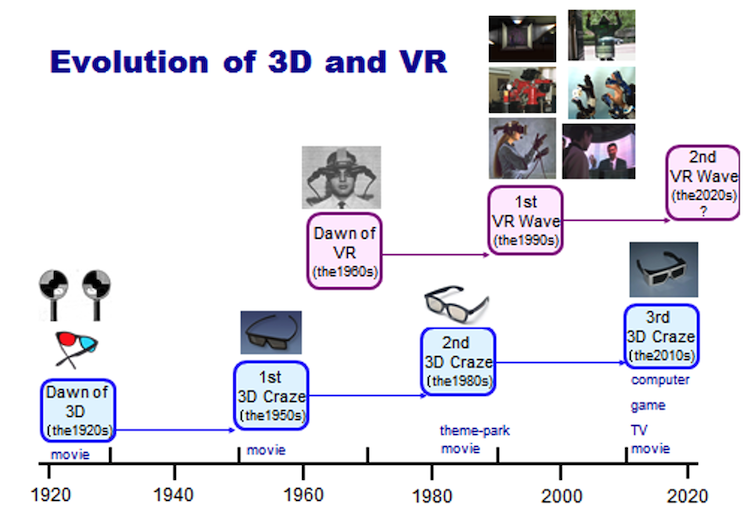

3D and virtual reality (VR) are two closely related areas of science and technology. As shown in Figure 1, an examination of the history of 3D reveals that 3D crazes have occurred every thirty years. It is also apparent that a VR craze has emerged after each 3D craze. Although historically 3D first appeared in the 19th century, the true dawn of the technology did not arrive until the 1920s. After this early stage, the first 3D craze came in the 1950s, when the term “3D” was first used and the term “3D craze” became widespread. Subsequently, there was a second 3D craze in the 1980s, followed by a third in the 2010s. The dawn of VR came in the 1960s, 10 years after the first 3D craze. The first VR craze was observed in the 1990s, 10 years after the second 3D craze. Since this trend shows VR crazes occurring approximately 10 years after 3D crazes, the author expects a second VR craze to occur in the 2020s (Tachi conjecture).

Figure 1: Evolution of 3D and VR.

As he predicted in 2012, we are now entering the second rise of VR. A new trend is also on the horizon: telexistence (avatars).

The concept of telexistence was conceived by Susumu Tachi on September 19, 1980, and the first device was fabricated and published in 1982. More than 40 years have passed since then, the technology has matured, and telexistence is about to enter its first phase of prosperity. In the 2020s, the global AVATAR XPRIZE competition has been held, avatars were adopted as the first goal of Japan's Moonshot project, and many startups targeting avatars (telexistence) have been founded. It can be said that progress is moving toward a telexistence society.

Tachi's conjecture on telexistence or avatars can be found in the following prospective paper.

Susumu Tachi: Telexistence and Virtual Teleportation Industry, Journal of Society of Automotive Engineers of Japan, vol.37, no.12, pp.17-23 (2019.12) [in Japanese][PDF]

In the article, Tachi predicts that telexistence (avatars) will have its first rise in the 2020s, followed by a second rise in the 2050s, at which time telexistence will permeate and take root in society. The following is a portion of that prediction, translated from Japanese.

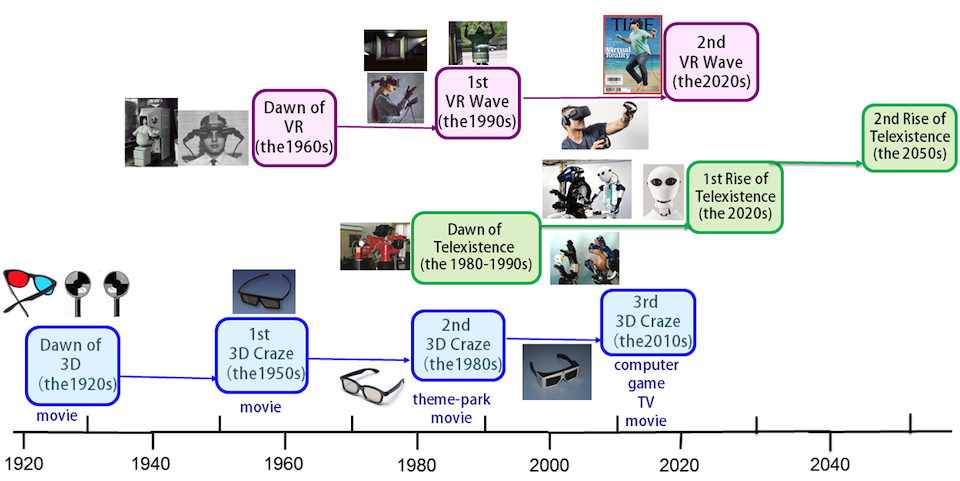

Figure 8 shows an overview of the 30-year cycles of 3D, VR, and telexistence. The dawn period is the period that turns out, when the history is traced back, to be the origin, i.e., the period when those involved know about the technology but it is not yet generally known.

Fig. 8 History of the rise of 3D, VR, and telexistence and future predictions.

The 30 years from the 1980s to the 2000s can be called the dawn of telexistence. While it has been recognized in the academic world and project research has progressed, it has yet to be implemented in society and cause a boom.

Currently, the world is moving towards telexistence. In the 2020s, this technology will enable people to move freely and instantaneously regardless of various limitations such as environment, distance, age, and physical ability. In addition to remote employment and leisure, avatars can be used in areas where doctors, teachers, and skilled technicians are in short supply, and at disaster sites where human access is difficult, thereby contributing to both the resolution of social issues and economic development. Furthermore, based on the 30-year cycle forecast, it is expected that a major step-up will occur again in the 2050s, and society will undergo major changes.

The following is a web version of the ICAT paper, which can be regarded as the English-language origin of these predictions.

Susumu Tachi: From 3D to VR and further to Telexistence, Proceedings of the 23rd International Conference on Artificial Reality and Telexistence (ICAT), Tokyo, Japan, pp.1-10 (2013.12) [PDF]

From 3D to VR and further to Telexistence

Susumu Tachi

Keio University / The University of Tokyo

Abstract

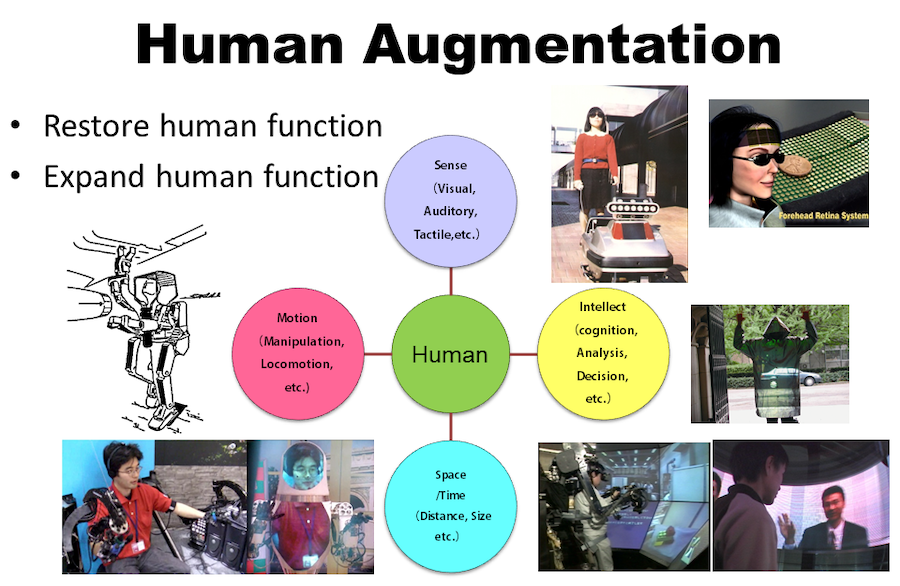

The history of 3D shows that it has a thirty-year cycle of evolution. Further, it was discovered that VR (virtual reality) and AR (augmented reality) evolved almost a decade after the 3D crazes occurred. This leads to the conjecture that the next VR and AR craze will be in the 2020s. AR augments the senses and intellect of a human. Human augmentation or augmented human (AH) augments humans not only in their senses and intellect but also in their motions and abilities to transcend time and space. Human augmentation in time and space is termed telexistence. In addition, the evolution of telexistence is reviewed along with a glance at its future prospects.

Keywords: 3D, virtual reality, VR, augmented reality, AR, human augmentation, augmented human, AH, cyborg, telexistence

Index Terms: A.1 [INTRODUCTORY AND SURVEY]; I.2.9 [Robotics]: Operator interfaces; I.3.7 [Three-Dimensional Graphics and Realism]: Virtual reality; H.4.3 [Communications Applications]: Computer conferencing, teleconferencing, and videoconferencing

| INTRODUCTION | 3D MOVES IN 30-YEAR CYCLES | VR MOVES 10 YEARS AFTER 3D MOVES |

| FROM AUGMENTED REALITY TO HUMAN AUGMENTATION | TELEXISTENCE | CONCLUSION | REFERENCES |

1 INTRODUCTION

Virtual reality (VR) captures the essence of reality; it represents reality very effectively. VR enables humans to experience events and participate in a computer-synthesized environment as though they are physically present in that environment. On the other hand, augmented reality (AR) seamlessly integrates virtual environments into a real environment to enhance the real world.

AR achieves human augmentation by enhancing the senses and intellect of humans. Augmented human (AH) augments humans in their motions and their ability to transcend time and space besides enhancing their senses and intellect. Cyborgs and telexistence would mark the peak of AH development.

Telexistence is a concept that can free humans from the restrictions of time and space by allowing humans to exist virtually in remote locations without travel. Besides, it can enable interactions with remote environments that are real, computer-synthesized, or a combination of both.

This keynote reviews the past and present of 3D, VR, AR, AH, cyborgs, and telexistence to study their mutual relations and the future of these technologies. Further, it introduces recent advancements in and future prospects of telexistence. Special emphasis is given to TELESAR IV, a mutual telexistence system; TELESAR V, known for achieving haptic telexistence; and TWISTER, a telexistence wide-angle immersive stereoscope that provides a full-color autostereoscopic display with a 360-degree field of view.


2 3D MOVES IN 30-YEAR CYCLES

3D and virtual reality (VR) are two closely related areas of science and technology. As shown in Figure 1, an examination of the history of 3D reveals that 3D crazes have occurred every thirty years. It is also apparent that a VR craze has emerged after each 3D craze. Although historically 3D first appeared in the 19th century, the true dawn of the technology did not arrive until the 1920s. After this early stage, the first 3D craze came in the 1950s, when the term “3D” was first used and the term “3D craze” became widespread. Subsequently, there was a second 3D craze in the 1980s, followed by a third in the 2010s. The dawn of VR came in the 1960s, 10 years after the first 3D craze. The first VR craze was observed in the 1990s, 10 years after the second 3D craze. Since this trend shows VR crazes occurring approximately 10 years after 3D crazes, the author expects a second VR craze to occur in the 2020s (Tachi conjecture).

Figure 1: Evolution of 3D and VR.
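The arithmetic behind the conjecture can be sketched in a few lines of Python. This is only an illustration: the 30-year period and the 10-year lag come from the text, while the names `CYCLE_YEARS`, `VR_LAG_YEARS`, and `decades` are invented for this sketch, and each decade is represented by its first year.

```python
CYCLE_YEARS = 30   # spacing between successive 3D crazes, as stated in the text
VR_LAG_YEARS = 10  # VR follows 3D by roughly a decade, as stated in the text


def decades(start: int, count: int, step: int = CYCLE_YEARS) -> list[int]:
    """Return `count` decades spaced `step` years apart, beginning at `start`."""
    return [start + i * step for i in range(count)]


# 3D: dawn in the 1920s, then crazes in the 1950s, 1980s, and 2010s.
three_d = decades(1920, 4)

# VR: each 3D craze (not the dawn) is followed about 10 years later by a VR event:
# the dawn of VR, the first VR craze, and the conjectured second VR craze.
vr = [decade + VR_LAG_YEARS for decade in three_d[1:]]

print("3D:", three_d)
print("VR:", vr)
```

The last entry of `vr` corresponds to the conjectured second VR craze in the 2020s.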

2.1 Origin of 3D

3D began in 1838 with the invention of binocular stereo in the UK [1]. It was the brainchild of Sir Charles Wheatstone, known for the Wheatstone bridge used in the field of measurement. Binocular stereo was the first stereo viewer technology, presented to the Royal Society of London in the form of a mirror stereoscope. It was refined in 1849 by the renowned Scottish physicist Sir David Brewster, who replaced the mirrors with prisms. Oliver Wendell Holmes in the US further developed Brewster’s design into a practical product that enjoyed worldwide popularity. However, even Holmes’s product only showed pictures or photos in three dimensions when the user looked through an apparatus resembling binoculars. It could not show movement, and the observed 3D images were quite small; therefore, they looked like miniatures.

2.2 Lead-up to the Dawn of 3D

In 1853, a method for generating 3D images called “anaglyph” was created [2]. This method applied red and blue filters to the left and the right eyes, respectively, and showed each eye a red or blue image having parallax. Subsequently, the filter colors were changed to red and green (a better match for the physiological structure of the human retina than red and blue).
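The red/blue variant described above can be illustrated with a minimal sketch. It assumes an additive color display (so a red filter passes only the red channel) and grayscale left and right views stored as nested lists; the function name `compose_anaglyph` is hypothetical and chosen only for this example.

```python
def compose_anaglyph(left_gray, right_gray):
    """Combine two grayscale views into one RGB image for red/blue glasses.

    The left view goes into the red channel and the right view into the blue
    channel, so a red filter over the left eye and a blue filter over the
    right eye each pass only the view intended for that eye.
    """
    same_shape = len(left_gray) == len(right_gray) and all(
        len(l_row) == len(r_row) for l_row, r_row in zip(left_gray, right_gray)
    )
    if not same_shape:
        raise ValueError("left and right views must have the same dimensions")
    return [
        [(l, 0, r) for l, r in zip(l_row, r_row)]
        for l_row, r_row in zip(left_gray, right_gray)
    ]


# Two tiny 1x2 views with slightly different content, standing in for parallax.
anaglyph = compose_anaglyph([[10, 20]], [[30, 40]])
print(anaglyph)
```

For the later red/green variant, the right view would go into the green channel instead of the blue one.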

Magic lantern slideshows first appeared in Germany in 1858. These slideshows enlarged the screen but still had no movement. Images first started to move with the 1895 invention of what is considered the first moving picture technology, the cinématographe Lumière. Although Thomas Edison had actually invented and demonstrated in public a moving picture technology called the "kinetoscope" in 1891, it was still a technology that required the user to look through a peephole. Inspired by Edison, the Lumière brothers created a technology that replaced the peephole with a large image projected onto a screen that could be seen by multiple viewers at once—the cinématographe Lumière.

Although the cinématographe Lumière itself was not 3D, it was combined with anaglyph to become 3D, leading to the dawn of 3D in the 1920s.

2.3 Dawn of 3D

Practical anaglyph-format 3D movies first appeared in the 1920s. Although the format enabled 3D projection, there was a high demand for a technology that would show 3D images in natural color instead of the anaglyph’s red and green.

The technology created to meet this demand was time-division 3D movies. Lawrence Hammond (of Hammond Organ fame) created 3D images by rapidly switching between the right and the left eyes using a mechanical shutter. Hammond invented a system that used a disc-shaped shutter that spun to rapidly switch the light reaching the right and left eyes. At the same time, a projector was synchronized to the shutter disc and switched the right and left eye images, projecting different images to the right and left eyes and making the image appear 3D. This technology is a very well-conceived 3D system even by today’s standards. Although it amazed large numbers of viewers, unfortunately, it soon fell into disuse because of its poor cost effectiveness [3].
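The time-division principle can be sketched as follows. This is a simplified model rather than a description of Hammond's actual mechanism: frames are plain labels, the shutter state is the string "L" or "R", and the function names are hypothetical.

```python
def time_division_schedule(left_frames, right_frames):
    """Interleave left and right frames; the shutter opens only the matching eye."""
    schedule = []
    for left, right in zip(left_frames, right_frames):
        schedule.append(("L", left))   # shutter open for the left eye only
        schedule.append(("R", right))  # shutter open for the right eye only
    return schedule


def seen_by(eye, schedule):
    """Frames that reach one eye, given the synchronized shutter."""
    return [frame for open_eye, frame in schedule if open_eye == eye]


schedule = time_division_schedule(["l0", "l1"], ["r0", "r1"])
print(seen_by("L", schedule))
print(seen_by("R", schedule))
```

Because the projector alternates between the two views, each eye receives frames at half the projection rate, which is why the switching has to be rapid.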

2.4 First 3D Craze

The Polaroid format (a format based on polarized light) came into widespread use in the 1950s, giving rise to a 3D movie craze that lasted from 1952 to 1955. Polarized stereoscopic pictures themselves had first been demonstrated in 1936 by Edwin H. Land. Linear polarization was used as the polarization format. The term “3D” is said to have been coined at this time. The advances that enabled this 3D technology were polarized stereoscopic glasses and a silver screen that could preserve polarization. However, the 3D craze did not last long: despite reaching a fever pitch at one point, it was over in two or three years. Even so, the associated technology has developed by leaps and bounds with each new craze, and the field of 3D technologies has grown steadily since then.

2.5 Second 3D Craze

Thirty years after the 1950s, the 1980s saw a second 3D craze. Instead of linear polarization, the method used was circular polarization, which enabled the viewer to retain the 3D effect even if the head was tilted. This method made 3D images very easy to view, as there was no restriction on head movement; thus, the method was widely used for projecting movies and videos at events such as expositions.
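Why head tilt matters for linear but not for circular polarization can be seen from Malus's law. The following is an idealized sketch, assuming perfect polarizers and glasses rotated by an angle $\varphi$ about the viewing axis; the symbols $I_0$, $I_{\text{intended}}$, and $I_{\text{ghost}}$ are introduced here for illustration and do not appear in the original paper.

$$
I_{\text{intended}} = I_0 \cos^2\varphi, \qquad I_{\text{ghost}} = I_0 \sin^2\varphi
$$

With linear polarization, an upright head ($\varphi = 0$) gives no crosstalk, but at $\varphi = 45^\circ$ each eye receives equal parts of both images and the 3D effect is lost. With ideal circular polarization, the handedness of the light does not change under rotation about the viewing axis, so under these assumptions $I_{\text{ghost}} = 0$ for every $\varphi$.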

2.6 Third 3D Craze

The third 3D craze comprises 3D movies now complemented by 3D TV, which got fully underway in 2010. This craze appears to have died down fairly rapidly, as is the fate of 3D. However, today’s 3D display technology is expected to continue spreading to various areas, and it is positioned as a major step toward the second VR craze that should follow the current 3D craze.

2.7 Reason for the Short Lifespan of 3D Crazes

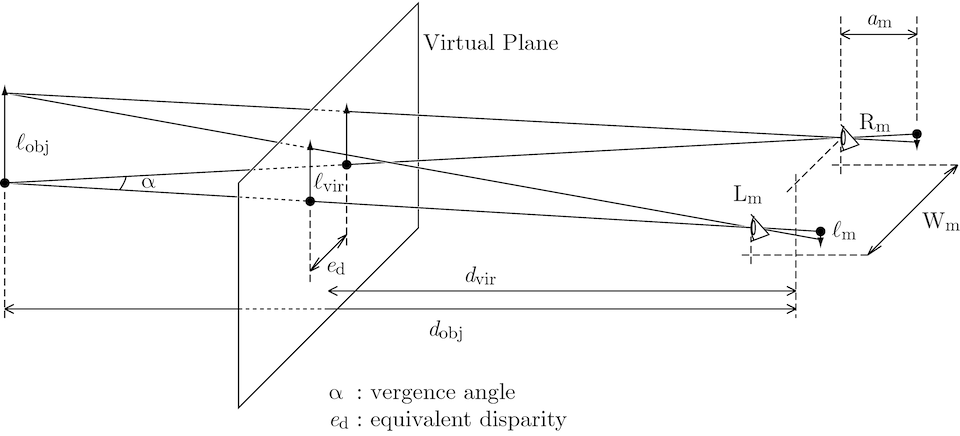

Why are 3D crazes always so short-lived? The reason is that, since 3D technology aims to reproduce the visual experience of the real world, an ideal 3D technology cannot be achieved without perfecting VR. Figure 2 illustrates why this is true. In two dimensions, the size of an object and the distance to it cannot be determined uniquely; the viewer is free to estimate them. Therefore, when viewing a 2D photo or movie, the viewer estimates an object’s size and distance from the size of a known object, using past experience. Thus, human figures are estimated to be of human size, however small the 2D image is.

As soon as a photo or movie is created in 3D, both the size of an object and the distance to it become uniquely determined.

Figure 2: Both the size of an object and the distance to it become uniquely determined in 3D.

When an entire scene containing a person, shot with a stereo camera, is viewed on 3D displays of different sizes, the same person will appear large when the display is large and small when the display is small. This problem is inherent to 3D.

To obtain natural 3D such as that observed in the real world, only part of the scene captured by the camera must be displayed when the display is small. The display cuts out a portion of the entire 3D world; therefore, when an image is viewed on a display with a smaller angle of view than the camera that shot it, only the portion of the scene that fits within the display’s angle of view should be shown. Doing so keeps sizes and distances the same as in the actual scene. To view the entire scene on a small display, the display must be moved left/right and up/down.
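How much of the captured image a given display can show without distorting sizes can be estimated with a small geometric sketch. It rests on assumptions that are not in the original: a rectilinear (pinhole) camera, a flat display viewed head-on from its center, and only the horizontal direction considered. The function names and the example dimensions are hypothetical.

```python
import math


def display_fov_deg(width_m: float, viewing_distance_m: float) -> float:
    """Horizontal angle (in degrees) that a flat display subtends at the eye."""
    return math.degrees(2.0 * math.atan(width_m / (2.0 * viewing_distance_m)))


def crop_fraction(camera_fov_deg: float, screen_fov_deg: float) -> float:
    """Fraction of the captured image width to show so that angles are preserved.

    Returns 1.0 when the display covers at least the camera's angle of view.
    """
    camera_half = math.tan(math.radians(camera_fov_deg) / 2.0)
    screen_half = math.tan(math.radians(screen_fov_deg) / 2.0)
    return min(1.0, screen_half / camera_half)


# A camera with a 60-degree horizontal angle of view (assumed value).
phone = crop_fraction(60.0, display_fov_deg(0.12, 0.35))  # 12 cm wide at 35 cm
tv = crop_fraction(60.0, display_fov_deg(1.20, 2.00))     # 1.2 m wide at 2 m

print(f"phone shows {phone:.2f} of the image width, TV shows {tv:.2f}")
```

Under these assumptions the phone can show only about 0.30 of the image width and the TV about 0.52; showing the full frame on either display shrinks the scene by the same factor.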

As mentioned before, a discrepancy occurs as soon as the entire image is crammed onto a small display without trimming. Distances and sizes are no longer accurate, and the result starts to resemble a miniature world. This problem does not exist in 2D. When the same image is sent to small and large displays, such as a smartphone screen and a large TV screen, viewers infer the original sizes of the subjects in their heads and read the image correctly without difficulty. Once a broadcast becomes 3D, this 2D approach no longer works: when the same content is sent to a smartphone and a large-screen TV, the same person can appear to be a dwarf or a giant. This problem results from the essential nature of 3D per se.

Therefore, it is ultimately not possible to achieve well-defined 3D without knowledge of VR. With current technology, objects appear unnatural and the result causes eyestrain. Movies released in theaters are shown on large screens of roughly the same size; therefore, well-made movies such as Avatar, whose director James Cameron has a solid knowledge of 3D projection, appear perfectly natural when viewed in a theater. However, when the same movies are viewed on a display the size of a household 3D TV, the result starts to look very unnatural.

Moreover, pseudo-3D created by reworking 2D is unnatural even when viewed on a large screen in a theater. The reason is that pseudo-3D is created by shooting the movie in 2D instead of 3D. Zooms and other techniques commonly used in 2D are entirely useless in 3D. Objects in 3D should become larger when the viewer moves toward them or when they approach the viewer; hence, zooming (in which objects do not move physically closer to the viewer) is a technique that only works in 2D and is useless as well as harmful for 3D. In light of all these issues, 3D movies can only succeed when shot with a thorough knowledge of VR. Unnatural 3D can therefore look more unnatural than 2D or cause eyestrain.

Further, poorly shot 3D movies are rejected by the human sensory organs. As humans, we spend our lives in the 3D real world; hence, our sensory organs are highly attuned to it, enabling us to instantly and instinctively recognize and reject poorly executed 3D projections.


3 VR MOVES 10 YEARS AFTER 3D MOVES

3.1 Dawn of VR

In the 1960s, 10 years after the first 3D craze, Ivan Edward Sutherland created 3D images using computer graphics (CG). Sutherland displayed CG images in the real world as augmented reality (AR), although the term AR had not yet been coined. The images were linked to human movements so that 3D images could be displayed from the viewer’s perspective. This technology was presented in 1968 in a paper titled “A Head-Mounted Three Dimensional Display” [4]. Sutherland’s head-mounted display came 130 years after the Wheatstone stereoscope of 1838. Although the images themselves were still line drawings, they were rendered using computer graphics, and since objects were seen from the viewer’s perspective in correspondence with the viewer’s head movements, the technology is considered the beginning of VR (although, again, the term VR was not in use at that time). Sutherland is therefore considered to be the father of both CG and VR.

3.2 First VR Craze

The first VR craze came in the 1990s, 10 years after the second 3D craze. The craze was initiated by a renowned head-mounted display (HMD) product called Eyephone that was jointly developed by VPL and NASA. It was created in 1989, approximately 20 years after Sutherland. Sutherland’s system enabled the user to move his/her head independently but did not give the impression of being in a separate location generated by the computer. In today’s terminology, Sutherland’s head-mounted 3D display was an AR system that showed the real world on a see-through display, and only created an environment with artificial computer-generated objects added to the real world that the user occupied. Therefore, it still did not go sufficiently far to be considered VR. In contrast, VPL Eyephone worked together with a device called a data glove to project the user into a VR world. In other words, the user entered the VR world.

Further, the user could view his/her own hand in this world, which was temporally and spatially synchronized to his/her movements, creating an impression of being immersed in this world.

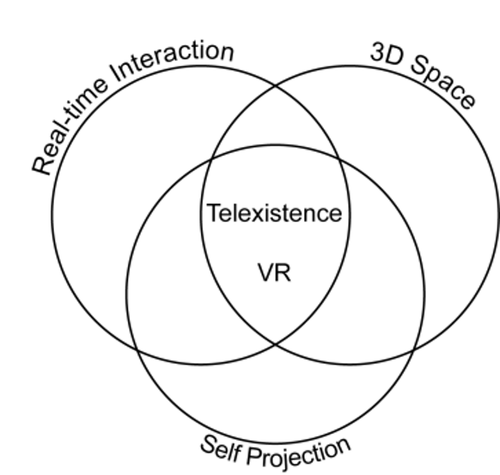

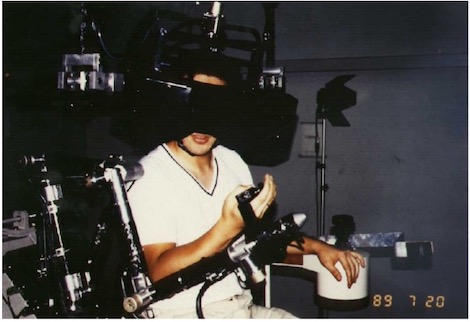

The VPL system of 1989 attained the three elements of VR, i.e., life-size 3D space, real-time interaction, and self-projection (Figure 3) [5]. Meanwhile, Susumu Tachi proposed the concept of telexistence in 1980, and in 1988 he constructed a system attaining these three elements, known as TELESAR (Telexistence Surrogate Anthropomorphic Robot). In that system, user hand operations and other aspects of system performance were verified both in real-space and in CG environments.

Figure 3: The three elements of virtual reality and/or telexistence.

In the 1990s, another useful system incorporating the three VR elements was completed. Known as CAVE (CAVE Automatic Virtual Environment), the system was exhibited and presented by Carolina Cruz-Neira, Thomas A. DeFanti, and Daniel Sandin at SIGGRAPH in 1993 [6]. The system turned the room itself into a 3D interactive environment. Subsequently, a five-surface system called CABIN was created in Japan by a team led by Michitaka Hirose. When viewing three dimensions, CAVE switched the image using LCD shutter glasses. Coming 70 years after Hammond’s 1922 mechanical shutter, it replaced the mechanical shutter with an LCD shutter. Making the shutter electronic using an LCD made it easier to use than the previous mechanical version, enabling commercial release. Incidentally, most of today’s 3D TVs and 3D movies use this LCD shutter method.

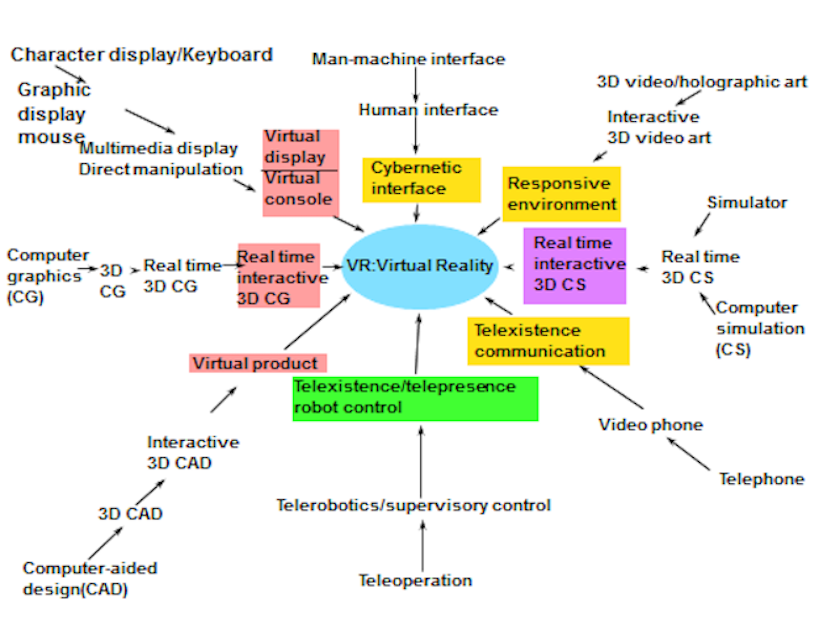

3.3 Roots of VR Advance from Several Fields in the 1980s

The roots of VR rose from several fields during the second 3D craze of the 1980s. As shown in Figure 4, researchers such as Scott Fisher advanced VR in the context of virtual displays and virtual consoles. In the field of CG, the progression proposed by Sutherland is as follows: CG → 3D CG → real-time 3D CG → real-time interactive 3D CG [5]. The idea of making 3D versions of applications such as CAD was advanced by Frederick Brooks, Jr. In the field of art, Myron Krueger and others coined the term “responsive environment” for interactive art.

In the field of computer simulations, researchers such as Thomas Furness led efforts to propose a “super cockpit” that further refined flight simulators enabling operations with the same feeling as operating an actual aircraft. Immersive communication-based conferencing applications driven by conventional phones and video phones, and immersive teleoperation applications giving users the experience of being at a particular location, such as telexistence and telepresence, were all created in the 1980s [5]. These applications were refined separately and independently in different fields, without much knowledge of similar trends in other fields.

Amid these developments, a conference was held in Santa Barbara in 1990. Letters arrived from an MIT-affiliated engineering foundation. They came by regular mail (air mail), not by email as is common today. They contained an invitation urging attendance at a research gathering of teleoperators and virtual environment researchers. Leading researchers from several different fields came together in Santa Barbara, presented their research, and held brainstorming sessions and discussions.

As they shared their research, they found many common elements across different fields. Completely different fields were seeing a variety of advances that all had a very similar aspect in common. The similarity was the pursuit of life-size 3D. Moreover, the type of 3D being sought was interactive 3D that could change, react, and morph in response to human movements.

The researchers were also looking for technology that enables a user to experience the sensation of being in a computer-generated environment or a remote environment, with a seamless link between the user and the environment. Seen from this perspective, research that at first glance appeared to differ from field to field was actually pursuing the same goal. Thus, it became clear that technology originating in one field could be applied to other fields, and that, in academic terms, the goals being sought formed a single unified discipline.

During the conference, the participants reached a consensus that devising a name for this field (VR: virtual reality) would help advance this discipline. They also decided to publish Presence (an MIT scientific journal). The 1990 Santa Barbara Conference was therefore the “big bang” that marked the first moment of VR—the inception of the academic field of VR.

Figure 4: Evolution and development of virtual reality.

3.4 The meaning of “virtual”

According to the American Heritage Dictionary (3rd edition), “virtual” is defined as existing in essence or effect though not in actual form or fact. The term “virtual” signifies the real more than it does the imaginary. Naturally, the virtual is not simply the same as reality itself. Instead, it is the essence of reality, with the same basic substance and effects as reality. The essence of something is its basic substance. The essence is not at all absolute and unique—the essence to be embodied will vary to meet the goal at hand. VR is created by focusing on the aspects that need to be reproduced to meet the desired goal; hence, humans who are experiencing the thus created VR will experience the same effect as from reality. Therefore, a VR system with the essence of reality provides the same sensations as reality and produces in humans the same effects as those produced by reality per se.

3.5 The three elements of VR

As mentioned before, three elements are required for the creation of VR. The first is a 3D space that maintains the distances and sizes of a real space. The second is real-time interaction with this space. The third is self-projection, which ensures that the user’s real body coincides spatially with the user’s virtual body, with full synchronization. This requires the existence of a virtual body in the virtual environment that represents the user. For example, it is very important that the user’s hands in the generated space exist at the location of the user’s actual hands and move in conjunction with them. Hands that look the same must move in spatial and temporal synchronization. Being able to see and touch target objects that interact with the hands gives the user the sensation of being inside the virtual environment, of existing at that location. Therefore, the three elements of VR are life-size 3D space, real-time interaction with this space, and self-projection into this space.

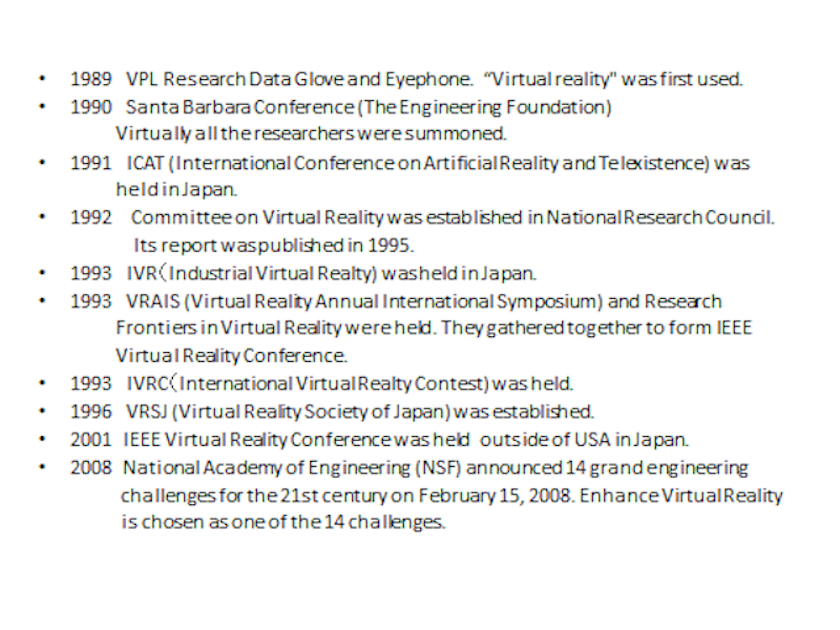

3.6 VR milestones

Figure 5 shows the major chronological milestones in the development of VR. In 1989, VPL released Data Glove and Eyephone, and the term “VR” became widespread. Subsequently, the Santa Barbara Conference was held in 1990, and the International Conference on Artificial Reality and Telexistence (ICAT) was held in Japan in 1991. (The use of “artificial reality” instead of “virtual reality” in the conference title is an interesting historical artifact.) ICAT is the oldest international conference in the field of VR. In the US, the National Research Council created the NRC Committee on VR. In 1993, the Industrial Virtual Reality Show (IVR) was held in Japan. In the US, two forerunners of the IEEE VR International Conference were held in 1993, i.e., VRAIS (Virtual Reality Annual International Symposium) and Research Frontiers in Virtual Reality. Further, in 1993, the first International Collegiate Virtual Reality Contest (IVRC) was held in Japan, a contest by, of, and for students. These events still continue to be held.

There have also been several milestones in academia. In 1996, the Virtual Reality Society of Japan (VRSJ) was created. In 2001, the IEEE Virtual Reality International Conference was held in Japan, the first time the event had been held outside the US. More recently, in 2008, the US National Academy of Engineering (NAE) selected “Enhance virtual reality” as one of its 14 Grand Challenges for Engineering to be achieved in the current century. Therefore, it can be said that VR is a technology for which continued growth is expected.

Figure 5: Chronological milestones in the development of VR.

3.7 Natural 3D converges on VR

Once a 3D work has more or less taken shape, even if not yet in its final form, the urge that always comes next is to look around it and view it from various angles rather than only from the front. What inevitably results is a craving for wide-field-of-view 3D that surrounds the viewer, as in VR. However, looking at various parts of an incomplete 3D image while moving the head can cause nausea. Achieving natural 3D projection is the key to preventing nausea, and the development of VR technology designed with this need in mind is inevitable.

Viewing a 3D work also creates the urge to touch 3D objects and have them feel real. VR is the technology that can satisfy this urge. However, touch alone is not sufficient to make an object feel real. The VR hands corresponding to the user’s hands need to be in the 3D space and need to move in spatial and temporal synchronization with the user’s hands. The user will only feel actually present and touching things in the virtual location when his/her hands move in synchronization with the VR hands in the 3D space, and VR objects move as a result of the interaction with the VR hands. The reasons that 3D crazes do not last long are that 3D engineers and 3D content creators still do not fully understand VR and that the VR technology needed to perfect 3D projection has itself not yet been perfected. The first of these two problems can be solved by 3D engineers and 3D content creators gaining an appropriate understanding of the requisite VR technology, which they can then apply to their 3D work. The second can be solved by VR engineers and researchers, including myself, making further advances in VR technology, which is the duty imposed on us.

VR crazes have started not long after 3D crazes peaked in popularity, as a result of an understanding of the limitations of 3D technology and a demand for VR to overcome them. As described previously, after the first appearance of true 3D projection in the 1920s, 3D crazes have occurred in roughly 30-year cycles. The first 3D craze was in the 1950s, the second was in the 1980s, and the third (current) craze began in 2010. These crazes always end in two or three years. The first two crazes were followed roughly 10 years later by the dawn of VR and by the first VR craze, respectively. As mentioned before, this pattern suggests that the next wave of VR will come in the 2020s. To prepare for it, VR engineers and researchers need to redouble their efforts at perfecting VR.

4 From augmented reality to human augmentation

VR applied to the real world is known as augmented reality (AR). AR augments the real world by adding computer-provided information to it. The computer information is added three-dimensionally to a real-world scene in front of the user in order to create a virtual space (information space) and to assist various human behaviors and movements. Combining a wearable computer with an eyewear-sized display device, an AR application determines the position of people by GPS, obtains information from a mobile phone, and communicates with an IC-driven ubiquitous computing device to write the information that it has obtained into the real world. For example, AR can three-dimensionally draw a map or contour lines on the real-world scene in front of the user, display names or descriptions of buildings, superimpose a procedure display for a worker, or create a visual display of hidden pipes in walls. AR is starting to be used at modern medical sites to assist in diagnosis or treatment by three-dimensionally superimposing displays of computed tomography (CT), magnetic resonance imaging (MRI), or ultrasonic images.

This approach to AR is an environment-centered approach of augmenting the real world with the world of information. However, by adopting a new approach centered on the people who use AR, AR can be used for augmenting people. Such an approach, based on a fusion of the human-centered real world and the information world, is called “human augmentation.”

Since human augmentation extends all human abilities, it not only augments sensory and intellectual abilities but also augments motion and spatial/temporal abilities. Therefore, human augmentation goes beyond the scope of AR, which augments sensory and intellectual abilities. Not only does human augmentation go beyond normal abilities, but it also encompasses the recovery of abilities that unfortunately have been lost.

Figure 6 illustrates the recovery and extension of human sensory, intellectual, motion, and spatial/temporal abilities.

Figure 6: Human augmentation.

Human augmentation users are known as “augmented humans” (an annual Augmented Human International Conference has been held since 2010). Augmented humans (AHs) are similar to the cyborgs that have been common in science fiction for many years. However, since the term “cyborg” has been so overused in science fiction novels and comic books, relatively few people are aware of its origin as a genuine scientific term.

The term first appeared in the September 1960 issue of Astronautics, in an article titled “Cyborgs and Space” by Manfred Clynes and Nathan S. Kline [7]. The article describes a system using a Rose-Nelson osmotic pump to make continuous subdermal injections of chemicals into rats at a slow, controlled rate without the organism’s awareness. Such a system, able to organically link an organism and a machine, was dubbed a “cybernetic organism,” or “cyborg” for short. The term “cybernetics” was famously coined earlier by Norbert Wiener in his book of the same name. The book’s subtitle, Control and Communication in the Animal and the Machine, gives a clear description of the issues that cybernetics deals with. A system that has reached a state in which an organism and a machine (particularly human and machine) function as a single entity instead of as separate entities is a cybernetic organism, or cyborg. As is evident from the origin of the term, a cyborg is a system with a strong, organic link between human and machine. In an augmented human, however, the link does not need to be either strong or organic. The term “augmented human (AH)” signifies a broader concept that extends from cyborg-like entities to humans who use wearable devices.

Since ancient times, one of mankind’s two major dreams has been to have a servant with the ability to faithfully carry out all tasks that the user commands of it. An age in which this dream is achieved through robots rather than in the unhappy and inhumane form of slavery is becoming a possibility through advances in mechatronics.

The other dream that humans have had since ancient times is to obtain a thorough training to raise their abilities up to their highest possible level and become superhuman—the idea of augmenting and extending human abilities. One embodiment of this dream is seen in the heroes of ancient mythology or in modern-day comic book superheroes. While robots are mainly linked to human-as-social-entity, augmenting and extending human abilities have strong ties to human-as-individual-entity.

Humans’ efforts to make up for the abilities that they lack or to raise their strength to great heights began with the use of tools and weapons. Lacking fangs, ancient humans turned to spears and swords to fight animals. However, tool use only creates a superficial connection between a human and a tool. While skilled tool users are said to become one with the tool, tools generally do not become part of the human body.

In a way, a human’s first experience of becoming a superhero may have been when he/she first rode a horse. Being able to skillfully control a horse allowed humans to quickly cover vast distances at will. Modern engine-powered vehicles and private cars in particular have enabled almost everyone to experience a taste of what it first felt like to become a superhero. However, there is a fundamental difference between private cars and public transport that is evident here—while public transport is an outgrowth of automation technology, private cars are the successors to the horse, or the forerunners of cyborgs and AHs.

Needless to say, a vehicle is an individual entity that does not form an organic link with its driver; therefore, the vehicle and the driver are not a cyborg. However, the feeling of unity with the vehicle that anyone who has ever driven has felt, and the sensation and freedom of being able to drive to see a sunrise at the beach on a whim may be precisely the mindset of a cyborg. Users who want to routinely wear mobile devices such as pocket calculators, pocket electronic interpreters, and mobile PCs are the forerunners of cyborgs and come close to being augmented humans (AHs). They are intellectual AHs.

As machines become intelligent and the link between humans and machine grows ever closer, the potential for supplementing and expanding the abilities of the individual will increase. Cyborgs and AHs are entities that have put these abilities to maximum use.

4.1 Sensory augmentation

Sensory augmentation is the extension of human sensory abilities. Normal human vision can only recognize a part of the spectrum known as visible light, which has wavelengths from the violet to the red end of the spectrum (380 to 780 nm). However, the world is filled with various other types of light, such as light of shorter and longer wavelengths than humans can perceive. Such information could be captured by sensors worn on the body, converted into visible light, and immersively presented to a human user by superimposing a model of the outside world on it. For example, a completely dark scene viewed in infrared would be seen as no longer dark. By presenting the information immersively to the human user, a user in complete darkness could work as though he/she were in a well-lit environment or could see objects through smoke.
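
One way to picture the conversion into visible light is a linear remapping of an invisible band onto the visible range. The following is a minimal sketch only: the near-infrared sensor band of 780 to 1400 nm and the function name `to_visible` are hypothetical, and a practical system would more likely map sensed intensity to brightness or false color.

```python
VISIBLE_NM = (380.0, 780.0)       # visible range quoted in the text
SENSOR_BAND_NM = (780.0, 1400.0)  # hypothetical near-infrared sensor band


def to_visible(wavelength_nm, band=SENSOR_BAND_NM, visible=VISIBLE_NM):
    """Linearly map a wavelength inside `band` onto the visible range."""
    low, high = band
    if not low <= wavelength_nm <= high:
        raise ValueError("wavelength outside the sensor band")
    fraction = (wavelength_nm - low) / (high - low)
    return visible[0] + fraction * (visible[1] - visible[0])


print(to_visible(780.0))   # 380.0
print(to_visible(1090.0))  # 580.0
print(to_visible(1400.0))  # 780.0
```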

Another example application might be radiation sensing. Although radiation is hazardous to humans, we have no natural ability to sense it. Such hazard information could also be captured by sensors worn on the body and presented to the human user, enabling the user to avoid hazardous situations while working.

Applications might include giving the user the ability to see an object inside a wall by presenting them with an internal image shot beforehand, or to see what is on the outside of a wall by projecting a real-time image of the outside scene on the wall. These applications are extensions of the human visual function.

Other applications could extend hearing by immersively presenting sound at audible frequencies in place of information obtained using ultrasound or low-frequency vibrations. The sense of touch could also be extended in a similar fashion. For example, imperceptibly fine textures and indentations could be magnified and presented as sensations that humans can feel. Another example is a wearable sensor that could capture the radiant temperature of an object of unknown temperature and present it to the user before the user makes direct contact with the object.

There are also types of human augmentation used for recovering abilities that have unfortunately been lost. A good example is a guide dog robot for the visually impaired that was researched and developed from 1977 to 1983. The final prototype model was known as MELDOG Mark IV, which enhanced the mobility of the visually impaired by providing guide dog functions. It could obediently guide a blind user in response to user commands, exhibit intelligent disobedience when detecting and avoiding obstacles in the user’s path, and provide well-organized man-machine communication not interfering with the user’s remaining senses [8].

Released in 2005, AuxDeco is another example of a human augmentation tool for the visually impaired. A small camera built into a headband worn by the user acquires an image of the immediate surroundings, which is preprocessed and then “displayed” on the user’s forehead in the form of tactile sensations generated by electrocutaneous stimulation. Forehead stimulation serves as a substitute for the retinal stimulation experienced by the normally sighted. The forehead is better adapted to this application than other areas of the body since it is easier for the user’s brain to coordinate the scene with his/her body posture in response to head movements.

4.2 Intellectual augmentation

It is also possible to intellectually augment humans by superimposing various types of information not related to physical quantities. Intellectual augmentation is almost the same as what is generally referred to as AR. The only difference is that intellectual augmentation applications are expressed from a different perspective: human-centered instead of environment-centered. As previously described in the section on AR, computer information is three-dimensionally added to the real world to assist various human activities and tasks. Examples of intellectual augmentation applications include the following: (1) guiding a walking human user, (2) displaying attributes of a building in view, (3) displaying information about the shops and offices located in a building, (4) displaying the name and other personal information of a person in view, (5) displaying operating instructions for an apparatus in view, (6) displaying the temperature of an object in view, and (7) presenting real-time emergency information.

Another example could be an application that, after hearing a piece of music, displays information such as the title or the composer of the piece in goggles worn by the user, or whispers the information into the user’s ear. Another application could inform the user of what a touched object is made of.

When a user is operating something, intellectual augmentation applications could indicate answers to questions of the user or could provide assembly or cooking instructions to the user while the task is being performed, superimposing the information on the physical object that the user is operating.

Wearing an intellectual augmentation system could enable a user to record his/her daily activities to create a “life log.” The data could be organized for easy use and stored for many years. It could be retrieved and used as needed for providing a window into the past to find out what the user has experienced and learned.

Several of these types of functions have already been implemented on today’s smartphones. Various apps used on today’s smartphones could be considered to have reached the level of apps for intellectually augmented humans if they are modified from a handheld to a hands-free format by enabling them to present information in goggles.

4.3 Motion augmentation

Typical examples of devices for augmenting human motion are cybernetic prostheses such as prosthetic limbs, and orthotic devices such as walkers. Another typical example is the exoskeleton human amplifiers that were researched and developed in the 1960s.

Exoskeleton human amplifiers were wearable robotic devices that ensured safety by covering the user in a shell- or armor-like covering, enabling humans to be placed directly in hazardous environments. They could also be used for amplifying the user’s strength. A good example was Hardyman, which was the result of research led by the US Army and General Electric (GE) in connection with a project called Mechanical Aid for the Individual Soldier (MAIS). The Hardyman project, which started in 1966, aimed to enable the user to walk at a speed of approximately 2.7 km/h while raising an object of approximately 680 kg to a height of approximately 1.8 m.

In exoskeleton human amplifiers, human motion was detected by sensors, and an exoskeleton-type robot moved in accordance with the detected movement under servo control. The robot moved freely in conformance with human limb movements while amplifying human strength.
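
The follow-the-operator behavior described above can be sketched as a simple proportional servo loop. This is a minimal illustration, not the control scheme actually used in Hardyman; the gain, the amplification factor, and the function names are all hypothetical.

```python
def servo_step(robot_angle, human_angle, gain=0.5):
    """One proportional servo update toward the sensed human joint angle."""
    error = human_angle - robot_angle
    return robot_angle + gain * error


def amplified_force(human_force_newtons, amplification=10.0):
    """Scale the operator's force by a fixed (hypothetical) factor."""
    return human_force_newtons * amplification


robot_angle = 0.0
human_angle = 1.0  # radians, as sensed on the operator's limb
for _ in range(10):
    robot_angle = servo_step(robot_angle, human_angle)

print(round(robot_angle, 3))  # 0.999
print(amplified_force(20.0))  # 200.0
```

With each update the remaining tracking error is halved, so the robot joint converges on the operator's joint angle while the output force is a scaled copy of the operator's force.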

Despite the large amount of money and the considerable number of man-hours that went into this research, it ended in failure. There were several reasons for its failure. One was that the humans placed in the robots were still in very hazardous environments irrespective of the amount of armor they were encased in. A single shield rupture could expose them to a very hazardous environment. Moreover, a crushing accident caused by an equipment malfunction would result in the human also being crushed by the robot’s amplified power.

The second reason was that nothing could be done unless the humans moved of their own volition. Conversely, when the machines moved automatically, the humans were moved along with them. The humans were therefore forced to be in constant motion while using the robots, making the robots very demanding devices to use.

Japan has recently been experiencing renewed interest in developing exoskeleton human amplifiers, and several product launches for applications such as nursing care and agriculture have attracted attention. In conjunction with research into areas such as cybernetic augmentation, this technology could lead to the development of cyborgs.

4.4 Space/time augmentation

Space/time augmentation technology frees humans from the conventional restrictions of space and time, enabling them to effectively exist in an environment removed in time, space, or both. In a sense, the technology can make humans ubiquitous, echoing the concept known as “telexistence.”

Telexistence is a technology that augments humans to give them the sensation of existing in a location other than the location that they are currently in, and enabling them to act freely at that location. For example, a robot placed in a remote location is operated in synchronization with the user’s body movements. The user sees from the robot’s perspective, hears what the robot hears, and feels through the robot’s skin, giving them the sensation of becoming the robot or of being inside the robot. This technology gives the user the highly immersive sensation of being at the robot’s location instead of at their actual location. Moreover, human movements are faithfully conveyed to the robot, and human speech emanates from the robot’s mouth, enabling the user to act and work freely at the robot’s location while remaining at their actual location.

Telexistence enables the same state achieved by wearing an exoskeleton human amplifier (described in the section on motion augmentation) to be achieved without the user physically going to the location. Therefore, space augmentation could be considered a virtual exoskeleton human amplifier. However, telexistence is superior to exoskeleton human amplifiers in two respects—robot failure poses no danger to the human user, and the robot can be operated automatically for simple jobs that can be done by the robot alone.

Creating a microrobot with a telexistence link to a human user would let the user perform tasks as a virtual miniaturized version of themselves. For example, applications of this technology could place robots in normally inaccessible locations such as inside the human body, enabling the robots to carry out various types of diagnosis and treatment. Since diagnosis and treatment require human experience and knowledge, it would be very effective to enable medical doctors to use robots with the feeling that they virtually enter the human body, where they could make observations and assessments, and carry out treatment.

Entertainment applications of the technology could place surrogate robots shaped like land or marine animals within groups of those animals so that users can experience playing with the animals as one of them.

Spatial human augmentation can also be understood in terms of the advances made in telecommunication devices such as the telephone. Famous as the inventor of the telephone and for its first successful experimental demonstration in Boston in March 1876, Alexander Graham Bell was a Scottish-born American who was passionate about education for the deaf and was a good adviser to Helen Keller. One of the first telephone users is said to have felt as though her grandmother were present and whispering in her ear. The telephone could be considered a telexistence device for sound; hence, “telephone” was certainly an appropriate choice of name.

More than 135 years after Bell’s invention, the rise of mobile phones and smartphones has enabled almost everyone to experience auditory telexistence, that is, the telephone, while walking. The invention of the telephone was followed by the invention of television (TV). However, television was not an appropriate choice of name. TV is a broadcasting device and the successor of the radio, not of the telephone. The true successors of the telephone are video phones, which are appearing in the latest mobile phones and smartphones and are starting to be used worldwide. Teleconferencing systems also aim at visual telexistence. Despite their lack of immersiveness, applications such as Polycom and Skype are practical and widespread. However, 3D images still cannot be conveyed freely.

Research is underway on telexistence booth systems aiming to enable “mutual telexistence” that realizes life-size 3D face-to-face conferences among multiple users. Placed in public places, offices, or homes, telexistence booths would convey life-size 3D images in real time. Telexistence booths would be similar to public telephone booths. Each member of a conference would enter a telexistence booth in a public place or office. Once a communication path had been established, face-to-face communication would be possible. Each member would be able to see the other members’ faces in a simulated conference room in a VR environment shared by all the members. They would be able to move around the room freely, and gestures and other body language would also be conveyed.

Time-domain human augmentation is also possible. Telexistence, which enables instantaneous transportation of humans between places with a time difference, can be considered to transcend space and time in a sense. Moreover, the life log application described previously would record the world of the past, creating a space/time augmentation that would let the user return to that past world.

One implementation of this idea would be to record the user’s behavior through a telexistence avatar robot so that a robot could reproduce it at any time. Records of people are now stored as pictures, photos, sculptures, and videos. It may be possible to store these records and memories in the form of telexistence avatar robots. Even after a person has passed away, the person could continue to exist for a long time in the form of his/her telexistence avatar robot, which has fully memorized his/her behavior and performances in addition to his/her appearance and voice.

5 Telexistence

5.1 What is Telexistence

Telexistence is a fundamental concept that refers to the general technology that allows a human being to experience a real-time sensation of being in a place other than his/her actual location and to interact with the remote environment, which may be real, virtual, or a combination of both [5]. It also refers to an advanced type of teleoperation system that allows an operator at the controls to perform remote tasks dexterously with the feeling of being in a surrogate robot working in a remote environment. Telexistence in the real environment through a virtual environment is also possible.

Sutherland [4] proposed the first head-mounted display system, which led to the birth of virtual reality in the late 1980s. This was almost the same concept as telexistence in computer-generated virtual environments. However, it did not include the concept of telexistence in real remote environments.

The concept of providing an operator with a natural sensation of existence in order to facilitate dexterous remote robotic manipulation tasks was called "telepresence" by Minsky [9] and "telexistence" by Tachi [10]. Telepresence and telexistence are very similar concepts proposed independently in the USA and in Japan, respectively. However, telepresence does not include telexistence in virtual environments or telexistence in a real environment through a virtual environment.

5.2 How Telexistence was Conceptualized and Developed

The concept of telexistence was proposed by the author in 1980 [10], and it was the fundamental principle of the eight-year Japanese national large-scale project “Advanced Robot Technology in Hazardous Environment,” which began in 1983, together with the concept of “Third Generation Robotics.” Theoretical considerations and a systematic design procedure for telexistence were established through the project. An experimental hardware telexistence system was developed, and the feasibility of the concept was demonstrated.

Our first report [11, 12] proposed the principle of a telexistence sensory display and explicitly defined its design procedure. The feasibility of a visual display providing a sensation of existence was demonstrated through psychophysical measurements performed using an experimental visual telexistence apparatus.

In 1985, a method was also proposed for developing a mobile telexistence system that can be driven remotely with both auditory and visual existence sensations. A prototype mobile televehicle system was constructed and the feasibility of the method was evaluated [13].

5.3 Telexistence Manipulation System: TELESAR

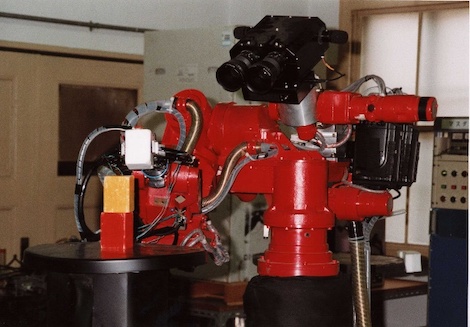

The first prototype telexistence master-slave system for performing remote manipulation experiments was designed and developed, and a preliminary telexistence evaluation experiment was conducted [14, 15, 16]. The slave robot employs an impedance control mechanism for contact tasks. An experimental block-building operation was successfully conducted using a humanoid robot called TELESAR (TELExistence Surrogate Anthropomorphic Robot). Experimental studies of the tracking tasks quantitatively demonstrated that a human can telexist in a remote environment through a dedicated telexistence master-slave system (Figure 7) [16].

Figure 7: Original TELESAR.

5.3.1 Augmented Telexistence

Telexistence can be divided into two categories: telexistence in a real remote environment which is linked via a robot to the place where the operator is located, and telexistence in a virtual environment which does not actually exist but is created by a computer. Telexisting in a real remote environment with a computer-synthesized environment combined or superimposed on it is referred to as augmented telexistence. Sensor information in the remote environment is used to construct the synthesized environment.

Augmented telexistence can be used in a variety of situations, such as controlling a slave robot in an environment with poor visibility. An experimental operation in an environment with poor visibility was successfully conducted by using TELESAR and its dual anthropomorphic virtual telexistence robot named Virtual Telesar (Figure 8) [17, 18].

Figure 8: Virtual Telesar at work.

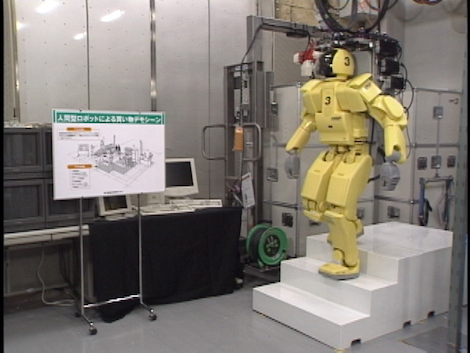

5.3.2 Telexistence into a Humanoid Biped Robot

Based on telexistence technology, telexistence into a humanoid biped robot was realized in 2000 under the “Humanoid Robot Project” (HRP) [19]. Figure 9 presents the robot descending stairs. Since the series of real images presented on the visual display is integrated with the movement of the motion base, the operator experiences a real-time sense of walking or stepping up and down. This was the world’s first successful experiment in controlling a humanoid biped robot by using telexistence.

Figure 9: Telexistence using HRP Biped Robot.

5.4 Mutual Telexistence: TELESAR II & IV

A method for mutual telexistence based on the projection of real-time images of the operator onto a surrogate robot using retroreflective projection technology (RPT) was first proposed in 1999, and the feasibility of this concept was demonstrated by the construction of experimental mutual telexistence systems using RPT in 2004 [20]. In 2005, a mutual telexistence master-slave system called TELESAR II was constructed for the Aichi World Exposition. Nonverbal communication actions such as gestures and handshakes could be performed in addition to conventional verbal communication because a master-slave manipulation robot was used as the surrogate for a human [21]. Moreover, a person who remotely visited the surrogate robot’s location could be seen naturally and simultaneously by several people standing around the surrogate robot (Figure 10).

Figure 10: TELESAR II.

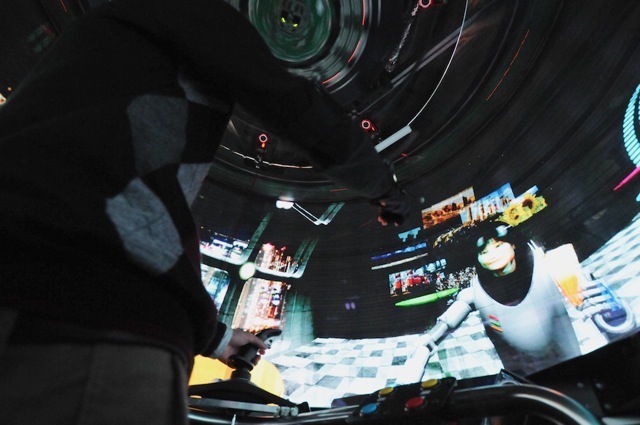

The mobile mutual telexistence system TELESAR IV, which is equipped with master-slave manipulation capability and an immersive omnidirectional autostereoscopic 3D display with a 360° field of view known as TWISTER, was developed in 2010 [22]. The remote participant’s image is projected onto the robot by RPT (Figure 11).

Figure 11: TELESAR IV.

Face-to-face communication was also confirmed, as local participants at the event were able to see the remote participant’s face and expressions in real time. It was further confirmed that the system allowed the remote participant to not only move freely about the venue by means of the surrogate robot, but also perform some manipulation tasks such as a handshake and several gestures.

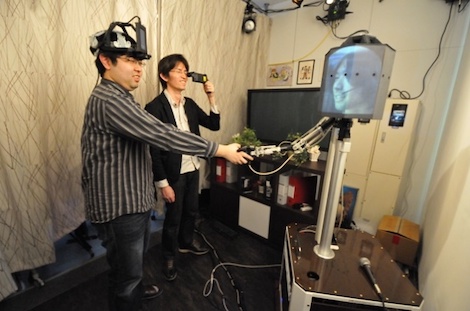

5.4.1 Face-to-Face Telexistence Communication using TWISTER Booths

The following systems were added to the original TWISTER’s omnidirectional 3D autostereoscopic display: a 3D facial image acquisition system that captures expressions and line of sight, and a user motion acquisition system that captures information about arm and hand position and orientation. An integrated system was constructed whereby communication takes place via an avatar in a virtual environment. The results of having two participants engage in TWISTER-to-TWISTER telecommunication verified that participants can engage in telecommunication in the shared virtual environment under mutually equivalent conditions (Figure 12) [23].

Figure 12: TWISTER to TWISTER Telexistence.

5.5 Telexistence Avatar: TELESAR V

In telexistence, an operator can feel his/her slave robot as an expansion of his/her bodily consciousness and can move freely and control the slave robot in a manner similar to his/her own body movement. In order to verify this concept, the TORSO (TELESAR III) system, which can acquire visual information in a more natural and comfortable manner by accurately tracking a person’s head motion with 6 DOF, was constructed [24].

TELESAR V, a master-slave robot system for performing full-body movements including 6-DOF head movement as in TORSO, was developed in 2011 (Figure 13) [25]. In July 2012, it was successfully demonstrated that the TELESAR V master-slave system can transmit fine haptic sensations such as the texture and temperature of a material from an avatar robot’s fingers to a human user’s fingers. The avatar robot is a telexistence anthropomorphic robot with a 53-degree-of-freedom body and limbs, and it can also transmit visual and auditory sensations of presence to the human in addition to the haptic sensation [26, 27].

Figure 13: TELESAR V.

5.6 Telexistence in the Future

Telexistence technology has a broad range of applications such as operations in dangerous or poor working conditions within factories, plants, or industrial complexes; maintenance inspections and repairs at atomic power plants; search, repair, and assembly operations in space or the sea; and search and rescue operations for victims of disasters and repair and reconstruction efforts in the aftermath. It also has applications in areas of everyday life, such as garbage collection and scavenging; civil engineering; agriculture, forestry, and fisheries industries; medicine and welfare; policing; exploration; leisure; and substitutes for test pilots and test drivers. These applications of telexistence are inclined toward the concept of conducting operations as a human-machine system, whereby a human compensates for features that are lacking in a robot or machine.

There is also a contrasting perspective on telexistence, according to which it is used to supplement and extend human abilities. This approach involves the personal utilization of telexistence, that is, liberating humans from the bonds of time and space by using telexistence technology.

6 Conclusion

1) The history of 3D shows that it evolves in a thirty-year cycle. Its dawn was in the 1920s, followed by the first 3D craze in the 1950s, the second 3D craze in the 1980s, and the most recent 3D craze in the 2010s.

2) The history of VR reveals that VR evolves about a decade after the 3D crazes: the dawn of VR was in the 1960s, following the first 3D craze, and the first VR craze was in the 1990s, following the second 3D craze.

3) This leads to the conjecture that the next VR / AR craze will be in the 2020s.

4) AR supplements humans in their senses and intellect, while human augmentation or AH (augmented human) augments humans not merely in their senses and intellect but also in their motions and abilities to transcend time and space.

5) Human augmentation in time and space is called telexistence. Telexistence frees humans from the constraints of time and space. It allows them to exist virtually in remote locations, as well as to interact with remote environments that are real, computer-synthesized, or a combination of both.

REFERENCES

[1] J. A. Norling: The Stereoscopic Art – A Reprint, J. of the SMPTE, vol.60, no.3, pp.286-308, 1953.

[2] W. Rollmann: Zwei neue stereoskopische Methoden, Annalen der Physik, vol.166, pp.186–187, 1853.

[3] R. Zone: Stereoscopic Cinema and the Origins of 3-D Film, 1838-1952, University Press of Kentucky, pp. 107-109, 2007.

[4] I. E. Sutherland: A Head-Mounted Three Dimensional Display, Proceedings of the Fall Joint Computer Conference, pp.757-764, 1968.

[5] S. Tachi: Telexistence, World Scientific, ISBN-13 978-981-283-633-5, 2009.

[6] C. Cruz-Neira, D. J. Sandin and T. A. DeFanti: Surrounded-screen Projection-based Virtual Reality: The Design and Implementation of the CAVE, Proceedings of ACM SIGGRAPH’93, pp.135-142, 1993.

[7] M. Clynes and N. S. Kline: Cyborgs and Space, Astronautics, September, pp.26-27; 74-76, 1960.

[8] S. Tachi, T. Tanie, K. Komoriya and M. Abe: Electrocutaneous Communication in a Guide Dog Robot (MELDOG), IEEE Transaction on Biomedical Engineering, vol. BME-32, no.7, pp.461-469, 1985.

[9] M. Minsky: Telepresence, Omni, vol.2, no.9, pp.44-52, 1980.

[10] S. Tachi, K. Tanie, and K. Komoriya: Evaluation Apparatus of Mobility Aids for the Blind, Japanese Patent 1462696, filed on December 26, 1980; An Operation Method of Manipulators with Functions of Sensory Information Display, Japanese Patent 1458263, filed on January 11, 1981.

[11] S. Tachi and M. Abe: Study on Tele-Existence (I), Proceedings of the 21st Annual Conference of the Society of Instrument and Control Engineers (SICE), pp.167-168, 1982 (in Japanese).

[12] S. Tachi, K. Tanie, K. Komoriya, and M. Kaneko: Tele-Existence (I): Design and Evaluation of a Visual Display with Sensation of Presence, Proceedings of the 5th Symposium on Theory and Practice of Robots and Manipulators (RoManSy ’84), pp.245-254, Udine, Italy, (Published by Kogan Page, London), June 1984.

[13] S. Tachi, H. Arai, I. Morimoto, and G. Seet: Feasibility Experiments on a Mobile Tele-Existence System, The International Symposium and Exposition on Robots (19th ISIR), Sydney, Australia, November 1988.

[14] S. Tachi, H. Arai, and T. Maeda: Development of an Anthropomorphic Tele-Existence Slave Robot, Proceedings of the International Conference on Advanced Mechatronics (ICAM), pp.385-390, Tokyo, Japan, May 1989.

[15] S. Tachi, H. Arai, and T. Maeda: Tele-Existence Master Slave System for Remote Manipulation, IEEE International Workshop on Intelligent Robots and Systems (IROS’90), pp.343-348, 1990.

[16] S. Tachi and K. Yasuda: Evaluation Experiments of a Tele-Existence Manipulation System, Presence, vol.3, no.1, pp.35-44, 1994.

[17] E. Oyama, N. Tsunemoto, S. Tachi and T. Inoue: Experimental Study on Remote Manipulation using Virtual Reality, Presence, vol.2, no.2, pp.112-124, 1993.

[18] Y. Yanagida and S. Tachi: Virtual Reality System with Coherent Kinesthetic and Visual Sensation of Presence, Proceedings of the 1993 JSME International Conference on Advanced Mechatronics (ICAM), pp.98-103, Tokyo, Japan, August 1993.

[19] S. Tachi, K. Komoriya, K. Sawada, T. Nishiyama, T. Itoko, M. Kobayashi, and K. Inoue: Telexistence Cockpit for Humanoid Robot Control, Advanced Robotics, vol.17, no.3, pp.199-217, 2003.

[20] S. Tachi, N. Kawakami, M. Inami, and Y. Zaitsu: Mutual Telexistence System using Retro-Reflective Projection Technology, International Journal of Humanoid Robotics, vol.1, no.1, pp. 45-64, 2004.

[21] S. Tachi, N. Kawakami, H. Nii, K. Watanabe, and K. Minamizawa: TELEsarPHONE: Mutual Telexistence Master Slave Communication System based on Retro-Reflective Projection Technology, SICE Journal of Control, Measurement, and System Integration, vol.1, no.5, pp.1-10, 2008.

[22] S. Tachi, K. Watanabe, K. Takeshita, K. Minamizawa, T. Yoshida, and K. Sato: Mutual Telexistence Surrogate System: TELESAR4 - Telexistence in Real Environments using Autostereoscopic Immersive Display, Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), San Francisco, USA, 2011.

[23] K. Watanabe, K. Minamizawa, H. Nii and S. Tachi: Telexistence into cyberspace using an immersive auto-stereoscopic display, Transactions of the Virtual Reality Society of Japan, vol.17, no.2, pp. 91-100, 2012 (in Japanese).

[24] K. Watanabe, I. Kawabuchi, N. Kawakami, T. Maeda, and S. Tachi: TORSO: Development of a Telexistence Visual System using a 6-d.o.f. Robot Head: Advanced Robotics, vol.22, pp.1053-1073, 2008.

[25] S. Tachi, K. Minamizawa, M. Furukawa, and K. Sato: Study on Telexistence LXV - Telesar5: Haptic Telexistence Robot System, Proceedings of EC2011, Tokyo, Japan, 2011 (in Japanese).

[26] S. Tachi, K. Minamizawa, M. Furukawa and C. L. Fernando: Telexistence - from 1980 to 2012, Proceedings of IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS2012), pp.5440-5441, Vilamoura, Algarve, Portugal, 2012.

[27] C. L. Fernando, M. Furukawa, T. Kurogi, S. Kamuro, K. Sato, K. Minamizawa and S. Tachi: Design of TELESAR V for Transferring Bodily Consciousness in Telexistence, Proceedings of IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS2012), pp.5112-5118, Vilamoura, Algarve, Portugal, 2012.