| Summary | How Telexistence was evolved | Telexistence vs. Telepresence | Mutual Telexistence |

| Haptic Telexistence | Haptic Primary Colors | Telexistence Avatar Robot System: TELESAR V |

| TELESAR VI: Telexistence Surrogate Anthropomorphic Robot VI | Toward a "Telexistence Society" |

| The Hour I First Invented Telexistence | How Telexistence was Invented | References |

Summary [VIDEO]

"Telexistence is a concept that denotes an extension of human existence, wherein a person exists wholly in a location, other than his or her actual current location, and can perform tasks freely there. The term also refers to the system of science and technology that enables realization of the concept."

Research and Development of Telexistence

Telecommunication and remote-controlled operations are common in our daily lives. While performing these operations, users want to have a feeling of being present on site and directly performing jobs that they would like to do, instead of controlling them remotely. However, the present commercially available telecommunication systems and/or telepresence systems do not provide the sensation of self-presence or self-existence, and hence, users do not get the feeling of being present or existing in remote places. Moreover, these systems do not provide haptic sensation, which is necessary for the direct manipulation of remote operations, resulting in not only the lack of reality, but also difficulty in performing tasks.

Prof. Tachi and his team have been working on telexistence, which aims to give a human user the sensation of being present on site and of performing tasks there directly. With a telexistence master-slave system, the user feels present in a remote environment, that is, has a sensation of self-existence or self-presence there, and can perform tasks as though he or she were actually there. The telexistence master-slave system is a virtual exoskeleton human amplifier, with which a human user can operate a remote avatar robot as if it were his or her own body; he or she can have the feeling of being inside the robot or wearing it as a garment.

The concept of telexistence was invented by Dr. Susumu Tachi in 1980, and, together with the concept of third-generation robotics, it was the fundamental principle of the eight-year Japanese national large-scale "Advanced Robot Technology in Hazardous Environment" project, which began in 1983. Theoretical considerations and a systematic design procedure for telexistence systems were established through the project. Since then, experimental hardware for telexistence systems has been developed, and the feasibility of the concept has been demonstrated.

Two important problems that remained to be solved are mutual telexistence and haptic telexistence. Mutual telexistence is a telexistence system that can provide the sensations of both self-presence and their presence, and is used mainly for communication purposes, while haptic telexistence adds haptic sensation to the visual and auditory sensations of self-presence, and is used mainly for remote operations of real tasks.

Recent advancements in telexistence have partly solved the above problems. TELESAR II (telexistence surrogate anthropomorphic robot version II) is the first system that provided the sensations of both self-presence and their presence for communication purposes, using RPT (retroreflective projection technology). For remote operation, Prof. Tachi and his team have developed a telexistence master-slave system named TELESAR V, which can transmit not only visual and auditory sensations but also haptic sensation. Haptic sensation is displayed based on the principle of haptic primary colors.

(Revised from Susumu Tachi, "Telexistence: Enabling humans to be virtually ubiquitous," Computer Graphics and Applications, vol. 36, no. 1, pp. 8-14, 2016.)

Figure A: Mutual Telexistence System TELESAR II.

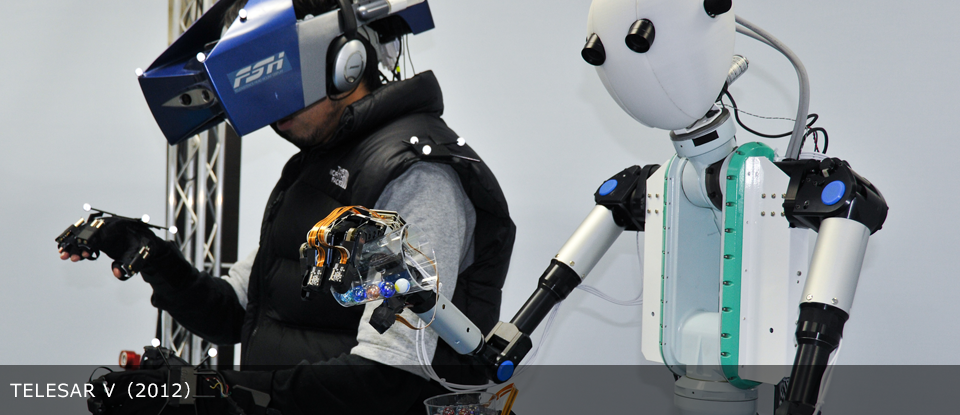

Figure B: Haptic Telexistence System TELESAR V.

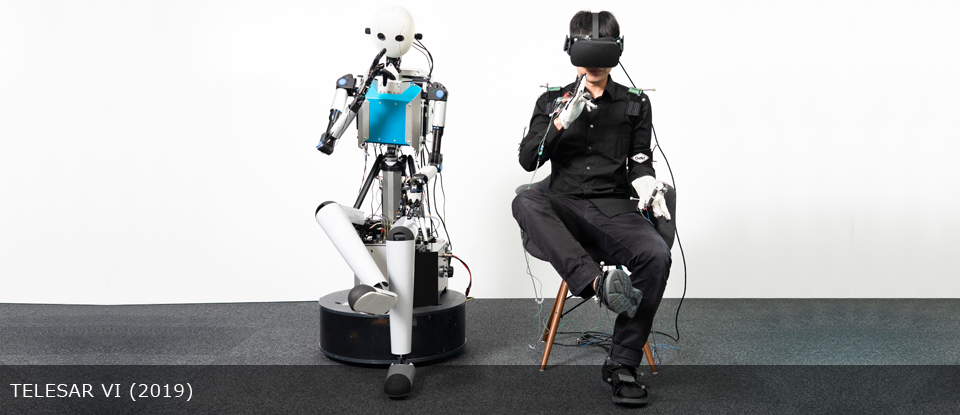

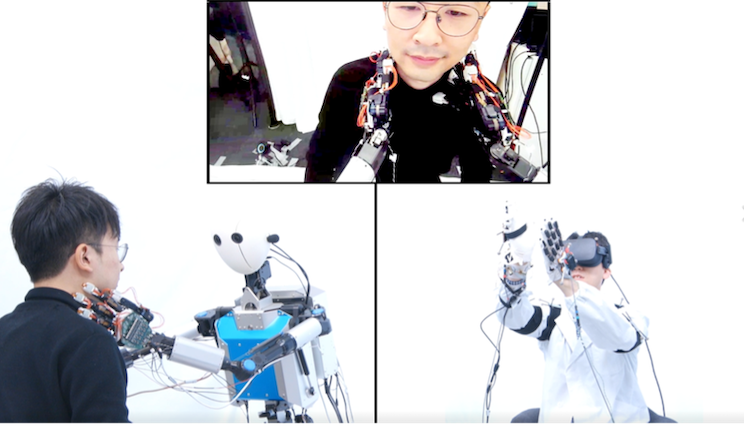

Figure C: TELESAR VI: Telexistence Surrogate Anthropomorphic Robot VI

TELESAR VI is a newly developed telexistence platform for the ACCEL Embodied Media Project. It was designed and implemented with a mechanically unconstrained full-body master cockpit and a 67-degree-of-freedom (DOF) anthropomorphic avatar robot. The avatar robot can operate in a sitting position, since its main areas of operation are intended to be manipulation and gesture. The system provides a full-body experience of our extended "body schema," which allows users to maintain an up-to-date representation in space of the positions of their different body parts, including their head, torso, arms, hands, and legs. All ten fingers of the avatar robot are equipped with force, vibration, and temperature sensors and can faithfully transmit these elements of haptic information. Thus, the combined use of the robot and audiovisual information actualizes the remote sense of existence, as if the users physically existed there, with the avatar robot serving as their new body. With this experience, users can perform tasks dexterously and feel the robot's body as their own, which provides the most simple and fundamental experience of a remote existence.

(Excerpted from Susumu Tachi, Yasuyuki Inoue and Fumihiro Kato, "TELESAR VI: Telexistence Surrogate Anthropomorphic Robot VI," International Journal of Humanoid Robotics, Vol. 17, No. 5, p.2050019(1-33), 2020.) [PDF] video

Social Implementation of Telexistence

Telexistence, which had been in the R&D stage for nearly 40 years, began to be commercialized in the US around 2007 under the name Telepresence. However, these products were mobile "Skype"-style systems that allowed communication but conveyed no sense of presence and did not allow physical work. Against this background, the Visioneers Summit was held in October 2016. It was a competition organized by the non-profit XPRIZE Foundation, which was founded in 1995 by Peter Diamandis, who was named one of the 50 greatest leaders in the world in 2014 and is regarded as a charismatic figure in the world of innovation. The goal of the Summit was to select the next XPRIZE theme from nine candidates; the nine teams' proposals were reviewed over two days by a jury of about 300 Mentors, including academics, corporate CEOs, and VC decision makers.

Susumu Tachi, Professor Emeritus at the University of Tokyo, was asked by the Avatar team of the XPRIZE Foundation to "demonstrate TELESAR V, the most advanced Avatar system in the world, at the Visioneers Summit" and did so for two days (Figure D). As a result, Avatar was selected as the theme for the next XPRIZE over other themes, and the competition for the AVATAR XPRIZE began with participants from all over the world.

https://www.youtube.com/watch?v=jWNm_vAGr3I

Through this competition, the foundation aims to industrialize what could be called the virtual teleportation industry, where humans can exist in multiple locations using robotic bodies and perform physical tasks using cutting-edge technologies such as VR, robotics, AI, and networking.

Figure D: TELESAR V demonstration at the Visioneers Summit organized by the XPRIZE Foundation.

In response to this movement, 2017 saw the emergence of start-up companies, such as Telexistence Inc., that aim to industrialize telexistence.

https://tachilab.org/jp/about/company.html

In 2018, KDDI, Nippon Steel & Sumikin Solutions, NTT DoCoMo, Toyota, and other major companies began announcing prototypes of realistic, workable telexistence-oriented products, and social implementation is now steadily beginning. In industries such as convenience stores, which serve as social infrastructure, telexistence makes it possible to save manpower by remotely controlling and automating product inspection and display operations. It also enables new store operations in which staff can be hired with a high degree of freedom, without being restricted by the physical location of the store.

A good example is Telexistence Inc.'s use of telexistence to display products at the Lawson Tokyo Port City Takeshiba Store, which opened on September 14, 2020. It is noteworthy as the first installation and operation in a real store. The avatar robot Model-T is placed in the store's back room (backyard), and the display work is done remotely from the head office via the Internet. Specifically, Model-T uses telexistence to display products such as bottled and canned beverages, which account for a large percentage of convenience store sales, as well as boxed lunches, rice balls, sandwiches, and other snacks.

The avatar robot Model-T has 22 degrees of freedom in its body and arms to display products in a narrow retail space such as a convenience store. In the video transmission between the robot and the operator, an end-to-end delay of 50ms has been achieved from the camera on the robot side to the display on the operator side. This makes it possible to operate fast-moving objects accurately and in accordance with physical intuition (Figure E).

In addition, by accumulating data on the movements of operators and robots during remote operations, such as picking and installing products, and having artificial intelligence (AI) learn from this data, we are also conducting research and development on automation to increase the ratio of automatic control and processing without remote operations. This research is being conducted within the framework of the New Energy and Industrial Technology Development Organization's (NEDO) project to promote the development of collaborative data sharing and AI systems for the promotion of connected industries.

Figure E: Display of products by telexistence avatar robot in a LAWSON store. https://youtu.be/WLDucRUwJbo

In the future, a completely new type of store operation will be possible, in which store staff can work from anywhere via robots. By reducing human-to-human contact, the robots will not only help prevent the spread of various infectious diseases that may occur in the future, such as the new coronavirus, but will also be introduced into stores that are suffering from a labor shortage due to the declining birthrate and aging population, as well as a decrease in the working population. In addition, by combining remote work and automation, one person can be in charge of multiple stores, thus contributing to solving the social issue of Japan's declining working population.

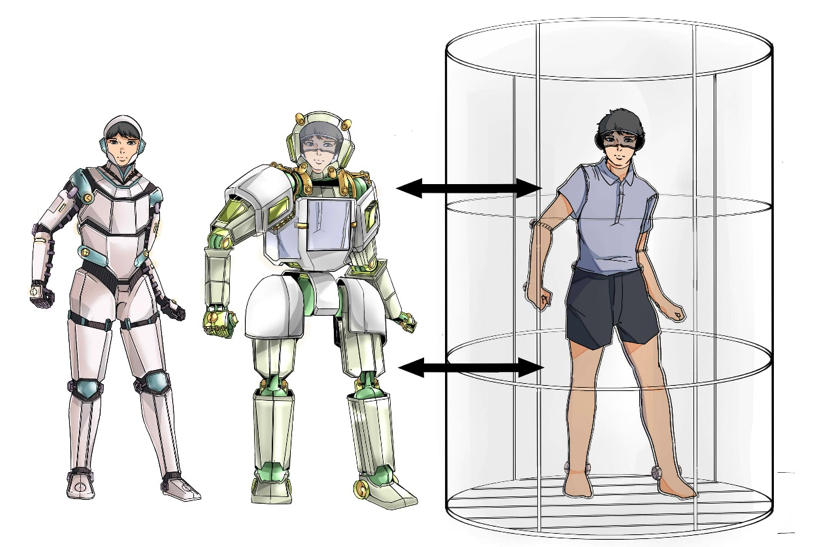

As a result of the AVATAR XPRIZE, Japan's science and technology policy has also begun to take AVATAR, or telexistence, into consideration. The first of the six goals of the Moonshot R&D System, which the Cabinet Office launched in fiscal 2020 with a fund of 115 billion yen, is to "realize a society in which people are free from the constraints of body, brain, space, and time." The program takes on the challenge of realizing a cybernetic avatar society and a cybernetic avatar life. In other words, it aims to realize a telexistence society.

Driven globally by the AVATAR XPRIZE and domestically by moonshot-type research and development, telexistence technology that enables people to move freely and instantaneously, regardless of limitations such as environment, distance, age, and physical ability, is expected to be put to practical use by 2030, and a virtual teleportation industry will be born and grow. In addition to remote employment and leisure, avatars can be used in areas where there is a shortage of doctors, teachers, and skilled technicians, and at disaster sites where human access is difficult. Furthermore, based on the 30-year cycle prediction, it is expected that a major step up will occur again in the 2050s, and society will change drastically, at which time a full-fledged telexistence society will be realized (Tachi prediction).

Telexistence, which gives humans a new body as a prosthesis and enables them to virtually travel through time and space at a moment's notice, will not only contribute to the construction of a strong society that can cope with crises such as pandemics and large-scale disasters, but will also make a great contribution to people's ability to live more human lives.


How Telexistence was evolved

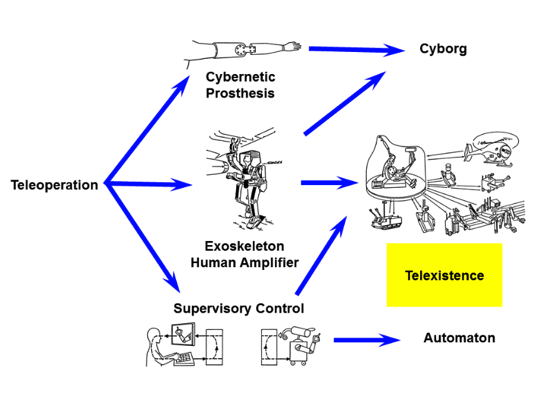

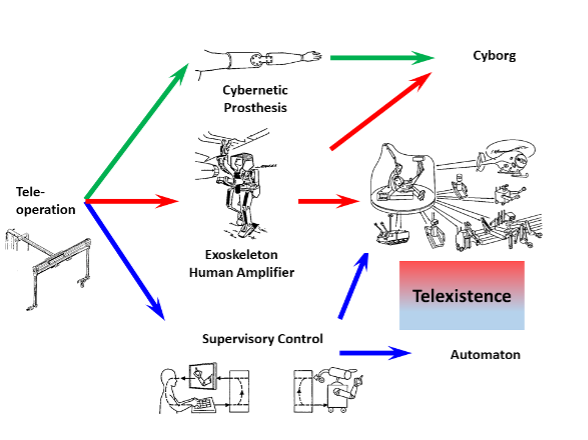

Figure 1 illustrates the emergence and evolution of the concept of telexistence. Teleoperation emerged at Argonne National Laboratory soon after World War II as a means of manipulating radioactive materials [Goertz 1952]. To let an operator work directly in the environment rather than remotely, the exoskeleton human amplifier was invented in the 1960s. In the late 1960s, a research and development program was planned to develop a powered exoskeleton that an operator could wear like a garment. The concept for the Hardiman exoskeleton was proposed by General Electric Co.: an operator wearing the Hardiman exoskeleton would be able to command a set of mechanical muscles that could multiply his strength by a factor of 25; yet, in this union of man and machine, the man would feel the object and forces almost as though he were in direct contact with the object [Mosher 1967]. However, the program was unsuccessful for the following reasons: (1) wearing the powered exoskeleton was potentially quite dangerous for a human operator in the event of a machine malfunction; and (2) it was difficult to achieve an autonomous mode, so every task had to be performed by the human operator. Thus, the design proved impractical in its original form. The concept of supervisory control was proposed by T. B. Sheridan in the 1970s to add autonomy to human operations [Sheridan 1974].

Figure 1: Evolution of the concept of telexistence through Aufheben or sublation of contradictory concepts of exoskeleton human amplifier and supervisory control.

In the 1980s, the exoskeleton human amplifier evolved into telexistence, i.e., into the virtual exoskeleton human amplifier [Tachi 1984]. With a telexistence system, the human user does not need to wear an exoskeleton robot or actually be inside the robot. The user can therefore avoid the danger of a crash or of exposure to a hazardous environment if the machine malfunctions, and can also have several robots work in autonomous mode by controlling them in supervisory control mode. Yet when a robot is used in telexistence mode, the human user feels as if he or she is inside the robot, and the system works virtually as an exoskeleton human amplifier, as shown in Fig. 2.

Figure 2: Exoskeleton Human Amplifier (left), and telexistence virtual exoskeleton human amplifier (middle and right), i.e., a human user is effectively inside the robot as if wearing the robot body. Avatar robots can be autonomous and controlled by supervisory control mode while the supervisor can use one of the robots as his virtual exoskeleton human amplifier by using telexistence mode.


Telexistence vs. Telepresence

Telexistence is a concept that denotes an extension of human existence, wherein a person exists wholly in a location, other than his or her actual current location, and can perform tasks freely there. The term also refers to the system of science and technology that enables realization of the concept.

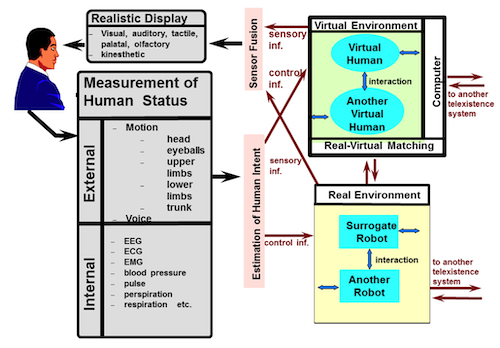

Telexistence allows human beings to experience real-time sensation of being in a place different from their actual location and interact with such remote environment, which can be real, virtual, or a combination of both. It is also an advanced type of teleoperation system that allows operators to perform remote tasks dexterously with the perception of being in a surrogate robot working in a remote environment. Telexistence in the real environment through a virtual environment is also possible (Fig. 3).

Figure 3: Telexistence in real and virtual environments and also telexistence in real environment through virtual environment.

S. Tachi: Artificial Reality and Tele-existence --Present Status and Future Prospect (Invited), Proceedings of the 2nd International Conference on Artificial Reality and Tele-Existence (ICAT '92), pp.7-16, Tokyo, Japan (1992.7) [PDF]

Ivan Sutherland proposed the first head-mounted display system [Ivan E. Sutherland: The Ultimate Display, Proceedings of IFIP Congress, pp. 506-508, 1965], which led to the birth of virtual reality in the late 1980s. This was the same concept as telexistence in computer-generated virtual environments. However, Sutherland's system did not include the concept of telexistence in real remote environments. The concept of providing an operator with a natural sensation of existence in order to facilitate dexterous remote robotic manipulation tasks was called "telepresence" by Marvin Minsky [Marvin Minsky: Telepresence, OMNI magazine, June 1980], and "telexistence" by Susumu Tachi [Susumu Tachi et al.: Evaluation Device of Mobility Aids for the Blind (Japanese Patent 1462696, filed 1980.12.26) [PDF]; Susumu Tachi et al.: Operation Method of Manipulators with Sensory Information Display Functions (Japanese Patent 1458263, filed 1981.1.11) [PDF]]. Telepresence and telexistence are very similar concepts proposed independently at almost the same time in the USA and in Japan, respectively.

However, telepresence does not include telexistence in virtual environments or telexistence in a real environment through a virtual environment. Telexistence includes both telepresence and virtual reality (VR) [Jaron Lanier: Dawn of the New Everything, p.238, Vintage, ISBN 978-1-784-70153-6 (2017)].

Secondly, the concept of telepresence is an extension of teleoperation, whereas the concept of telexistence is a conceptual evolution through Aufheben, or sublation, of the contradictory concepts of the exoskeleton human amplifier and supervisory control, as explained in the previous section (How Telexistence was evolved). Thus, a telepresence robot has no intelligence, although it might eventually be controlled by computers rather than by people, whereas telexistence robots (avatars) are by nature intelligent autonomous robots (Fig. 4). Telexistence avatars can be not only robots but also virtual humans, physical humans, animals, or any other creatures [Susumu Tachi: Tele-existence - future dream and present technology -, Journal of the Robotics Society of Japan, Vol.4, No.3, pp.295-300 (1986.6) in Japanese [PDF]].

Figure 4: Evolution of telexistence.

S.Tachi: Tele-Existence and/or Virtual Reality, Proceedings of The International Conference on Virtual Systems and MultiMedia (VSMM'95), pp.9-16, Gifu, Japan, (1995.9) (Invited Keynote Paper) [PDF]

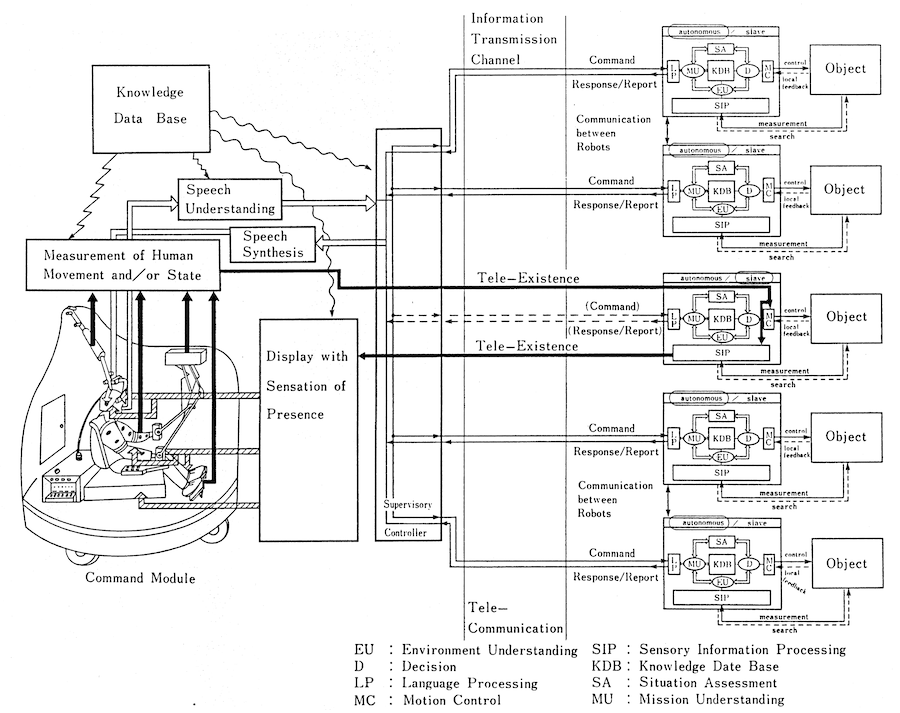

In his first paper in English, Tachi described what the final version of the telexistence system would be [Susumu Tachi, Kazuo Tanie, Kiyoshi Komoriya and Makoto Kaneko: Tele-existence(I): Design and Evaluation of a Visual Display with Sensation of Presence, in A.Morecki et al. ed., Theory and Practice of Robots and Manipulators, pp.245-254, Kogan Page, 1984. [PDF]]:

The final version of the tele-existence system will consist of intelligent mobile robots, their supervisory subsystem, a remote-presence subsystem and a sensory augmentation subsystem, which allows an operator to use robot's ultrasonic, infrared and other, otherwise invisible, sensory information with the computer graphics-generated pseudorealistic sensation of presence.

Figure 5: Telexistence system organization.

Susumu Tachi and Kiyoshi Komoriya: Third Generation Robot, Journal of the Society of Instrument and Control Engineers, Vol.21, No.12, pp.1140-1146 (1982.12) (in Japanese) [PDF]

Susumu Tachi: The Third Generation Robot, TECHNOCRAT, Vol.17, No.11, pp.22-30 (1984.11) [PDF]

The following description of the system in Fig.5 is excerpted from the above papers.

An example of a work system consisting of a human and several robots is shown in Fig. 5. Several autonomous intelligent mobile robots are working in a dangerous and harsh work environment, following the orders of the operator (supervisor) in the control capsule, sharing the work and collaborating as necessary. The supervisory controller is in charge of the division of work and the scheduling, and each intelligent robot sends the supervisor reports on the progress of its work. These reports are organized by the supervisory controller and are transmitted to the operator by voice (or visual or tactile).

The operator gives commands in a language similar to natural language, and the operator's decisions are transmitted as commands to each intelligent robot through the voice recognition device and the supervisory controller. Each intelligent robot understands the purpose of a command and uses its own intelligence and knowledge to accomplish it. The information from the robot's sensors is an important source of information for the intelligent operation of the robot, and it can also be monitored by the operator at any time. In addition, safety is checked at three levels (the intelligent robot itself, the supervisory controller, and the operator) and is thus greatly improved.

When an intelligent robot encounters difficult work that it cannot handle on its own, the operation mode of the robot is switched to remote control mode, either at the robot's request or by the judgement of the operator. At that time, instead of a conventional remote-control system, an advanced type of teleoperation system, called a tele-existence system, is adopted. This enables the operator to control the robot as if he were inside the robot. In this case, each subsystem of the intelligent robot works like a slave-type robot that is freely controlled by the operator's instructions.
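The mode switch described in this paragraph can be pictured as a small state machine. The following is only an illustrative sketch, not the control logic of any actual system: the `Robot` class, the numeric `skill` and `difficulty` scores, and the `assign_task` function are all hypothetical names introduced here.

```python
from dataclasses import dataclass
from enum import Enum, auto


class Mode(Enum):
    AUTONOMOUS = auto()      # robot works on its own under supervisory control
    TELE_EXISTENCE = auto()  # operator controls the robot as if inside it


@dataclass
class Robot:
    name: str
    skill: float                  # hypothetical capability score in [0, 1]
    mode: Mode = Mode.AUTONOMOUS

    def can_handle(self, difficulty: float) -> bool:
        """Return True if the robot can do the task with its own ability."""
        return difficulty <= self.skill


def assign_task(robot: Robot, difficulty: float,
                operator_override: bool = False) -> Mode:
    """Pick the operation mode for one task.

    The robot switches to tele-existence mode either at its own request
    (the task exceeds its ability) or by the operator's judgement.
    """
    robot_requests_help = not robot.can_handle(difficulty)
    if robot_requests_help or operator_override:
        robot.mode = Mode.TELE_EXISTENCE
    else:
        robot.mode = Mode.AUTONOMOUS
    return robot.mode


if __name__ == "__main__":
    r3 = Robot("robot-3", skill=0.6)
    print(assign_task(r3, difficulty=0.4))   # easy task, stays autonomous
    print(assign_task(r3, difficulty=0.9))   # too hard, robot requests help
```

In this sketch the two triggers named in the text (the robot's request and the operator's judgement) are kept as separate conditions so that either one alone is enough to hand control to the operator.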

In tele-existence, the internal state of the operator is estimated by measuring the operator's human motion and force state in real time. The internal state of the operator is transmitted to the robot and directly controls the robot's motion control circuit. In the figure, the third robot is controlled by the tele-existence mode, and the bold line shows the information flow between the human and the robot. The robot's artificial eyes, neck, hands, and feet are controlled by faithfully reproducing the human's movements. All the information from the artificial sensory organs of the robot is sent to the corresponding human sensory organs in a direct presentation manner.

For example, if the operator turns his head in the direction he wants to see, the robot also turns its head in the same direction, and the image corresponding to the scene that would be seen if a person were there is formed as a real image on the human's retina. When the operator brings his arm in front of his eyes, the robot's hand appears in the same position in the field of view. Therefore, a person can work by perceiving the relationship between his or her hand and the object, as well as the surrounding space, in the same way as in his or her past experience. The sensation of the robot touching an object is presented to the human hand as a skin stimulus, so the operator can work with a sensation similar to touching the object himself. The operator can thus apply human skill to the work with an operability that is ideally equal to that of direct human work, or practically equal to that of a human working inside the robot.

The robot's sensor information that humans cannot normally perceive, such as radiation, ultraviolet rays, infrared rays, microwaves, ultrasonic waves, and ultra-low frequencies (called extrasensory information), can also be used by the operator. For example, infrared sensor information can be converted to visible light to see an object in the dark at night, or ultrasonic information can be converted to audio frequencies to obtain sound information that is not available under normal conditions. In addition, superimposing extrasensory information on an ordinary visual display, or using skin sensory channels that are not normally used, enables the operator to make effective use of such information to expand human capabilities. Furthermore, if the maneuverability of the robot's arms is enhanced and the operator can control them as if they were his own arms, a human power amplifier is realized in the sense that the operator can hold objects that he could not normally hold. From this point of view, a human augmentation system can be realized.
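As a rough illustration of converting ultrasonic information to audio frequencies, the sketch below maps a frequency from an ultrasonic band into an audible band on a logarithmic scale. The band limits (20 to 100 kHz in, 200 Hz to 8 kHz out), the log-scale mapping itself, and the function name `remap_frequency` are assumptions chosen for the example; the source does not specify how the conversion is done.

```python
import math


def remap_frequency(f_hz: float,
                    src_band: tuple = (20_000.0, 100_000.0),
                    dst_band: tuple = (200.0, 8_000.0)) -> float:
    """Map a frequency in src_band to dst_band on a logarithmic scale.

    Equal frequency ratios in the ultrasonic band become equal ratios in
    the audible band, so relative pitch relationships are preserved.
    Inputs outside src_band are clamped to its edges.
    """
    src_lo, src_hi = src_band
    dst_lo, dst_hi = dst_band
    f = min(max(f_hz, src_lo), src_hi)                   # clamp to the source band
    t = math.log(f / src_lo) / math.log(src_hi / src_lo)  # position in band, 0..1
    return dst_lo * (dst_hi / dst_lo) ** t


if __name__ == "__main__":
    for f in (20_000, 40_000, 100_000):
        print(f, "Hz ->", round(remap_frequency(f), 1), "Hz")
```

The same pattern (clamp, normalize, rescale) could be applied to infrared intensity mapped to display brightness; only the input and output ranges would change.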

Furthermore, if the knowledge base in the capsule is used in a highly accessible manner, such as in MIT's Media Room, the operator's judgment will be more reliable. In addition to the use of the knowledge base as an aid to human memory and computation, we are also interested in the possibility of modifying human movements into those of a skilled operator, rather than sending them directly to the robot.

These system technologies have potential applications in hazardous and degraded environments in factories, plants, and industrial complexes; inspection, repair, and hazardous work in nuclear plants; radiation waste disposal; search, repair, and assembly work in space and ocean; search, rescue, and recovery work in disaster situations; and, under normal circumstances, in cleaning services, civil engineering and construction work, agriculture, forestry, and fisheries, law enforcement, exploration, leisure, and replacement of test pilots and test drivers.

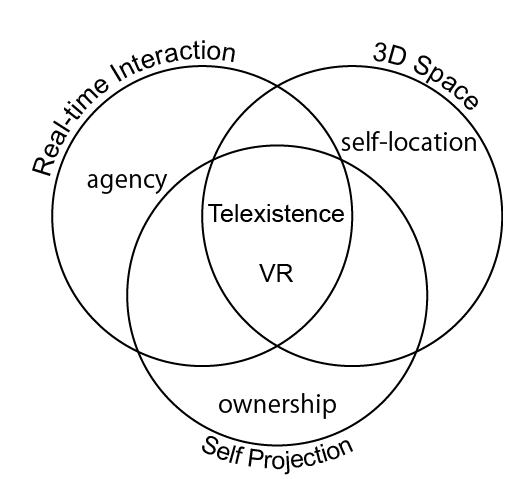

Thirdly, telexistence is a system that has or aims to have the three elements of virtual reality and telexistence.

The three elements of virtual reality and telexistence are: (1) the environment constitutes a natural three-dimensional space for human beings, (2) human beings can act freely in the environment while interacting with it in real time, and (3) the environment and the human being using it are seamless providing the user with a realistic projection of himself/herself as a virtual human or a surrogate robot. The above environment can be a computer-generated virtual environment or a real remote environment.

These are called "three-dimensional spatiality," "real-time interactivity," and "self-projection," respectively, and an ideal virtual reality system or telexistence system is one that combines all three of these elements (Figure 6).

Figure 6: Three elements of virtual reality and telexistence.

These three elements express the requirements of a system, but if we read them from the standpoint of the person using the system, they become the three requirements of self-location, agency, and ownership.

The sense of self-location is the recognition that a person clearly exists in the new place where he or she is now located. In self-location, first-person vision is dominant in location recognition.

In telexistence, the image from the viewpoint of the surrogate robot is linked to the head movement of the user to present the first-person viewpoint without any discrepancy to the user (life-size three-dimensional space).

The sense of agency is the feeling that a certain bodily movement and the action performed by that movement are being performed by oneself. In other words, in order to obtain this sense, the "prediction of the outcome of one's own actions" and the "actual outcome of one's own actions" must be consistent.

In telexistence, the surrogate robot is made to follow the user with a reduced time delay and positional shift to the extent that the user does not feel it (real time interaction).

The sense of ownership is the feeling that one's body is one's own; the rubber hand illusion is an illusory phenomenon related to this feeling. To create a sense of body ownership, it is necessary to match the body schema of the user's physical body with that of the surrogate robot, the avatar. In telexistence, the system is designed so that there is no contradiction between visual and proprioceptive sensory information (self-projection).

The senses of self-location, agency, and ownership are important requirements that must be secured in both VR and telexistence. When these three requirements are met, we can feel the virtual avatar or avatar robot as our new body, and act through that body in a VR space or a remote environment.

In telexistence, in addition to these requirements, when you act as if the avatar robot is your own body and see yourself in that state, you will experience so-called heautoscopy or out-of-body sensation. This is a phenomenon unique to telexistence that does not exist in VR.


Mutual Telexistence

There have been several commercial products with the name telepresence, such as Teliris telepresence videoconferencing system, Cisco telepresence, Polycom telepresence, Anybots QB telepresence robot, Texai remote presence system, Double telepresence robot, Suitable Beam remote presence system, and VGo robotic telepresence.

Current commercial telepresence robots controlled from laptops or tablets can provide a certain sense of their presence on the robot side, but the remote user has a poor sense of self-presence. As for the sense of their presence, commercial products have two problems: the image presented on the display is only a two-dimensional face, which is far from real, and multi-viewpoint images are not provided, so the same frontal face is seen even when viewed from the side. An ideal system should be a mutual telexistence system, which provides both the sense of self-presence and the sense of their presence. That is, the user should feel present in the remote environment where his or her surrogate robot exists, and at the same time, the user who remotely visits the surrogate robot's location should be seen naturally and simultaneously by several people standing around the surrogate robot, as if he or she actually existed there. However, almost none of the previous systems could provide both the sense of self-presence and the sense of their presence.

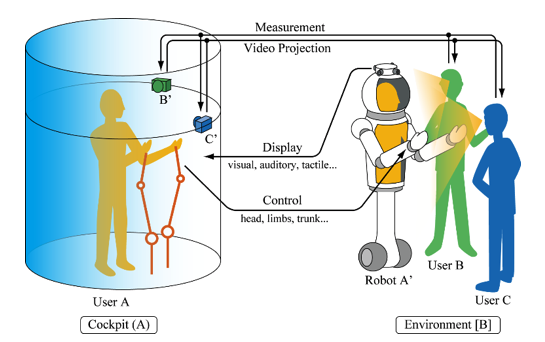

Figure 7 shows the conceptual sketch of an ideal mutual telexistence system using a telexistence cockpit and an avatar robot. User A can observe remote environment [B] using an omnistereo camera mounted on surrogate robot A'. This provides user A with a panoramic stereo view of the remote environment displayed inside the cockpit. User A controls robot A' using the telexistence master-slave control method. Cameras B' and C' mounted on the booth are controlled by the position and orientation of users B and C, respectively, relative to robot A'. Users B and C can observe different images of user A projected on robot A' by wearing their own head-mounted projectors (HMPs), which provide the correct perspective. Since robot A' is covered with retroreflective material, it is possible to project images from both cameras B' and C' onto the same robot while having the two images viewed separately by users B and C.

Figure 7: Proposed mutual telexistence system using RPT.

A method for mutual telexistence based on the projection of real-time images of the operator onto a surrogate robot using the RPT was first proposed in 1999 [Tachi 1999b], together with several potential applications such as transparent cockpits [Tachi et al. 2014], and the feasibility of the concept was demonstrated by the construction of experimental mutual telexistence systems in 2004 [Tachi et al. 2004].

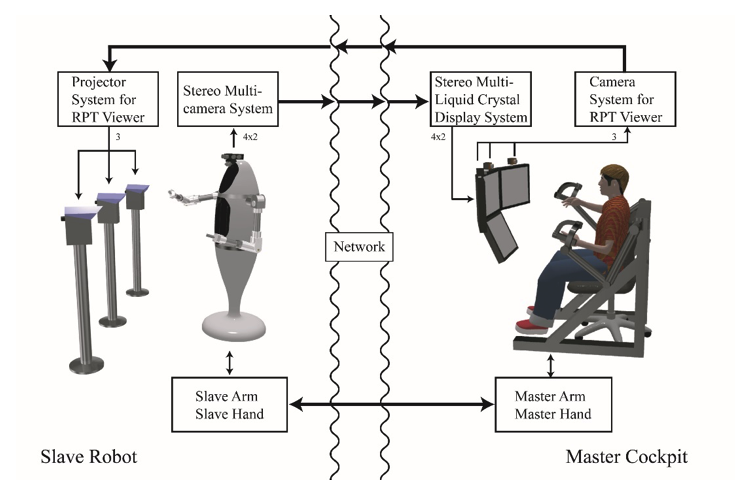

In 2005, a mutual telexistence master-slave system called TELESAR II was constructed for the Aichi World Exposition. TELESAR II is composed of three subsystems: a slave robot, a master cockpit, and a viewer system, as shown in Figure 8.

Figure 8: Schematic diagram of mutual telexistence system TELESAR II.

The robot has two human-sized arms and hands, a torso, and a head. Its neck has two degrees-of-freedom (DOFs), by which it can rotate around its pitch and roll axes. The robot has four pairs of stereo cameras located on top of its head for a three-dimensional surround display, so that an operator can see the remote environment naturally with a sensation of presence. A microphone array and a speaker are also employed for auditory sensation and verbal communication. Each arm has seven DOFs, and each hand has five fingers with a total of eight DOFs [Tadakuma et al. 2005].

The cockpit consists of two master arms, two master hands, the aforementioned multi-stereo display system, speakers, a microphone, and cameras for capturing the images of the operator in real time. For the operator to move smoothly, each master arm has a six-DOF structure so that the operator's elbow is free of constraints. To control the redundant seven DOFs of the anthropomorphic slave arm, a small orientation sensor is mounted on the operator's elbow. Therefore, each master arm can measure the seven-DOF motions of the corresponding slave arm, whereas the force from each slave arm is transmitted back to the corresponding master arm with six DOFs.

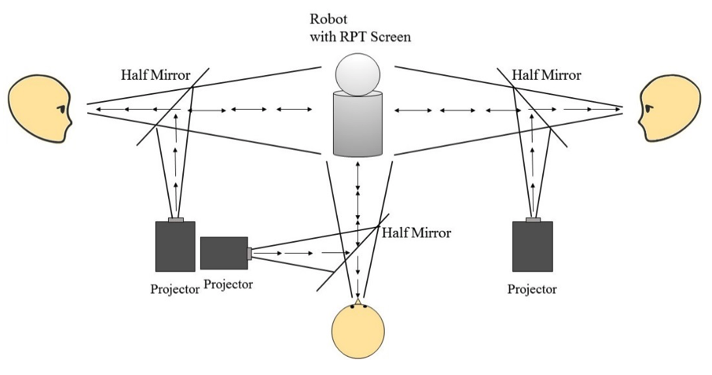

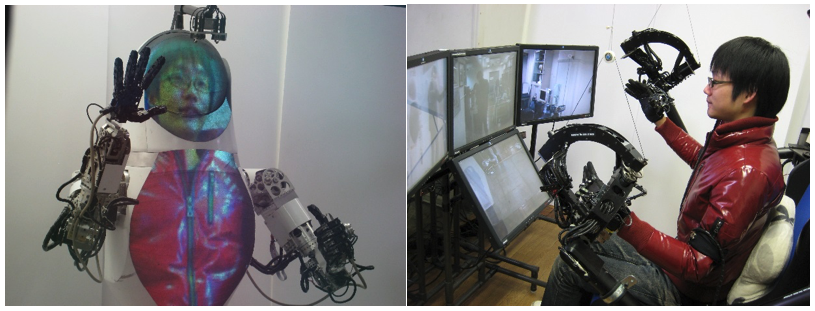

The most distinctive feature of TELESAR II is the use of an RPT viewer system. Both the motion and the visual image of the operator are important for the operator's existence to be perceived at the place where the robot is working. In order to view the image of the operator on the slave robot as if the operator were inside the robot, the robot is covered with a retroreflective material, and the image captured by the camera in the master cockpit is projected on TELESAR II.

TELESAR II acts as a screen, and a person seeing through the RPT viewer system observes the robot as though it were the operator because of the projection of the real image of the operator on the robot. The face and chest of TELESAR II are covered with retroreflective material. A ray incident from a particular direction is reflected in the same direction from the surface of the retroreflective material. Because of the characteristics of retroreflective materials, an image is projected on the surface of TELESAR II without distortion. Since many RPT projectors are used in different directions, and different images are projected corresponding to the cameras located around the operator, the corresponding images of the operator can be viewed (Figure 9).

Figure 9: Principle of the Retroreflective Projection Technology (RPT) and multi-projection images from different angles at the same time.
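The retroreflective property described above can be illustrated with a few lines of vector arithmetic. The following is a minimal sketch, not the lab's implementation: it treats the surface as an ideal retroreflector and compares it with an ordinary mirror. The function names and the sample rays for viewers B and C are hypothetical.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    """Dot product of two 3-D vectors."""
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def retroreflect(incident: Vec3) -> Vec3:
    """Ideal retroreflector: the ray returns along its incoming path."""
    return (-incident[0], -incident[1], -incident[2])


def mirror_reflect(incident: Vec3, unit_normal: Vec3) -> Vec3:
    """Ordinary mirror, for comparison: r = d - 2 (d . n) n."""
    k = 2.0 * dot(incident, unit_normal)
    return (
        incident[0] - k * unit_normal[0],
        incident[1] - k * unit_normal[1],
        incident[2] - k * unit_normal[2],
    )


# Hypothetical projector rays from two viewers hitting the same surface point.
surface_normal: Vec3 = (0.0, 1.0, 0.0)
ray_from_viewer_b: Vec3 = (1.0, -1.0, 0.0)
ray_from_viewer_c: Vec3 = (-1.0, -1.0, 0.0)

back_to_b = retroreflect(ray_from_viewer_b)  # points back toward viewer B
back_to_c = retroreflect(ray_from_viewer_c)  # points back toward viewer C
mirrored_b = mirror_reflect(ray_from_viewer_b, surface_normal)  # leaves B's line of sight
```

Because each projected ray returns toward its own source, an image projected from viewer B's position is seen by B and not by C, which is why several images can share one robot surface.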

Figure 10 (left view) illustrates the telexistence surrogate robot TELESAR II as a virtual exoskeleton human amplifier of a remote operator by showing his image as if he is inside the robot, and Figure 10 (right view) shows the operator who is telexisting in the TELESAR II robot, feeling as if he were inside the robot. Nonverbal communication such as gestures and handshakes could be performed in addition to conventional verbal communication, because a master-slave manipulation robot was used as the surrogate for a human [Tachi et al. 2004]. Moreover, a person who remotely visited the surrogate robot’s location could be seen naturally and simultaneously by several people standing around the surrogate robot, so that mutual telexistence is attained.

Figure 10: Mutual telexistence using avatar robot (left) and operator at the control (right).


Haptic Telexistence

Although the ideal telexistence should provide haptic sensations, conventional telepresence systems provide mostly visual and auditory sensations with only incomplete haptic sensations. TELESAR V, a master-slave robot system for performing full-body movements, was developed in 2011 [Tachi et al. 2012]. In August 2012, it was successfully demonstrated at SIGGRAPH that the TELESAR V master-slave system can transmit fine haptic sensations, such as the texture and temperature of a material, from an avatar robot’s fingers to the human user’s fingers [Fernando et al. 2012] based on our proposed principle of haptic primary colors [Tachi et al. 2013].


Haptic Primary Colors

Humans do not perceive the world as it is. Different physical stimuli give rise to the same sensation in humans, and are perceived as identical. A typical example of this fact is color perception in humans. Humans perceive light of different spectra as having the same color if the light has the same proportion of red, green, and blue (RGB) spectral components. This is because the human retina typically contains three types of color receptors called cone cells or cones, each of which responds to a different range of the RGB color spectrum. Humans respond to light stimuli via three-dimensional sensations, which generally can be modeled as a mixture of red, blue, and green, which are the three primary colors.

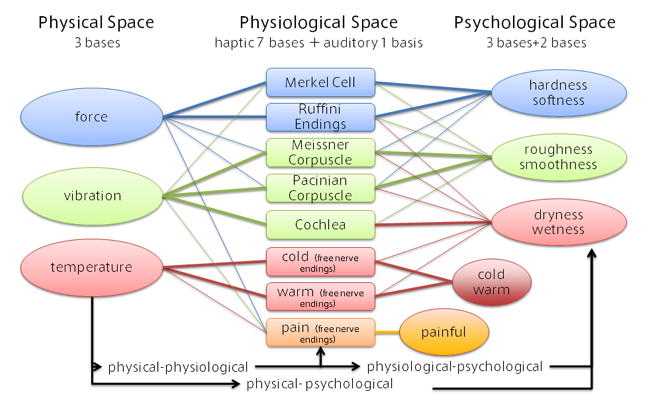

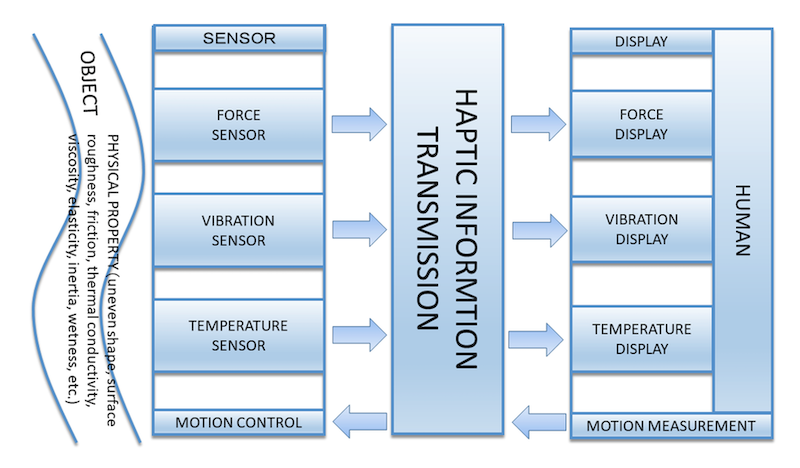

This many-to-one correspondence of elements in mapping from physical properties to psychophysical perception is the key to virtual reality (VR) for humans. VR produces the same effect as a real object for a human subject by presenting its virtual entities with this many-to-one correspondence. We have proposed the hypothesis that cutaneous sensation also has the same many-to-one correspondence from physical properties to psychophysical perception owing to the physiological constraints of humans. We call this the “haptic primary colors” [Tachi et al. 2013]. As shown in Figure 11, we define three spaces: the physical space, physiological space, and psychophysical or perception space. Different physical stimuli give rise to the same sensation in humans and are perceived as identical.

Figure 11: Haptic primary color model.

In physical space, human skin physically contacts an object, and the interaction continues with time. Physical objects have several surface physical properties such as surface roughness, surface friction, thermal characteristics, and surface elasticity.

We hypothesize that at each contact point of the skin, the cutaneous phenomena can be resolved into three components: force f(t), vibration v(t), and temperature e(t), and objects with the same f(t), v(t), and e(t) are perceived as the same, even if their physical properties are different.

We measure f(t), v(t), and e(t) at each contact point with sensors that are mounted on the avatar robot’s hand, and transmit these pieces of information to the human user who controls the avatar robot as his or her surrogate. We reproduce these pieces of information at the user’s hand via haptic displays of force, vibration, and temperature, so that the human user has the sensation that he or she is touching the object as he or she moves his or her hand controlling the avatar robot’s hand. We can also synthesize virtual cutaneous sensation by displaying the computer-synthesized f(t), v(t), and e(t) to the human users through the haptic display.
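The hypothesis can be expressed as a small data model. The sketch below is illustrative only: the class and function names, the units, and the sample values are assumptions, and the fixed numerical tolerance stands in for perceptual thresholds that the text does not specify.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class HapticSample:
    """One contact-point sample of the three haptic primary colors."""
    force: float        # f(t); newtons assumed
    vibration: float    # v(t); m/s^2 assumed
    temperature: float  # e(t); degrees Celsius assumed


def perceived_as_same(a: Sequence[HapticSample],
                      b: Sequence[HapticSample],
                      tol: float = 1e-6) -> bool:
    """Return True if two contact histories have matching f, v, e traces.

    Under the hypothesis, matching traces are perceived as identical
    regardless of the physical object that produced them.
    """
    if len(a) != len(b):
        return False
    return all(
        abs(x.force - y.force) <= tol
        and abs(x.vibration - y.vibration) <= tol
        and abs(x.temperature - y.temperature) <= tol
        for x, y in zip(a, b)
    )


# Hypothetical traces: a real cloth, a computer-synthesized copy, and cold metal.
real_cloth = [HapticSample(0.50, 0.02, 24.0), HapticSample(0.55, 0.03, 24.1)]
synthesized = [HapticSample(0.50, 0.02, 24.0), HapticSample(0.55, 0.03, 24.1)]
cold_metal = [HapticSample(0.50, 0.02, 18.0), HapticSample(0.55, 0.03, 17.5)]
```

In this model the synthesized trace is indistinguishable from the real cloth, while the metal differs only in its temperature component.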

This breakdown into force, vibration, and temperature in physical space is based on the human restriction of sensation in physiological space. Human skin has limited receptors, as is the case in the human retina. In physiological space, cutaneous perception is created through a combination of nerve signals from several types of tactile receptors located below the surface of the skin. If we consider each activated haptic receptor as a sensory base, we should be able to express any given pattern of cutaneous sensation through synthesis by using these bases.

Merkel cells, Ruffini endings, Meissner’s corpuscles, and Pacinian corpuscles are activated by pressure, tangential force, low-frequency vibrations, and high-frequency vibrations, respectively. On adding cold receptors (free nerve endings), warmth receptors, and pain receptors to these four vibrotactile haptic sensory bases, we have seven sensory bases in the physiological space. It is also possible to add the cochlea, to hear the sound associated with vibrations, as one more basis. This is an auditory basis and can be considered as cross-modal.

Since all the seven receptors are related only to force, vibrations, and temperature applied on the skin surface, these three components in the physical space are enough to stimulate each of the seven receptors. This is the reason why in physical space, we have three haptic primary colors: force, vibrations, and temperature. Theoretically, by combining these three components we can produce any type of cutaneous sensation without the need for any “real” touching of an object.
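The relationship between the seven physiological bases and the three physical components can be written as a lookup table. This is a sketch under assumptions: the receptor-to-stimulus pairs follow the text, but assigning pain receptors to extreme values of all three components is an illustrative simplification, not a claim from the source.

```python
FORCE, VIBRATION, TEMPERATURE = "force", "vibration", "temperature"

# Receptor -> physical components that can stimulate it.
RECEPTOR_COMPONENTS = {
    "Merkel cells": frozenset({FORCE}),               # pressure
    "Ruffini endings": frozenset({FORCE}),            # tangential force
    "Meissner's corpuscles": frozenset({VIBRATION}),  # low-frequency vibration
    "Pacinian corpuscles": frozenset({VIBRATION}),    # high-frequency vibration
    "cold receptors": frozenset({TEMPERATURE}),
    "warmth receptors": frozenset({TEMPERATURE}),
    # Assumption for illustration: pain responds to extremes of any component.
    "pain receptors": frozenset({FORCE, VIBRATION, TEMPERATURE}),
}

# The union of all required components is the set of haptic primary colors.
components_needed = set().union(*RECEPTOR_COMPONENTS.values())
```

Seven receptor types map onto only three physical components, which is the many-to-one structure the model relies on.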


Telexistence Avatar Robot System: TELESAR V

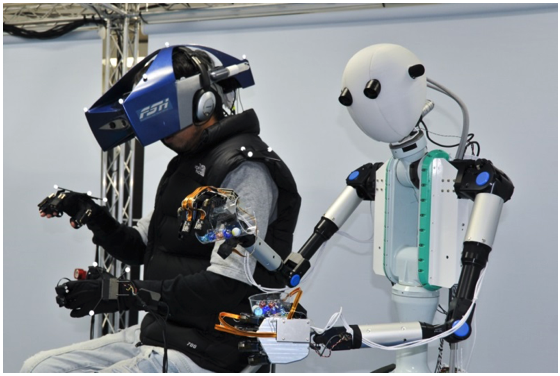

TELESAR V is a telexistence master–slave robot system that was developed to realize the concept of haptic telexistence. TELESAR V was designed and implemented by developing a high-speed, robust, full upper-body, mechanically unconstrained master cockpit and a 53-DOF anthropomorphic slave robot. The system provides an experience of our extended “body schema,” which allows a human to maintain an up-to-date representation of the positions of his or her various body parts in space. Body schema can be used to understand the posture of the remote body and to perform actions with the perception that the remote body is the user’s own body. With this experience, users can perform tasks dexterously and perceive the robot’s body as their own body through visual, auditory, and haptic sensations, which provide the simplest and most fundamental experience of telexistence. The TELESAR V master–slave system can transmit fine haptic sensations such as the texture and temperature of a material from an avatar robot’s fingers to a human user’s fingers.

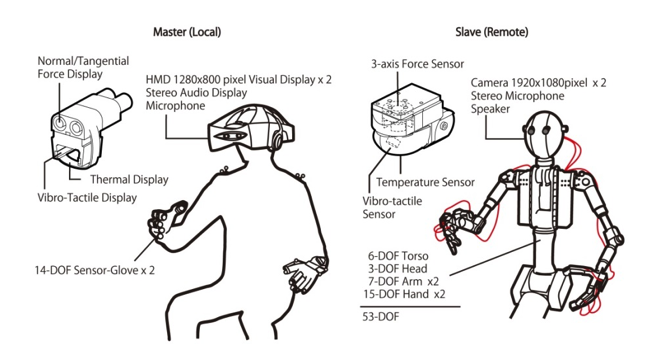

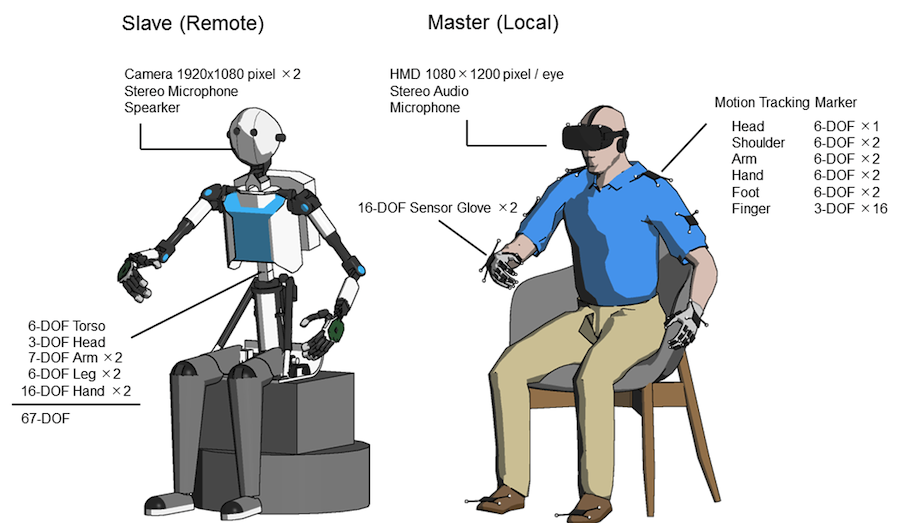

As shown in Figs. 12 and 13, the TELESAR V system consists of a master (local) and a slave (remote). The 53-DOF dexterous robot was developed with a 6-DOF torso, a 3-DOF head, 7-DOF arms, and 15-DOF hands. The robot also has Full HD (1920 × 1080 pixels) cameras for capturing wide-angle stereo vision, and stereo microphones are situated on the robot’s ears for capturing audio signals from the remote site. The operator’s voice is transferred to the remote site and output through a small speaker installed near the robot’s mouth area for conventional bidirectional verbal communication. On the master side, the operator’s movements are captured with a motion-capture system (OptiTrack). Finger bending is captured with 14 DOFs using a modified 5DT Data Glove 14.

Figure 12: General view of TELESAR V master (left) and slave robot (right).

Figure 13: TELESAR V system configuration.

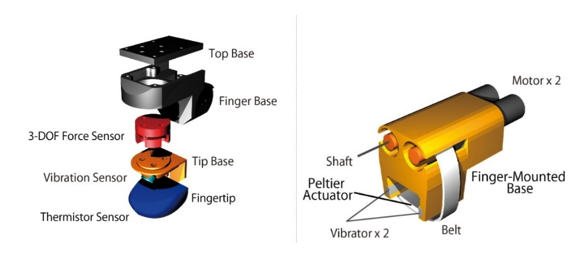

The haptic transmission system consists of three parts: a haptic sensor, a haptic display, and a processing block. When the haptic sensor touches an object, it obtains haptic information such as contact force, vibration, and temperature based on the haptic primary colors. The haptic display provides haptic stimuli on the user’s finger to reproduce the haptic information obtained by the haptic sensor. The processing block connects the haptic sensor with the haptic display and converts the obtained physical data into data that include the physiological haptic perception for reproduction by the haptic display. The details of the scanning and displaying mechanisms are described below.

First, a force sensor inside the haptic sensor measures the vector force when the haptic sensor touches an object. Then, two motor-belt mechanisms in the haptic display reproduce the vector force on the operator’s fingertips. The processing block controls the electrical current drawn by each motor to provide the target torques based on the measured force. As a result, the mechanism reproduces the force sensation when the haptic sensor touches the object.
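As a rough illustration of how a measured force could be turned into motor currents, the sketch below assumes one belt wrapped over the finger pad with a motor pulling on each end, so that the sum of the two belt tensions presses on the finger and their difference shears the skin. This geometry, the pulley radius, and the torque constant are assumptions for illustration and are not taken from the TELESAR V design; only one shear axis is modeled.

```python
from typing import Tuple


def belt_motor_currents(normal_force_n: float,
                        shear_force_n: float,
                        pulley_radius_m: float = 0.005,
                        torque_constant_nm_per_a: float = 0.01) -> Tuple[float, float]:
    """Map a measured fingertip force to currents for two belt motors.

    Assumed model: normal = tension_a + tension_b,
                   shear  = tension_a - tension_b.
    """
    # A belt can only pull, so each tension is clamped at zero.
    tension_a = max(0.0, (normal_force_n + shear_force_n) / 2.0)
    tension_b = max(0.0, (normal_force_n - shear_force_n) / 2.0)

    # Target torque at each motor pulley, then current via the torque constant.
    torque_a = tension_a * pulley_radius_m
    torque_b = tension_b * pulley_radius_m
    return (torque_a / torque_constant_nm_per_a,
            torque_b / torque_constant_nm_per_a)


pure_press = belt_motor_currents(1.0, 0.0)       # equal currents on both motors
press_and_shear = belt_motor_currents(1.0, 0.5)  # unequal currents add shear
```

With these hypothetical constants, a pure 1 N press drives both motors equally, while adding shear shifts the load toward one motor.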

Second, a microphone in the haptic sensor records the sound generated on its surface when the haptic sensor is in contact with an object. Then, a force reactor in the haptic display plays the transmitted sound as a vibration. Since this vibration provides a high-frequency haptic sensation, the information is transmitted without delay.

Third, a thermistor in the haptic sensor measures the surface temperature of the object. The measured temperature is reproduced by a Peltier actuator mounted on the operator’s fingertips. The processing block generates a control signal for the Peltier actuator. The signal is generated based on a PID control loop with feedback from a thermistor located on the Peltier actuator. Figures 14 and 15 show the structures of the haptic sensor and the haptic display, respectively.
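The temperature loop can be sketched as a textbook PID controller driving a toy thermal model. Everything numeric here is an assumption for illustration: the gains, the normalized drive range of -1 to 1, and the first-order plant are not taken from the TELESAR V hardware.

```python
from typing import Optional


class PID:
    """Textbook PID controller with output clamping and simple anti-windup."""

    def __init__(self, kp: float, ki: float, kd: float,
                 out_min: float, out_max: float) -> None:
        self.kp, self.ki, self.kd = kp, ki, kd
        self.out_min, self.out_max = out_min, out_max
        self._integral = 0.0
        self._prev_error: Optional[float] = None

    def update(self, setpoint: float, measured: float, dt: float) -> float:
        error = setpoint - measured
        derivative = 0.0 if self._prev_error is None else (error - self._prev_error) / dt
        self._prev_error = error
        candidate = self._integral + error * dt
        output = self.kp * error + self.ki * candidate + self.kd * derivative
        clamped = max(self.out_min, min(self.out_max, output))
        if clamped == output:
            # Anti-windup: accumulate the integral only when not saturated.
            self._integral = candidate
        return clamped


def simulate_fingertip(target_c: float, ambient_c: float = 25.0,
                       seconds: float = 60.0, dt: float = 0.01) -> float:
    """Drive a toy Peltier model toward target_c; return the final temperature."""
    pid = PID(kp=0.5, ki=0.1, kd=0.0, out_min=-1.0, out_max=1.0)
    temp = ambient_c  # stands in for the thermistor on the Peltier actuator
    for _ in range(int(seconds / dt)):
        drive = pid.update(target_c, temp, dt)
        # Hypothetical plant: heating/cooling by the drive plus leakage to ambient.
        temp += (5.0 * drive - (temp - ambient_c) / 10.0) * dt
    return temp


final_cold = simulate_fingertip(target_c=15.0)
final_warm = simulate_fingertip(target_c=35.0)
```

In a real device the setpoint would be the temperature measured at the robot's fingertip and the feedback would come from the thermistor on the Peltier element; the simulation only shows the loop structure.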

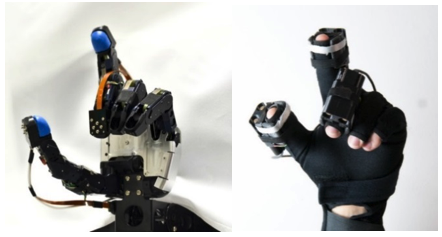

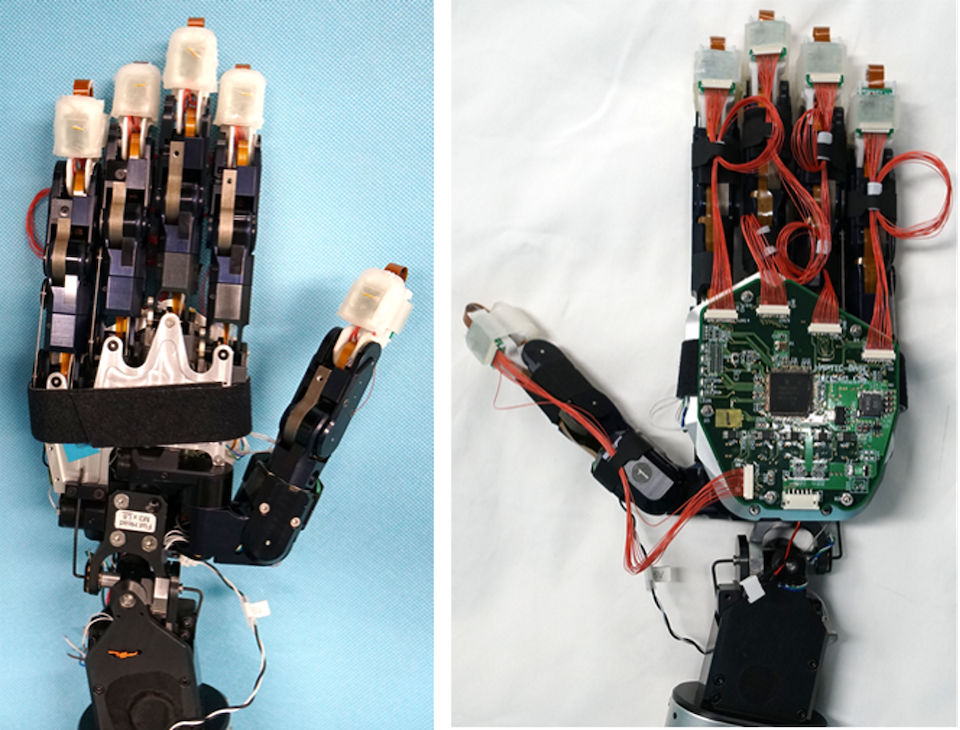

Figure 14: Structure of haptic sensor. Figure 15: Structure of haptic display.

Figure 16 shows the left hand of the TELESAR V robot with the haptic sensors, and the haptic displays set in the modified 5DT Data Glove 14.

Figure 16: Slave hand with haptic sensors (left) and master hand with haptic displays (right).

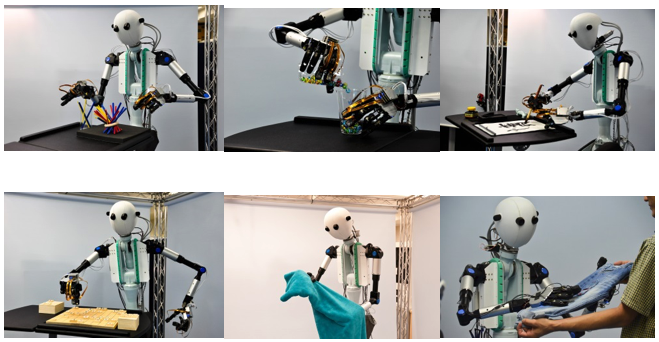

Figure 17 shows TELESAR V conducting several tasks such as picking up sticks, transferring small balls from one cup to another cup, producing Japanese calligraphy, playing Japanese chess (shogi), and feeling the texture of a cloth.

Figure 17: TELESAR V conducting several tasks transmitting haptic sensation to the user.


TELESAR VI: Telexistence Surrogate Anthropomorphic Robot VI [VIDEO]

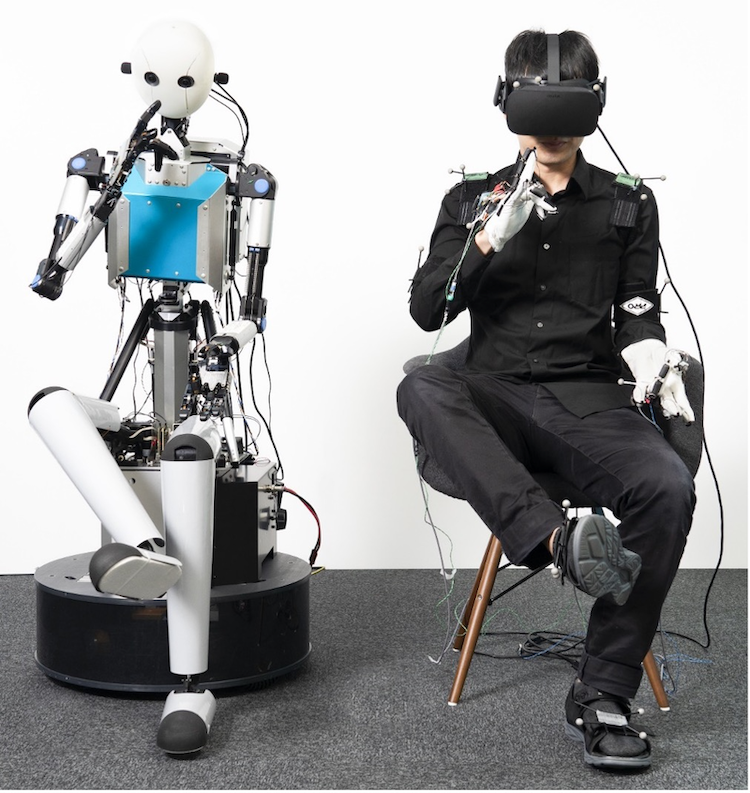

TELESAR VI is an avatar robot system developed as an embodied telexistence platform in the Embodied Media Project. As a self-incarnation (avatar), it enables a person to perform various actions ranging from watching and listening to speaking, from a remote location, as if he/she were physically present.

Summary

TELESAR VI is a successor to TELESAR V, which played a major role in the selection of avatars as the next theme of the XPRIZE. One feature of TELESAR VI is that it realizes 67 DOFs, an unparalleled feat in the world of telexistence robots. DOF, the number of joints that can be independently controlled, serves as an index of how freely the body can move. TELESAR V had 53 DOFs; in TELESAR VI, we increased this to 67. Humanoid robots with 64 and 114 DOFs have been built in the past, but they did not permit telexistence. In TELESAR VI, telexistence was realized by controlling the 67 DOFs of an avatar robot concurrently with the unconstrained measurement of 134 DOFs of the human operator, together with the transmission of a realistic sense of vision and hearing. The 67 DOFs can be broken down as follows: three in the head, six in the torso, seven for each arm, six for each leg, and 16 for each hand. With the avatar robot having almost as many DOFs as human beings, human-like actions can be naturally conveyed to it. Further, having legs that can be moved at will greatly increases the sense of body ownership, enabling operators to experience their new robotic body more vividly. The avatar robot can operate in a sitting position, since its main intended area of operation is manipulation and gesture.
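The DOF totals can be checked by simple arithmetic. The short sketch below tallies the breakdown given above for TELESAR VI and, for comparison, the breakdown given in the TELESAR V section; the dictionary layout and function name are illustrative.

```python
# Body part -> (DOFs per part, number of parts), as stated in the text.
TELESAR_VI = {
    "head": (3, 1),
    "torso": (6, 1),
    "arm": (7, 2),
    "leg": (6, 2),
    "hand": (16, 2),
}

TELESAR_V = {
    "torso": (6, 1),
    "head": (3, 1),
    "arm": (7, 2),
    "hand": (15, 2),
}


def total_dof(breakdown: dict) -> int:
    """Sum DOFs over all body parts, counting paired limbs twice."""
    return sum(dof * count for dof, count in breakdown.values())


telesar_vi_total = total_dof(TELESAR_VI)  # 3 + 6 + 14 + 12 + 32
telesar_v_total = total_dof(TELESAR_V)    # 6 + 3 + 14 + 30
```

The 14 additional DOFs in TELESAR VI come from the two 6-DOF legs and one extra DOF in each hand.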

System Configuration

Figures 18 and 19 show the configuration and the general view of the TELESAR VI system, respectively. This system is a master-slave system consisting of three subsystems: a visuoauditory information system, where visuoauditory information is measured, transmitted, and presented in real time to the operator; a movement measurement and control system, where the operator's movement is measured and transmitted in real time to control the robot such that its movement tracks that of the operator; and a haptic information system, where haptic information is measured, transmitted, and presented to the operator in real time.

Figure 18: System diagram of TELESAR VI.

Figure 19: TELESAR VI master-slave system.

TELESAR VI realized full embodiment with 67 degrees of freedom by adding legs and improving hand motor functions, and expanded haptic information transmission by integrating haptic primary color modules.

Control Principle

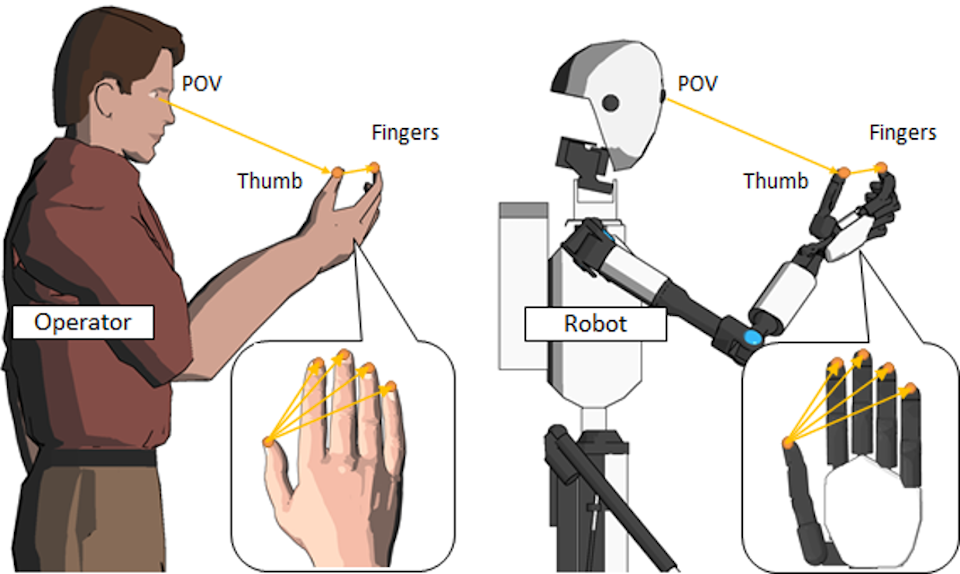

Since the size and degrees of freedom of the human and robot bodies are different, the robot will not move as expected even if the angles of each joint are made identical. What is important is to control the vector from the camera to the hand of the robot so that it matches the vector from the human eye to the hand. The same is true in controlling the fingers. TELESAR VI focuses on the vectors between the thumb and the other fingers, and introduces a new control method that makes them coincide between master and slave (Figure 20).

Figure 20: Congruence of POV to thumb/finger vectors between operator and robot.
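The vector-matching idea can be sketched in a few lines. This is a simplified illustration, not the TELESAR VI controller: it assumes the operator and robot positions are expressed in aligned coordinate frames with no scaling, and it stops at computing target positions (the inverse kinematics that would move the joints is not shown). All names and sample coordinates are hypothetical.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def add(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def slave_hand_target(operator_eye: Vec3, operator_hand: Vec3,
                      robot_camera: Vec3) -> Vec3:
    """Place the robot hand so camera->hand equals the operator's eye->hand."""
    return add(robot_camera, sub(operator_hand, operator_eye))


def slave_fingertip_target(master_thumb: Vec3, master_finger: Vec3,
                           slave_thumb: Vec3) -> Vec3:
    """Place a robot fingertip so thumb->finger matches the operator's."""
    return add(slave_thumb, sub(master_finger, master_thumb))


# Hypothetical positions in metres; the robot's camera sits lower than the eye.
eye = (0.0, 0.0, 1.6)
hand = (0.3, 0.4, 1.2)
camera = (0.0, 0.0, 1.0)
hand_target = slave_hand_target(eye, hand, camera)
```

Matching vectors rather than joint angles means that the hand appears in the same place in the operator's field of view even though the two bodies have different proportions.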

Haptic Sensing, Transmission and Display

A 6-axis force sensor, an acceleration sensor, and temperature sensors are embedded in every fingertip. Vibration information is obtained by processing the accelerometer information. The left and right boards can acquire 3-axis acceleration sensor information for five fingers in parallel at 1 kHz or higher. The 3-axis force sensor information can also be acquired for five fingers at 1 kHz or higher in parallel. The temperature sensor information can be acquired for five fingers in parallel at about 50 Hz. The size of the device is equivalent to the back of a human hand, i.e., less than 800 mm in height, less than 700 mm in length, and about 50 mm in thickness.

Figure 21: Slave hand with haptic sensors for each finger.
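The text states that vibration information is obtained by processing the accelerometer signal but does not specify the processing. One common approach, shown here purely as an assumption, is a first-order high-pass filter that removes the slowly varying gravity and posture component and keeps the faster contact vibration. The 10 Hz cutoff and the test signals are hypothetical; the 1 kHz rate follows the text.

```python
import math
from typing import Iterable, List, Optional


def high_pass(samples: Iterable[float],
              sample_rate_hz: float = 1000.0,
              cutoff_hz: float = 10.0) -> List[float]:
    """First-order high-pass filter applied to one accelerometer axis."""
    dt = 1.0 / sample_rate_hz
    rc = 1.0 / (2.0 * math.pi * cutoff_hz)
    alpha = rc / (rc + dt)
    out: List[float] = []
    prev_in: Optional[float] = None
    prev_out = 0.0
    for x in samples:
        # The first sample has no history, so the output starts at zero.
        y = 0.0 if prev_in is None else alpha * (prev_out + x - prev_in)
        out.append(y)
        prev_in, prev_out = x, y
    return out


# One second of hypothetical data at 1 kHz: gravity alone, then gravity
# plus a 50 Hz contact vibration of unit amplitude.
gravity_only = [9.81] * 1000
with_vibration = [9.81 + math.sin(2.0 * math.pi * 50.0 * n / 1000.0)
                  for n in range(1000)]

vibration_from_static = high_pass(gravity_only)
vibration_from_contact = high_pass(with_vibration)
```

For a 3-axis sensor the same filter would be applied to each axis independently.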

The sensing results are transmitted immediately, and the data is sent to the computer of the slave unit via Ethernet connection. The slave computer sends the data to the master computer via the Internet (Figure 22). The data is processed by the master computer and presented on the master glove.

Figure 22: Haptic sensing, transmission, and display.

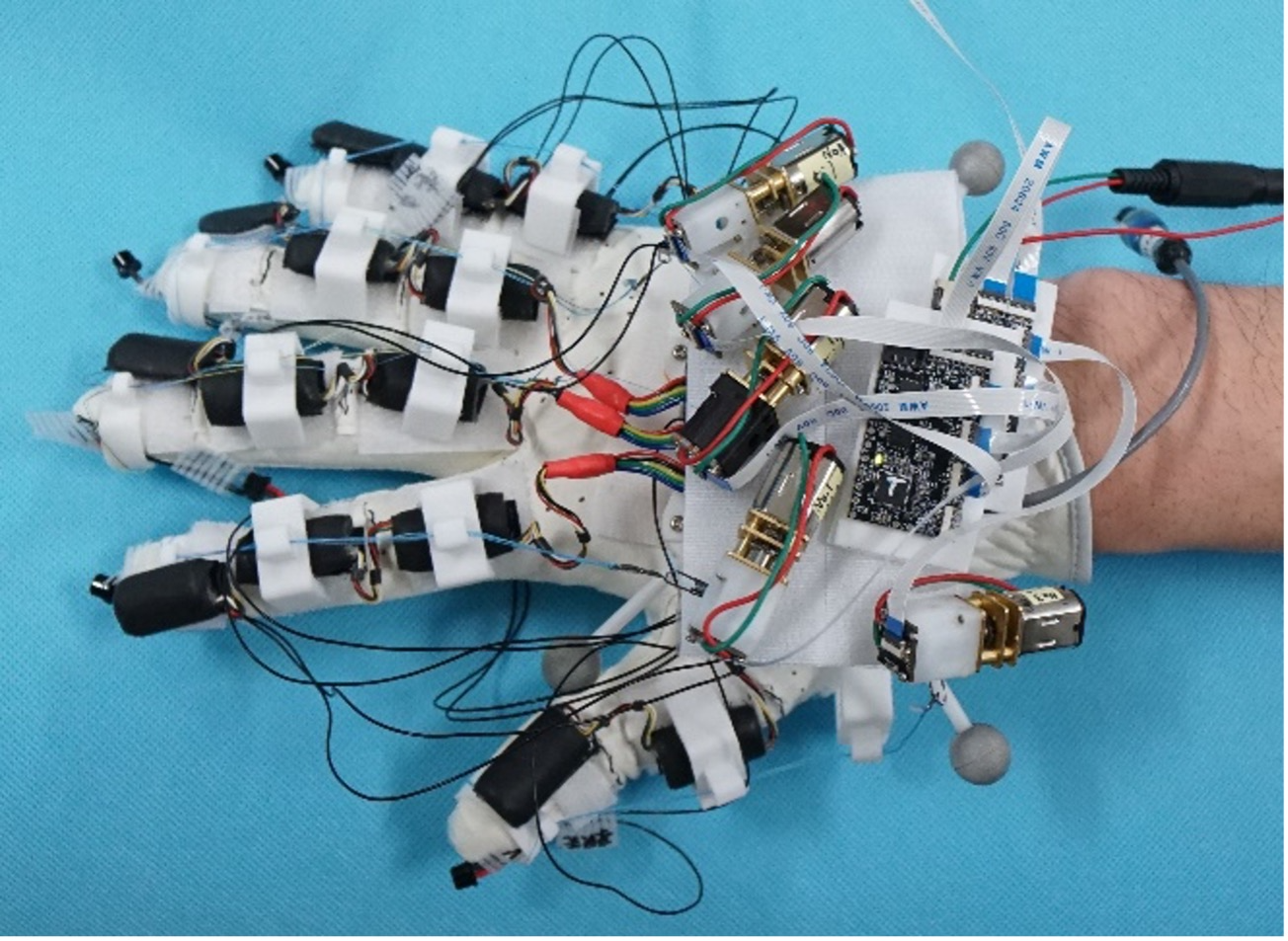

Each finger is fitted with a di-haptic module that presents vibration and temperature, and with a force display device. The force display device consists of a finger pad, a thread, a threader, and a small motor. The finger pad is pulled by the force of the motor, and the force sensation is first generated as a cutaneous sensation caused by the deformation of the skin on the belly of the finger. When the finger pad is pulled with a larger force, the force is applied to the finger joint and the force sensation is generated by proprioceptive receptors such as those in the joint capsule (Figure 23).

Figure 23: Encounter-type haptic display mounted on the master glove.

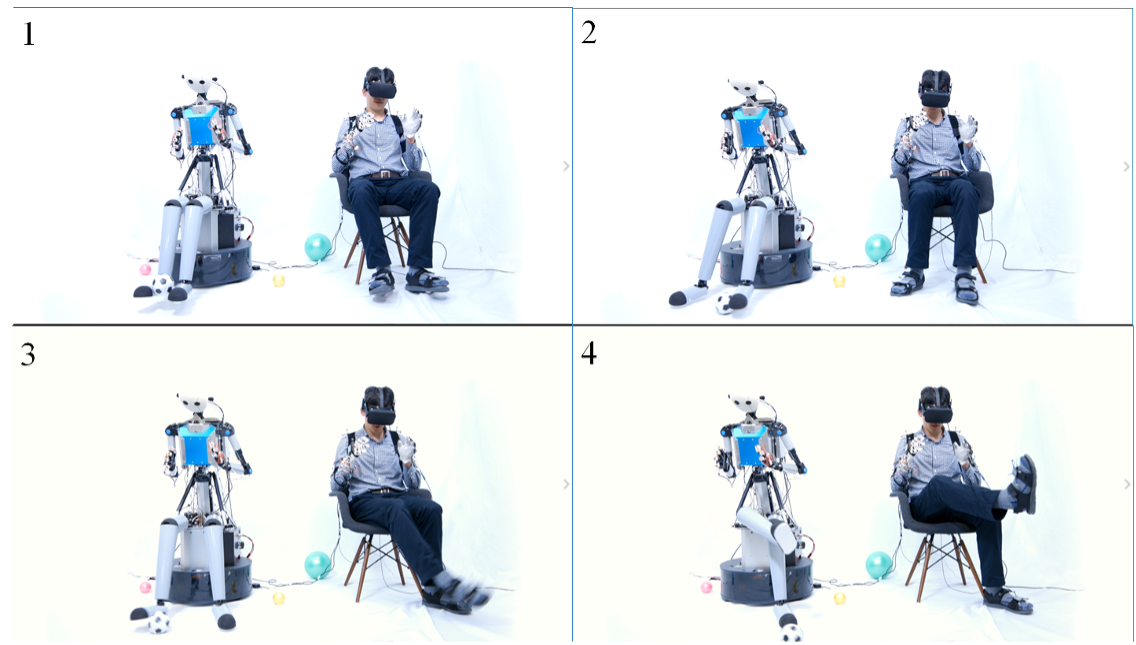

Feasibility Experiments (Demonstration)

In order to show the feasibility of TELESAR VI in the real world, demonstrations were conducted for four different situations. The demonstration video is open to the public.

Keyboard typing

Keyboard operation was chosen to show that all 10 fingers can be used. The system enables the operator to type with the same feeling as if he/she were pressing the keys directly at the remote site (Figure 24).

Figure 24: Typing HELLO.

Palpation

Palpation was chosen to demonstrate that the TELESAR VI system can transmit tactile sensations using 10 fingers. The search for remote means of healthcare delivery has accelerated around the world in response to the new virus (COVID-19). One of the problems with current remote diagnosis is that it can convey audiovisual information but not tactile information. We demonstrated the use of palpation to provide medical interviews and care in situations where direct medical treatment is a risk. The scenario assumes that a medical doctor touches the lymph nodes around the throat and checks the body temperature when a patient complains of throat pain (Figure 25).

Figure 25: Tele-palpation for lymph node.

Trapping and shooting

The robot's operator can move freely while looking at the robot's legs as if they were his own legs. To demonstrate whole-body coordination including the legs, the action of trapping and shooting a ball was chosen (Figure 26).

Figure 26: Trapping and shooting a ball.

Ikebana

As an example of performing delicate work using visual, auditory, and tactile senses, a demonstration was conducted with ikebana, the Japanese art of arranging fresh flowers, which also involves artistic aspects. Figure 27 shows the robot doing a Japanese-style flower arrangement. The avatar robot picked up a tulip stalk lying on a desk, moved the stalk above a tray, and inserted it into a water-absorbing sponge base in the tray.

Figure 27: Ikebana-style flower arrangement.


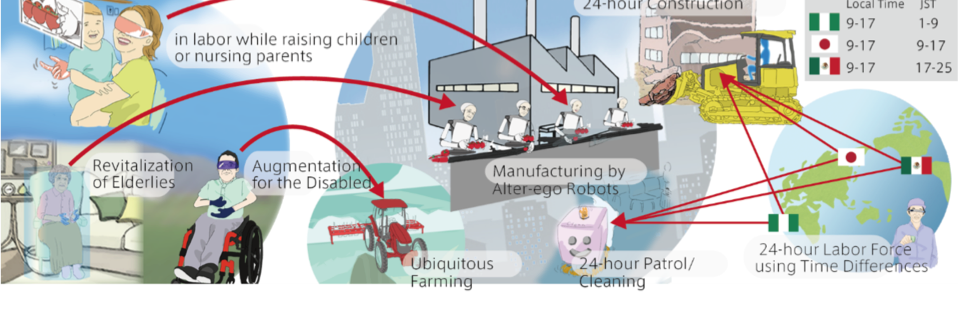

Toward a "Telexistence Society"

At present, in Japan, people are faced with many difficult problems, such as an increasing concentration of population in the metropolitan areas, an increase in the number of elderly persons and decrease in the number of workers due to the trend toward smaller families, dilemmas posed by concurrent child-rearing and work, and the fact that a great deal of time is required for commuting to and from work and the resulting lack of personal time. If it were possible to create innovative technology that changes the conventional conceptualization of movement by transferring physical functions without this being accompanied by actual travel, these difficulties could be overcome.

Working at home remotely to date has been limited to communications and/or paperwork that transmit audio-visual information and data, as well as conversations. It was impossible to carry out physical work at factories or operations at places such as construction sites, healthcare facilities, or hospitals, which cannot be accomplished unless the person in question is actually on site. Telexistence is a technology that departs from the conventional range of remote communications that transmit only the five senses, and it realizes an innovative method that transmits all the physical functions of human beings and enables remote work involving labor and operations that was impossible until now.

If a telexistence society that can delegate physical functions were realized, the relationship between people and industry and the nature of society would be fundamentally changed. The problems of the working environment would be resolved, and it would no longer be necessary to work in adverse environments. No matter where a factory is located, workers would be assembled from the entire country or the entire world, so the conditions for locating factories will see revolutionary changes compared to the past, and population concentration in the metropolitan area can be avoided. Since foreign workers would also be able to attend work remotely, the myriad problems accompanying immigration as a mere labor force, separate from humanitarian immigration, can be eliminated. Moreover, it will be possible to ensure a 24-hour labor force at multiple overseas hubs by making use of time differences, rendering the night shift unnecessary. Both men and women will be able to participate in labor while raising children, and this will help to create a society in which it is easier to raise children.

The time-related costs due to travel in global business will be reduced. Commuting-related travel will become unnecessary, and transportation problems can be alleviated. It is predicted that it will no longer be necessary to have a home near one’s workplace, the concentration of population in the metropolis will be alleviated, the work-life balance will be improved, and the people concerned will be able to live where they wish and lead fulfilling lives.

In addition, owing to additional functions of an avatar robot, which is the body of the virtual self, even the elderly and handicapped will not be at a disadvantage physically compared to young people, since they can augment and enhance their physical functions to surpass their original bodies, and thus they can participate in work that gives full play to the abundant experience amassed over a lifetime. The quality of labor will rise greatly, thereby reinvigorating Japan. The hiring of such specialists as technicians and physicians with world-class skills will also be facilitated, and optimal placement of human resources according to competence can also be achieved.

With a view to the future, it will be possible to respond instantly from a safe place during disasters and emergencies, and this technology can also be used routinely to dispatch medical services, caregivers, physicians, and other experts to remote areas. In addition, owing to the creation of new industries such as tourism, travel, shopping, and leisure, it will greatly improve convenience and motivation in the lives of citizens, and it is anticipated that a healthy and pleasant lifestyle will be realized in a clean and energy-conserving society.

In this manner, it goes without saying that the realization of a “telexistence society” that makes it possible for human beings to virtually exist in remote places is an extremely high-impact challenge technologically. One can even conclude that it is a non-continuous innovation that differs from novel improvements involving progress of existing work equipment and environments insofar as it radically alters both the nature of labor per se and people’s lifestyles.

(Excerpted from Susumu Tachi, “Memory of the Early Days and a View toward the Future,” Presence, Vol.25, No.3, pp.239-246, 2016.)


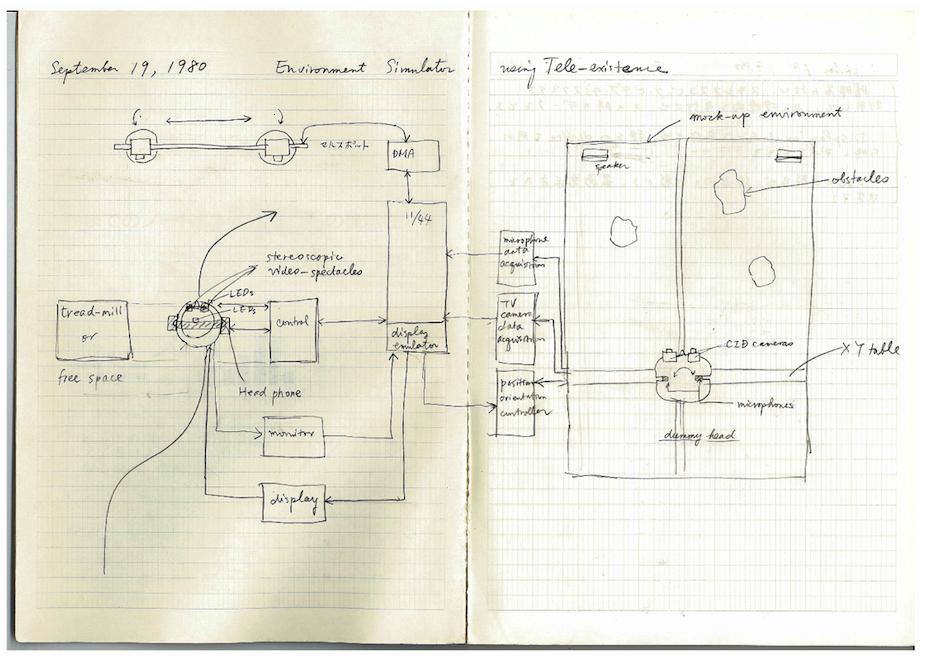

The Hour I First Invented Telexistence

From Susumu Tachi and Michitaka Hirose ed.: Virtual Technology Laboratory, p.102, Kogyo Chosa Kai, 1992. (ISBN4-7693-5054-6)

The idea occurred to me in the late summer of 1980, when I had returned to the laboratory in Tsukuba after a year of research at MIT, where I worked as a senior visiting scientist. My research toward the world’s first Guide Dog Robot, invented by me in Japan, was at its final stage, and I put all my energy into it while incorporating research results from MIT. At MIT, I worked with Professor Robert W. Mann, a renowned researcher known for the Boston Arm, with whom I proposed a systematic and quantitative evaluation system for mobility aids for the blind using a mobility simulator with virtual apparatus.

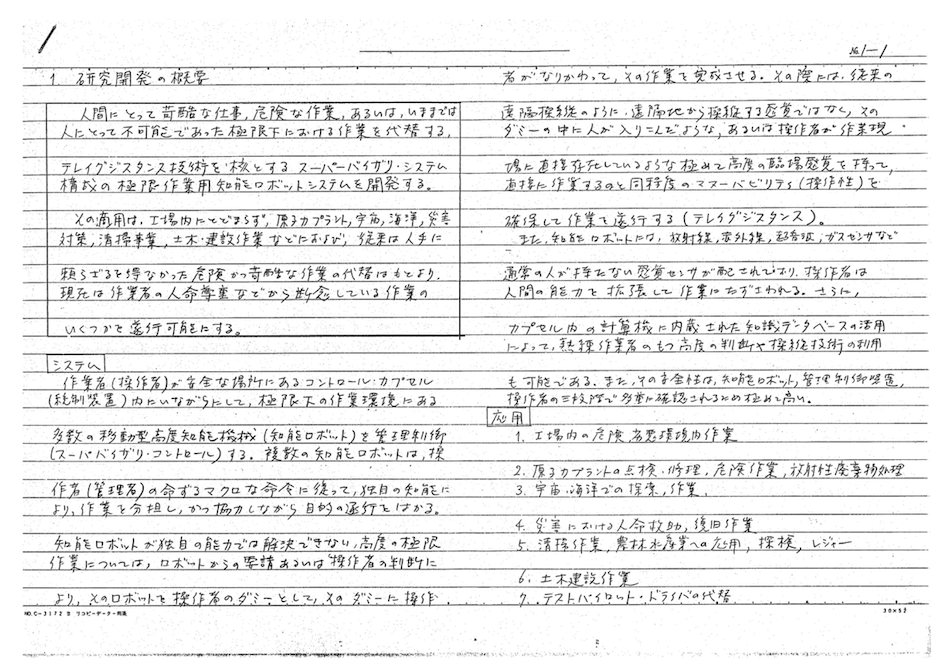

I came up with the idea of telexistence as a new development of the Guide Dog Robot research and the research from MIT as I was walking one morning along a corridor in the laboratory on September 19, 1980. I was suddenly reminded of the fact that human vision is based on light waves and that humans only use two images that are projected on the retina; they construct a three-dimensional world by actively perceiving how these images change with time. The robot would only need to provide humans, by measurement and control, with the same image on the retina as the one that would be perceived by human eyes. As I somewhat rediscovered this fact, all my problems dissipated and I started to tremble with excitement. I went back to my office immediately and wrote down what I had just discovered and all the ideas that sprang up following that discovery (Fig.1). Surprisingly enough, I simultaneously came up with the idea of how to design a telexistence visual display.

The invention of telexistence changed the way I see the world. I believe that telexistence and virtual reality will free people from temporal and spatial constraint. Telexistence will also allow us to understand the essence of existence and reality. Virtual reality and telexistence show ever more possibilities today and the world watches them as the key technology of the twenty-first century. I will continue to explore the boundless world of telexistence and virtual reality for a while.

Fig.1 Sketch of the idea when I invented the concept of telexistence.


How Telexistence was Invented

From Susumu Tachi: Telexistence and Virtual Reality, pp.148-15, The Nikkan Kogyo Shimbun, 1992. ISBN4-526-03189-5

Telexistence is a concept that originated in Japan. I would like to introduce how I invented the concept.

In 1976 I started the world-first research on a Guide Dog Robot dubbed MELDOG. MELDOG was a research and development project to assist vision-impaired people by providing a robot with the functions of a guide dog. This is a system whereby the robot recognizes the environment and communicates information about it to the human, to help vision-impaired people walk. One issue that arose in our research into guide dog robots was, when a mobility aid like the guide dog robot obtains environmental information, how should it present that information to the human to enable them to walk?

The following type of issue was also important. Suppose there is a parked car on the left 3m ahead, or a person walking in the same direction 1m in front, or a crossroads 10m ahead. How can a mobility aid for the blind like the Guide Dog Robot convey this information to the human? It needs to be properly communicated to the user through their remaining senses, such as sound or cutaneous stimulation. Working out this communication method is the essence of designing mobility aids for vision-impaired people. In other words, we want to know what kind of information, communicated in what way, will allow people to walk freely. However, no such method had been established. It was difficult even to investigate how to communicate information to make it easier for people to walk.

All the equipment at that time was designed based on a repeated process of somebody thinking “I wonder if this will work”, designing a device based on that idea, trying it out and modifying it if it didn’t work. With such a method, it takes time to design, make and try out the device, and then remake it again and again. In addition, even if a device was easy to use for the one person who evaluated it, a different user would often not find it easy to use. So, at the same time as systematically designing a device that would be easy for anyone to use, we also needed to make a device that would suit each individual user. In reality, making an actual device based on this process was easier said than done, not just because of the time and cost involved, but because there was no design and development methodology at all.

One method that was devised to solve this problem was to use a computer to build a system that communicates the information a person needs in order to walk, and to present this information to the person artificially while systematically changing it and evaluating the result. Professor Robert W. Mann, the well-known professor who developed the Boston Arm, the world’s first myoelectric-controlled prosthetic arm, was at the Massachusetts Institute of Technology (MIT). I worked with Professor Mann at MIT from 1979 to 1980 as a senior visiting scientist. Our research was based on the following idea.

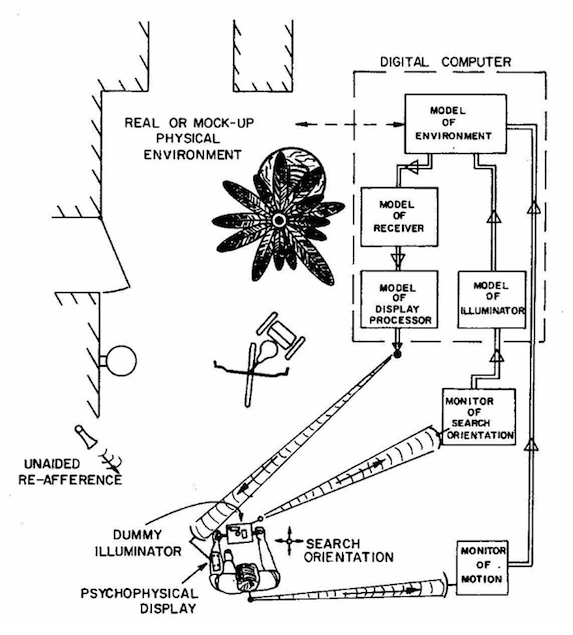

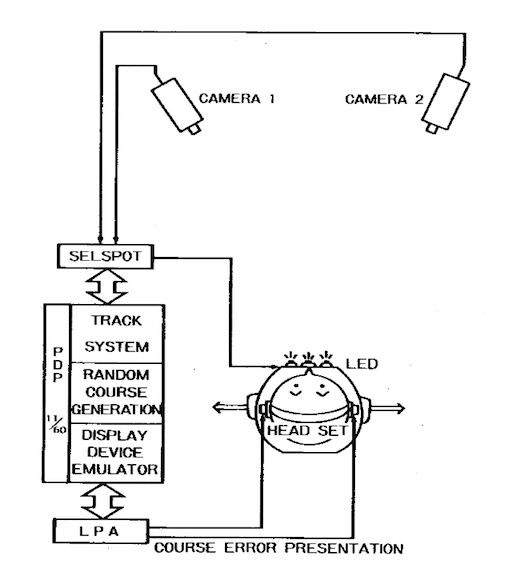

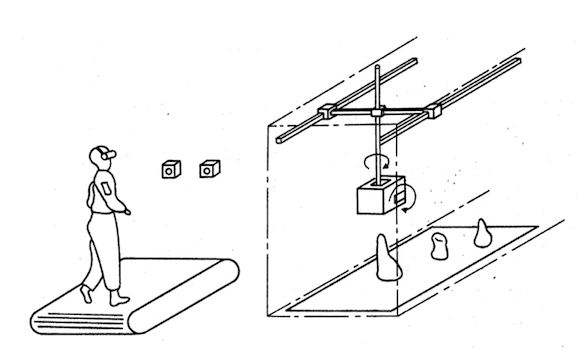

This was “basically, rather than actually making a device, make a transmission method inside the computer and use this to communicate information to the human. This can be optimized for each individual user, and will make it possible to create a device that is easy for anyone to use.” To explain in more detail, what we were thinking during my time at MIT was as follows. Using the mobility aid, a person walks around an actual-size model space similar to an actual space. Measure the person’s movements at that time. Perform a simulation on the computer, and work out what kind of environmental information is being obtained by the mobility aid from the person’s movements. Then communicate that information to the person in every way we could think of. Try changing the communication method and measure how the person walks. By systematically repeating this, we would quantitatively investigate what information conveyed in what way makes it easier to walk, by evaluating how the person walks (Figures 2 and 3).

Figure 2 Human / equipment / environment simulator. (From Robert W. Mann: The Evaluation and Simulation of Mobility Aids for the Blind, American Foundation for the Blind Research Bulletin, No.11, pp.93-98, 1965.)

Figure 3 Experimental arrangement for real-time evaluation of sensory display devices for the blind. (From Susumu Tachi, Robert W. Mann and Derick Rowell: Quantitative Comparison of Alternative Sensory Display for Mobility Aids for the Blind, IEEE Transactions on Biomedical Engineering, Vol.BME-30, No.9, pp.571-577, 1983.)
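The systematic comparison described above can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the method names, the trial records, the scoring rule and the `penalty_s` parameter are hypothetical placeholders, not the procedure or the data of the MIT experiments.

```python
from statistics import mean

# Hypothetical trial records: (display method, walking time in s, collisions).
# The numbers are illustrative placeholders, not measured data.
TRIALS = [
    ("method_A", 42.0, 1),
    ("method_A", 38.0, 0),
    ("method_B", 47.0, 2),
    ("method_B", 45.0, 1),
]


def score(time_s, collisions, penalty_s=10.0):
    """Lower is better: walking time plus an assumed penalty per collision."""
    return time_s + penalty_s * collisions


def rank_methods(trials):
    """Group trials by display method and rank the methods by mean score."""
    by_method = {}
    for method, time_s, collisions in trials:
        by_method.setdefault(method, []).append(score(time_s, collisions))
    # Sort ascending so that the method with the lowest mean score comes first.
    return sorted((mean(scores), method) for method, scores in by_method.items())


ranking = rank_methods(TRIALS)
for mean_score, method in ranking:
    print(f"{method}: mean score {mean_score:.1f}")
```

Running the same ranking separately on each user's trials would be one way to reflect the point made above that the communication method should be tuned to the individual.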

In the summer of 1980 I returned to Japan and attempted to develop this a bit further. This was a ground-breaking method, but it had its disadvantages. When walking in an actual space, it is dangerous to bump into things. What is more, actual spaces cannot easily be changed. This means that once you have moved in a space, the next time you can walk in the same space even without a mobility aid.

This was no good. We needed to be able to fully evaluate the mobility aid while freely changing the space in different ways. So, we needed to freely change the space using something like a computer. That was one of the ideas behind the concept of creating a virtual space on a computer that you could walk around. But this was before the term “virtual reality” had even been coined, and it was almost impossible to create both the mobility aid and the space on a computer.

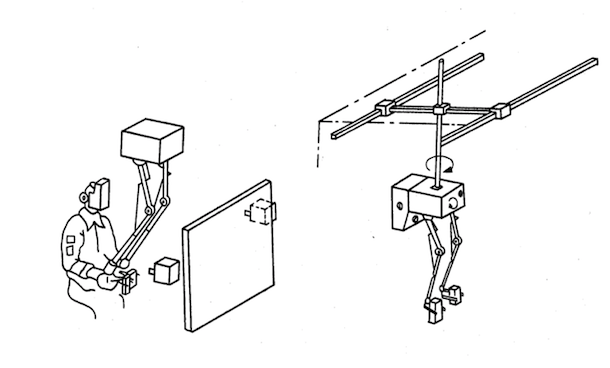

So, the idea I came up with as the next stage was that, instead of making it all on the computer, we would make the actual space, but get a robot to walk around it because it was dangerous for a human. Then, the person would walk in a safe area, and we could communicate these human movements to the robot, to get the robot to move. If we could evaluate it using this system, it would not put the human in the dangerous position of bumping into obstacles, and the person could walk in one place but have the sensation of being where the robot was. Another advantage of this method was that it allowed us to extend our research to look at how sighted people, as well as vision-impaired people, walk.

In other words, we were giving a robot a sense of sight and getting it to walk. Even for a sighted person, if the robot’s sight is made worse, the person gradually becomes unable to see. How does their way of walking change when this happens? What kind of information needs to be extracted and provided to the human to allow them to walk as they did before? I devised apparatus to make such research possible. This was proposed as a patent for “Evaluation Apparatus of Mobility Aids for the Blind” (Figure 4).

Figure 4 Evaluation Apparatus of Mobility Aids for the Blind (patent filed 1980.12.26); Science and Technology Agency Featured Invention No. 42 (1983.4.18).

At the same time, I realized that by making a robot move in a different space in this way, it might be possible to do various kinds of work using a robot in place of a human. My group had been studying robots, so I had already been familiar with teleoperation for a long time. By using this new technology, what had previously been thought of as teleoperation would advance to a new stage. With this method, unlike conventional teleoperation, the operator is able to work with the sensation of being where the robot is. I realized that this would dramatically improve the efficiency of work. This was on September 19, 1980. This was the idea for the patent for “Operation Method of Manipulators with Sensory Information Display Functions” relating to manipulation (Figure 5).

Figure 5 Operation Method of Manipulators with Sensory Information Display Functions (patent filed 1981.1.11).

This is how the concept of telexistence originated. What is more, it came together with the Advanced Robot Technology in Hazardous Environments project, which started in 1983. During preparation for the national project on Advanced Robot Technology in Hazardous Environments, telexistence was recognized as a very important technology by the Machinery and Information Industries Bureau of the Ministry of International Trade and Industry, so it was developed as one of the core techniques of Advanced Robot Technology in Hazardous Environments. In fact, it would be more accurate to say that the National Large Scale Project on Advanced Robot Technology in Hazardous Environments was focused on telexistence as a key technology. The first conference presentation was held at the annual conference of the Society of Instrument and Control Engineers in July 1982. This is when the concept of telexistence was first introduced to the academic world with the world-first apparatus of telexistence.

As you can see from this background, telexistence came about completely independently from the American concept of telepresence. It is an interesting historical coincidence that around the same time in the USA, focusing on space development, scientists like Marvin Minsky were coming up with the ideas that led to telepresence. Perhaps technology also includes the timing when it is born and raised.

National Large-scale Project: Advanced Robot Technology in Hazardous Environments

Just at that time, the MITI (Ministry of International Trade and Industry) was investigating a new national large-scale project concerning robots. Twenty years after the dawn of robotics in 1960 when Joseph Engelberger made the first industrial robot UNIMATE, 1980 was being called the first year of the robot, and Japan was known as the robot kingdom. However, the robots that were in use in 1980 were the so-called first-generation playback robots, and second-generation robots were just starting to appear in factories. Mr. Uehara, who was the Deputy Director of MITI at that time, knew that I was studying telexistence, and asked me to talk to him. I explained various ideas, and he told me to put them together and bring them to MITI at once, so I gave up my holiday and wrote the proposal shown in Figure 6. Based on this proposal, the Advanced Robot Technology in Hazardous Environments project was launched in 1983.

Figure 6 Proposal for large-scale project “Advanced Robot Technology in Hazardous Environments.”

When the Development Office for the Advanced Robot Technology in Hazardous Environments project was established in 1983, I worked at the office for the first year to develop the precise planning of the project including what the final goal of this eight-year project should be, how the results should be evaluated, what kind of technologies should be developed to attain the goal, which companies should participate, etc. Advanced Robot Technology in Hazardous Environments is used in nuclear power, ocean and oil facilities and so on. Robots with legs were particularly important. This is because conventional robots with wheels could not step over pipelines or go up and down stairs, so the robots had to have legs. They also needed hands as well as arms to move around while working, and of course they needed three-dimensional vision and sense of touch. Through this research, autonomous intelligent robots are to be developed.

However, autonomous intelligent robots can only work in structured environments. In an unstructured environment like an extreme work environment, supervisory control is necessary. But there are also limits to supervisory control, so there always remain aspects that need to be done directly by humans. However, the environments in the Advanced Robot Technology in Hazardous Environments project included dangerous locations or situations where the operator was far away, so a person could not get there immediately. This is exactly the situation where telexistence can be used effectively. We called this framework the “third-generation framework”, or “third-generation robotics”.

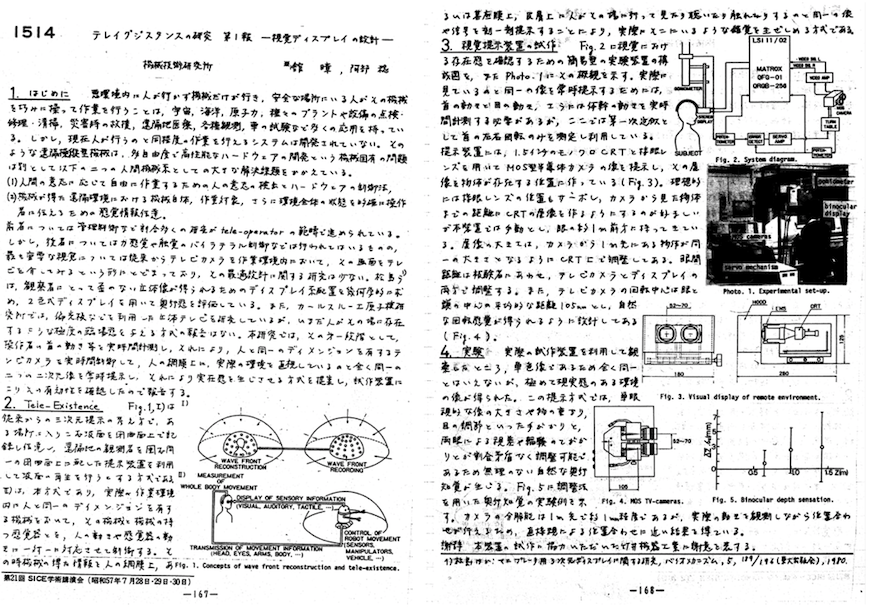

Once the precise project development planning was in place and the project had started, I returned to the laboratory and carried on researching telexistence. Then, the idea of telexistence appeared in the USA, as mentioned above. They called it telepresence. Marvin Minsky, the leading figure in Artificial Intelligence, proposed this concept in the context of space development. This was in 1983. The concept of telepresence itself had appeared in 1980, before being presented in the ARAMIS report in 1983, so it can be said that the concept was born simultaneously in Japan and the USA in 1980. However, I was the first person in the world to actually make a device and test it. I presented the first telexistence machine together with the concept at the SICE annual conference in Japan in July 1982 (Figure 7), and at the RoManSy international conference in June 1984 (Tachi et al. 1984). That is how ground-breaking the concept of telexistence was.

Figure 7 The first paper on telexistence design method and telexistence machine.

(From Susumu Tachi and Minoru Abe: Study on Tele-existence (I). In: Proceedings of the 21st Annual Conference of the Society of Instrument and Control Engineers (SICE), pp. 167-168, July 1982)


References

[Goertz 1952] R. C. Goertz: Fundamentals of general-purpose remote manipulators, Nucleonics, vol. 10, no.11, pp. 36–42, 1952.

[Mosher 1967] R. S. Mosher: Handyman to Hardiman, SAE Technical Paper 670088, doi:10.4271/670088, 1967.

[Sheridan 1974] T. B. Sheridan, and W. R. Ferrell: Man–Machine Systems, MIT Press, Cambridge, MA, 1974.

[Tachi et al. 1980] Susumu Tachi, Kazuo Tanie and Kiyoshi Komoriya: Evaluation Apparatus of Mobility Aids for the Blind (patent filed 1980.12.26); Science and Technology Agency Featured Invention No. 42 (1983.4.18). (in Japanese) [PDF]

[Tachi et al. 1981] Susumu Tachi, Kazuo Tanie and Kiyoshi Komoriya: Operation Method of Manipulators with Sensory Information Display Functions (patent filed 1981.1.11). (in Japanese) [PDF]

[Tachi et al. 1982] Susumu Tachi and Minoru Abe: Study on tele-existence (I). In: Proceedings of the 21st Annual Conference of the Society of Instrument and Control Engineers (SICE), pp. 167-168, July 1982. (in Japanese) [PDF]

[Tachi et al. 1982] Susumu Tachi and Kiyoshi Komoriya: The Third Generation Robotics, Measurement and Control, Vol.21, No.12, pp.1140-1146 (1982.12) (in Japanese) [PDF]

[Tachi 1984] Susumu Tachi: The Third Generation Robot, TECHNOCRAT, Vol.17, No.11, pp.22-30 (1984.11) [PDF]

[Tachi et al. 1984] Susumu Tachi, Kazuo Tanie, Kiyoshi Komoriya and Makoto Kaneko: Tele-existence (I): Design and Evaluation of a Visual Display with Sensation of Presence, in A. Morecki et al. ed., Theory and Practice of Robots and Manipulators, pp.245-254, Kogan Page, 1984. [PDF]

[Tachi and Arai 1985] Susumu Tachi and Hirohiko Arai: Study on Tele-existence (II) -Three Dimensional Color Display with Sensation of Presence-, Proceedings of the '85 ICAR (International Conference on Advanced Robotics), pp. 345-352, Tokyo, Japan, 1985. [PDF]

[Hightower et al. 1987] J. D. Hightower, E. H. Spain and R. W. Bowles: Telepresence: A Hybrid Approach to High Performance Robots, Proceedings of the International Conference on Advanced Robotics (ICAR ’87), Versailles, France, pp. 563–573, 1987.

[Tachi et al. 1988a] Susumu Tachi, Hirohiko Arai and Taro Maeda: Tele-existence Simulator with Artificial Reality (1) -Design and Evaluation of a Binocular Visual Display Using Solid Models-, Proceedings IEEE International Workshop on Intelligent Robots and Systems -Toward the Next Generation Robot and System-, pp. 719-724, Tokyo, Japan, 1988. [PDF]